A systematic study of Reinforcement Learning

Textbook: 「Pythonで学ぶ強化学習」 ("Reinforcement Learning with Python") by Takahiro Kubo (久保孝宏)

——————————————-

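Day 4: 88 pages! There's no way I'll finish this in a single day, but

I suspect this is the core of the book, so it's probably important!

It'll likely take me about a week.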

——————————————-

The first half explains neural networks. The introduction to TensorFlow is interesting.

```python
# explanation_keras.py
import numpy as np
from tensorflow import keras as K  # public import path (the original used tensorflow.python)

model = K.Sequential([
    K.layers.Dense(units=4, input_shape=(2,)),  # Dense = fully connected layer
])
weight, bias = model.layers[0].get_weights()
print("Weight shape is {}.".format(weight.shape))
print("Bias shape is {}.".format(bias.shape))

x = np.random.rand(1, 2)  # Note: rows = number of samples (batch size)
y = model.predict(x)
print("x is ({}) and y is ({}).".format(x.shape, y.shape))
```

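Here, the Sequential model is the simplest kind of model: it just stacks layers in order.

`K.layers.Dense(units=4, input_shape=(2,))` takes 2 inputs and produces 4 outputs.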

```
(rl-book) bash-3.2$ python explanation_keras.py
Weight shape is (2, 4).   # Note: (input_dim, units); Dense computes x @ W + b
Bias shape is (4,).
x is ((1, 2)) and y is ((1, 4)).
```
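Why is the kernel (2, 4) and not (4, 2)? A Dense layer with no activation computes y = x·W + b, with each sample as a row of x, so W must be (input_dim, units). Below is a minimal NumPy sketch of the same computation; the weights are random placeholders, not the values Keras actually initializes (only the zero bias matches Keras's default).

```python
import numpy as np

# Hand-written equivalent of K.layers.Dense(units=4, input_shape=(2,)), no activation.
rng = np.random.default_rng(0)
W = rng.normal(size=(2, 4))  # kernel: (input_dim, units), same shape as get_weights()[0]
b = np.zeros(4)              # bias: (units,); Keras initializes biases to zeros by default

x = rng.random((1, 2))       # 1 sample (one row) with 2 features
y = x @ W + b                # (1, 2) @ (2, 4) -> (1, 4)

print("Weight shape is {}.".format(W.shape))  # Weight shape is (2, 4).
print("Bias shape is {}.".format(b.shape))    # Bias shape is (4,).
print("x is ({}) and y is ({}).".format(x.shape, y.shape))
```

The matrix product reproduces exactly the shapes printed by the Keras script.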

——————————————-

```python
import numpy as np
from tensorflow import keras as K

# 2-layer neural network.
model = K.Sequential([
    K.layers.Dense(units=4, input_shape=(2,),
                   activation="sigmoid"),  # first layer, with a sigmoid activation
    K.layers.Dense(units=4),  # second layer (its input size, 4, is inferred)
])

# Make batch size = 3 data (dimension of x is 2).
batch = np.random.rand(3, 2)  # 3 samples
y = model.predict(batch)
print(y.shape)  # Will be (3, 4)
```

```
(rl-book) bash-3.2$ python explanation_keras.py
(3, 4)   # 3 rows (samples) x 4 outputs
```
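The same two-layer forward pass can be written out by hand to see where (3, 4) comes from. This is a sketch with random placeholder weights in the shapes Keras would create; the sigmoid is applied only after the first layer, as in the model above.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
# Placeholder weights with the same shapes as the Keras layers.
W1, b1 = rng.normal(size=(2, 4)), np.zeros(4)  # first Dense: 2 -> 4, sigmoid
W2, b2 = rng.normal(size=(4, 4)), np.zeros(4)  # second Dense: 4 -> 4, linear

batch = rng.random((3, 2))    # 3 samples, 2 features each
h = sigmoid(batch @ W1 + b1)  # (3, 2) @ (2, 4) -> (3, 4), values in (0, 1)
y = h @ W2 + b2               # (3, 4) @ (4, 4) -> (3, 4)
print(y.shape)  # (3, 4)
```

The batch dimension simply passes through every layer, which is why the number of rows in the output equals the number of input samples.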

——————————————-

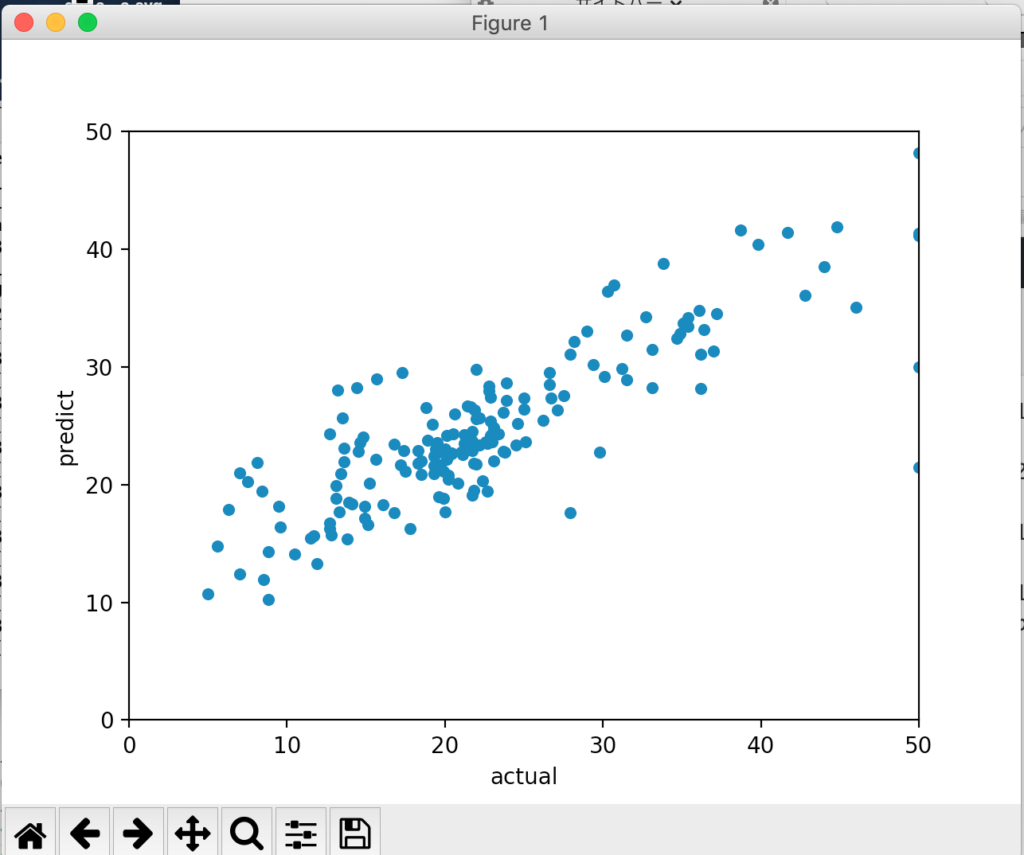

Then backpropagation is demonstrated on the Boston housing price dataset.

```python
# explanation_keras_boston.py
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.datasets import load_boston  # removed in scikit-learn 1.2; needs an older version
import pandas as pd
import matplotlib.pyplot as plt
from tensorflow import keras as K

dataset = load_boston()
y = dataset.target
X = dataset.data

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33)

model = K.Sequential([
    K.layers.BatchNormalization(input_shape=(13,)),  # normalize the 13 input features
    K.layers.Dense(units=13, activation="softplus",
                   kernel_regularizer="l1"),  # softplus activation; L1 regularization to curb overfitting
    K.layers.Dense(units=1)  # output layer: a single predicted price
])
# Loss: mean squared error; optimizer: stochastic gradient descent.
model.compile(loss="mean_squared_error", optimizer="sgd")
model.fit(X_train, y_train, epochs=8)

predicts = model.predict(X_test)

result = pd.DataFrame({
    "predict": np.reshape(predicts, (-1,)),
    "actual": y_test
})
limit = np.max(y_test)

result.plot.scatter(x="actual", y="predict", xlim=(0, limit), ylim=(0, limit))
plt.show()
```

```
(rl-book) bash-3.2$ python explanation_keras_boston.py
Epoch 1/8
2019-08-14 19:49:13.553839: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA
339/339 [==============================] - 0s 839us/sample - loss: 173.9210
Epoch 2/8
339/339 [==============================] - 0s 34us/sample - loss: 66.8153
Epoch 3/8
339/339 [==============================] - 0s 40us/sample - loss: 97.3949
Epoch 4/8
339/339 [==============================] - 0s 36us/sample - loss: 41.8964
Epoch 5/8
339/339 [==============================] - 0s 43us/sample - loss: 45.5689
Epoch 6/8
339/339 [==============================] - 0s 38us/sample - loss: 24.3077
Epoch 7/8
339/339 [==============================] - 0s 38us/sample - loss: 33.8164
Epoch 8/8
339/339 [==============================] - 0s 38us/sample - loss: 25.8822
```
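`model.fit` hides the backpropagation itself. To see what it does, here is a minimal NumPy sketch that computes the gradients by hand with the chain rule for the same layer shapes (13 -> 13 softplus -> 1) and the same MSE loss. Assumptions, all mine: synthetic random data stands in for the Boston dataset, there is no BatchNormalization or L1 penalty, and it uses plain full-batch gradient descent with an arbitrarily chosen learning rate and epoch count rather than Keras's mini-batch SGD.

```python
import numpy as np

def softplus(z):
    return np.log1p(np.exp(z))

def sigmoid(z):  # derivative of softplus
    return 1.0 / (1.0 + np.exp(-z))

rng = np.random.default_rng(0)
# Synthetic regression data (stand-in for the 13 Boston features and prices).
X = rng.normal(size=(200, 13))
true_w = rng.normal(size=(13, 1))
y = X @ true_w + 0.1 * rng.normal(size=(200, 1))

# Same layer shapes as the Keras model: 13 -> 13 (softplus) -> 1.
W1 = rng.normal(scale=0.1, size=(13, 13)); b1 = np.zeros(13)
W2 = rng.normal(scale=0.1, size=(13, 1));  b2 = np.zeros(1)
lr = 0.005       # assumed learning rate
losses = []

for epoch in range(300):
    # Forward pass
    z1 = X @ W1 + b1
    h = softplus(z1)
    pred = h @ W2 + b2
    losses.append(np.mean((pred - y) ** 2))  # mean squared error

    # Backward pass: propagate dL/dpred back through each layer
    n = X.shape[0]
    d_pred = 2.0 * (pred - y) / n   # dL/dpred
    dW2 = h.T @ d_pred              # dL/dW2
    db2 = d_pred.sum(axis=0)
    d_h = d_pred @ W2.T             # dL/dh
    d_z1 = d_h * sigmoid(z1)        # softplus'(z) = sigmoid(z)
    dW1 = X.T @ d_z1                # dL/dW1
    db1 = d_z1.sum(axis=0)

    # Gradient descent update
    W1 -= lr * dW1; b1 -= lr * db1
    W2 -= lr * dW2; b2 -= lr * db2

print("first loss: {:.3f}, last loss: {:.3f}".format(losses[0], losses[-1]))
```

With full-batch updates and a small step the loss falls fairly smoothly; Keras's mini-batch SGD uses noisier gradient estimates, which is one reason the epoch losses in the log above can go up as well as down.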

Next, digit recognition with a Convolutional Neural Network (CNN).

```python
# explanation_keras_mnist.py
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.datasets import load_digits
from sklearn.metrics import classification_report
from tensorflow import keras as K

# load_digits: scikit-learn's 8x8 grayscale digits (not the 28x28 MNIST images)
dataset = load_digits()
image_shape = (8, 8, 1)
num_class = 10

y = dataset.target
y = K.utils.to_categorical(y, num_class)  # one-hot encode the labels

X = dataset.data
X = np.array([data.reshape(image_shape) for data in X])  # 64 values -> 8x8x1 image

X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.33)

model = K.Sequential([
    K.layers.Conv2D(
        5, kernel_size=3, strides=1, padding="same",
        input_shape=image_shape, activation="relu"),
    K.layers.Conv2D(
        3, kernel_size=2, strides=1, padding="same",
        activation="relu"),
    K.layers.Flatten(),
    K.layers.Dense(units=num_class, activation="softmax")
])
model.compile(loss="categorical_crossentropy", optimizer="sgd")
model.fit(X_train, y_train, epochs=8)

predicts = model.predict(X_test)
predicts = np.argmax(predicts, axis=1)  # class probabilities -> predicted digit
actual = np.argmax(y_test, axis=1)      # one-hot -> true digit
print(classification_report(actual, predicts))
```

```
(rl-book) bash-3.2$ python explanation_keras_mnist.py
Epoch 1/8
2019-08-14 19:55:51.712289: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2 FMA
1203/1203 [==============================] - 0s 416us/sample - loss: 2.0072
Epoch 2/8
1203/1203 [==============================] - 0s 168us/sample - loss: 1.1737
Epoch 3/8
1203/1203 [==============================] - 0s 140us/sample - loss: 0.7097
Epoch 4/8
1203/1203 [==============================] - 0s 140us/sample - loss: 0.4927
Epoch 5/8
1203/1203 [==============================] - 0s 198us/sample - loss: 0.3667
Epoch 6/8
1203/1203 [==============================] - 0s 211us/sample - loss: 0.2978
Epoch 7/8
1203/1203 [==============================] - 0s 158us/sample - loss: 0.2376
Epoch 8/8
1203/1203 [==============================] - 0s 146us/sample - loss: 0.2144
              precision    recall  f1-score   support

           0       0.98      0.95      0.96        56
           1       0.91      0.86      0.88        58
           2       0.93      0.98      0.95        51
           3       0.87      0.89      0.88        65
           4       0.95      0.90      0.93        62
           5       0.93      0.95      0.94        60
           6       0.88      0.97      0.92        63
           7       0.96      0.95      0.96        57
           8       0.88      0.93      0.91        57
           9       0.92      0.83      0.87        65

   micro avg       0.92      0.92      0.92       594
   macro avg       0.92      0.92      0.92       594
weighted avg       0.92      0.92      0.92       594
```
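To see what the two Conv2D layers do to the shapes, here is a minimal NumPy sketch of a stride-1 convolution with zero "same" padding, followed by Flatten and softmax. Like Keras, it slides the kernel without flipping it (strictly, cross-correlation) and stores kernels as (kh, kw, in_channels, out_channels). The weights are random placeholders; `conv2d_same` is a hypothetical helper, not a Keras function.

```python
import numpy as np

def conv2d_same(x, kernels, bias):
    """x: (H, W, C_in); kernels: (kh, kw, C_in, C_out); bias: (C_out,).
    Stride 1, zero padding chosen so the output keeps H and W.
    For even kernel sizes the extra row/column of padding goes at the bottom/right."""
    kh, kw, c_in, c_out = kernels.shape
    H, W, _ = x.shape
    pt, pl = (kh - 1) // 2, (kw - 1) // 2
    padded = np.pad(x, ((pt, kh - 1 - pt), (pl, kw - 1 - pl), (0, 0)))
    out = np.empty((H, W, c_out))
    for i in range(H):
        for j in range(W):
            patch = padded[i:i + kh, j:j + kw, :]              # (kh, kw, C_in)
            out[i, j, :] = np.tensordot(patch, kernels, axes=3) + bias  # sum over the patch
    return out

def relu(z):
    return np.maximum(z, 0.0)

rng = np.random.default_rng(0)
image = rng.random((8, 8, 1))  # same shape as one reshaped load_digits sample

h1 = relu(conv2d_same(image, rng.normal(size=(3, 3, 1, 5)), np.zeros(5)))  # 5 filters, 3x3
h2 = relu(conv2d_same(h1, rng.normal(size=(2, 2, 5, 3)), np.zeros(3)))     # 3 filters, 2x2
flat = h2.reshape(-1)                            # Flatten: 8 * 8 * 3 = 192 values
logits = flat @ rng.normal(size=(192, 10))       # Dense to 10 classes
probs = np.exp(logits - logits.max())
probs /= probs.sum()                             # softmax: probabilities summing to 1
print(h1.shape, h2.shape, flat.shape, probs.shape)  # (8, 8, 5) (8, 8, 3) (192,) (10,)
```

Because of "same" padding the 8x8 grid is preserved through both convolutions, so the final Dense layer sees 192 features; `np.argmax(probs)` would then give the predicted digit, just like the `np.argmax` call in the script.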

Next comes the implementation using neural networks.

Module structure:

- Agent
- Trainer
- Observer
- Logger
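As I understand the division of labor: the Observer wraps the environment and preprocesses states, the Agent picks actions, the Trainer runs the episode loop (and, in a real implementation, the learning updates), and the Logger records progress. The sketch below only illustrates that split. All class and method names, the toy environment, and the random policy standing in for a neural network are hypothetical, not the book's actual code.

```python
import random

class ToyEnv:
    """Hypothetical 1-D environment: start at 0, reward 1 for reaching +3."""
    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):  # action: 0 = left, 1 = right
        self.pos += 1 if action == 1 else -1
        done = self.pos >= 3 or self.pos <= -3
        reward = 1.0 if self.pos >= 3 else 0.0
        return self.pos, reward, done

class Observer:
    """Wraps the environment and converts raw states into agent inputs."""
    def __init__(self, env):
        self.env = env

    def transform(self, state):
        return state / 3.0  # e.g. scale the position into [-1, 1]

    def reset(self):
        return self.transform(self.env.reset())

    def step(self, action):
        state, reward, done = self.env.step(action)
        return self.transform(state), reward, done

class Agent:
    """Chooses actions; a random policy stands in for a neural network."""
    def __init__(self, actions, seed=0):
        self.actions = actions
        self.rng = random.Random(seed)

    def policy(self, state):
        return self.rng.choice(self.actions)

class Logger:
    """Records the total reward of each episode."""
    def __init__(self):
        self.rewards = []

    def log(self, episode, reward):
        self.rewards.append(reward)

class Trainer:
    """Runs episodes; a learning agent would also be updated inside this loop."""
    def __init__(self, logger):
        self.logger = logger

    def train(self, env, agent, episodes=10, max_steps=50):
        for e in range(episodes):
            state = env.reset()
            total = 0.0
            for _ in range(max_steps):
                action = agent.policy(state)
                state, reward, done = env.step(action)
                total += reward
                if done:
                    break
            self.logger.log(e, total)

logger = Logger()
Trainer(logger).train(Observer(ToyEnv()), Agent(actions=[0, 1]), episodes=10)
print(logger.rewards)
```

Keeping these roles separate means the Agent can later be swapped for a network-based one without touching the environment wrapper or the training loop.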

I now have a rough grasp of the theory. I'll take a break here and set aside Deep Q-Network and everything after it for now.