Today's note: "Building Deep Network: set up Maven in IntelliJ IDEA and get DL4J running!"

Building a Multilayer Perceptron Network

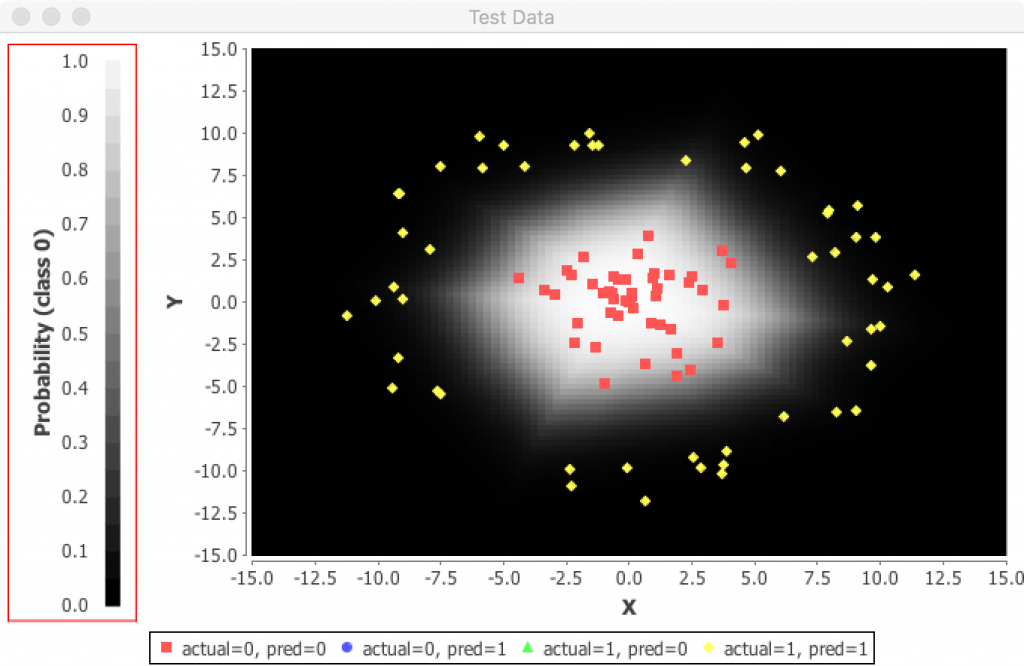

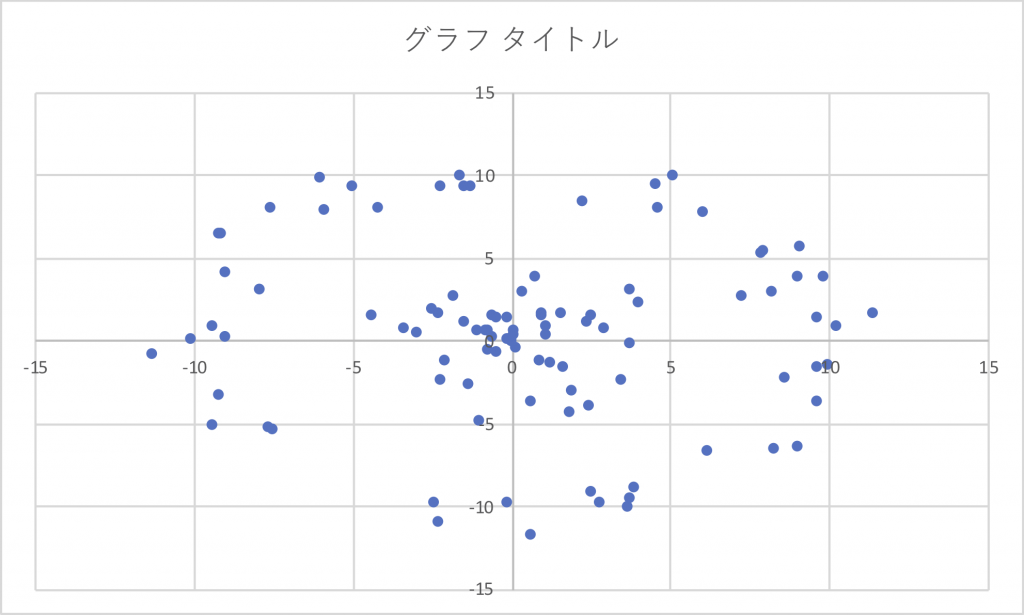

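What does the MLPClassifierSaturn data look like?

Training data: 500 XY coordinate points

Evaluation data: 100 XY coordinate points

In short, we use a neural network to build a classifier that separates the points into two groups: those forming Saturn's core and those forming its ring. Every point comes with a class label, so this is supervised classification rather than clustering.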
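Why does the network need a hidden layer? A disk surrounded by a ring cannot be split by any straight line in the (x, y) plane, but the distance from the center separates the two groups cleanly. The sketch below is a hypothetical illustration, not the real `saturn_data_*.csv` files (those come from Jason Baldridge's simdata, credited in the code header). It assumes both groups are centered on the origin, uses made-up radii (`CORE_MAX_R`, `RING_MIN_R`, `RING_MAX_R`), stores rows as `label, x, y`, and checks that a simple radius threshold labels every generated point correctly.

```java
import java.util.Random;

// Hypothetical Saturn-like data: class 0 = core (disk), class 1 = ring (annulus), both centered at the origin.
// The radii are illustrative assumptions, not values taken from the real CSV files.
class SaturnLikeData {
    static final double CORE_MAX_R = 3.0;  // assumed core radius
    static final double RING_MIN_R = 6.0;  // assumed inner edge of the ring
    static final double RING_MAX_R = 9.0;  // assumed outer edge of the ring

    // Returns n rows of {label, x, y}
    static double[][] generate(int n, long seed) {
        Random rng = new Random(seed);
        double[][] rows = new double[n][3];
        for (int i = 0; i < n; i++) {
            int label = rng.nextInt(2);
            double r = (label == 0)
                    ? CORE_MAX_R * Math.sqrt(rng.nextDouble())                    // uniform over the disk area
                    : RING_MIN_R + (RING_MAX_R - RING_MIN_R) * rng.nextDouble();  // radius uniform within the ring
            double theta = 2 * Math.PI * rng.nextDouble();
            rows[i][0] = label;
            rows[i][1] = r * Math.cos(theta);
            rows[i][2] = r * Math.sin(theta);
        }
        return rows;
    }

    // Not linearly separable in (x, y), but separable by the distance from the origin
    static int classifyByRadius(double x, double y) {
        double threshold = (CORE_MAX_R + RING_MIN_R) / 2;
        return Math.hypot(x, y) < threshold ? 0 : 1;
    }

    public static void main(String[] args) {
        double[][] data = generate(500, 123);
        int correct = 0;
        for (double[] row : data) {
            if (classifyByRadius(row[1], row[2]) == (int) row[0]) correct++;
        }
        System.out.println("radius rule: " + correct + "/" + data.length + " correct");
    }
}
```

The network is never told about the radius; the hidden ReLU layer has to learn a comparable nonlinear boundary from the raw x and y values.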

Classes used in this example

ーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーー

Class MultiLayerConfiguration org.deeplearning4j.nn.conf

Class MultiLayerNetwork org.deeplearning4j.nn.multilayer

Class Evaluation org.deeplearning4j.eval

Class RecordReaderDataSetIterator org.deeplearning4j.datasets.datavec

Interface RecordReader org.datavec.api.records.reader

Interface DataSetIterator org.nd4j.linalg.dataset.api.iterator

Interface INDArray org.nd4j.linalg.api.ndarray

ーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーー
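Of these, `RecordReaderDataSetIterator` is the one that turns CSV rows into training examples. In the code, `new RecordReaderDataSetIterator(rr, batchSize, 0, 2)` treats column 0 as the label and expects 2 classes. The plain-Java sketch below mimics that conversion for a single record: the label column becomes a one-hot vector of length `numClasses`, and the remaining columns become the features. It is a hypothetical helper (the names and the sample record are made up), not the library's implementation, and it ignores batching.

```java
import java.util.Arrays;

// Sketch of how one CSV record "label,x,y" becomes (features, one-hot label)
// when labelIndex = 0 and numClasses = 2. Not DataVec/DL4J code.
class CsvRecordToExample {

    // All columns except labelIndex, in their original order
    static double[] features(String csvLine, int labelIndex) {
        String[] cols = csvLine.split(",");
        double[] f = new double[cols.length - 1];
        int k = 0;
        for (int i = 0; i < cols.length; i++) {
            if (i != labelIndex) f[k++] = Double.parseDouble(cols[i].trim());
        }
        return f;
    }

    // Class index in column labelIndex, expanded to a one-hot vector of length numClasses
    static double[] oneHotLabel(String csvLine, int labelIndex, int numClasses) {
        String[] cols = csvLine.split(",");
        int cls = (int) Double.parseDouble(cols[labelIndex].trim());
        if (cls < 0 || cls >= numClasses) {
            throw new IllegalArgumentException("label out of range: " + cls);
        }
        double[] y = new double[numClasses];
        y[cls] = 1.0;
        return y;
    }

    public static void main(String[] args) {
        String line = "1,-7.5,2.25"; // hypothetical record: label, x, y
        System.out.println(Arrays.toString(features(line, 0)));       // prints [-7.5, 2.25]
        System.out.println(Arrays.toString(oneHotLabel(line, 0, 2))); // prints [0.0, 1.0]
    }
}
```

This is why `numClasses` is the number of possible label values (2 here), not the number of columns in the file.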

```java
package org.deeplearning4j.examples.feedforward.classification;

import org.datavec.api.records.reader.RecordReader;
import org.datavec.api.records.reader.impl.csv.CSVRecordReader;
import org.datavec.api.split.FileSplit;
import org.deeplearning4j.datasets.datavec.RecordReaderDataSetIterator;
import org.nd4j.linalg.activations.Activation;
import org.nd4j.linalg.dataset.api.iterator.DataSetIterator;
import org.deeplearning4j.eval.Evaluation;
import org.deeplearning4j.nn.api.OptimizationAlgorithm;
import org.deeplearning4j.nn.conf.MultiLayerConfiguration;
import org.deeplearning4j.nn.conf.NeuralNetConfiguration;
import org.deeplearning4j.nn.conf.Updater;
import org.deeplearning4j.nn.conf.layers.DenseLayer;
import org.deeplearning4j.nn.conf.layers.OutputLayer;
import org.deeplearning4j.nn.multilayer.MultiLayerNetwork;
import org.deeplearning4j.nn.weights.WeightInit;
import org.deeplearning4j.optimize.listeners.ScoreIterationListener;
import org.nd4j.linalg.api.ndarray.INDArray;
import org.nd4j.linalg.dataset.DataSet;
import org.nd4j.linalg.factory.Nd4j;
import org.nd4j.linalg.io.ClassPathResource;
import org.nd4j.linalg.learning.config.Nesterovs;
import org.nd4j.linalg.lossfunctions.LossFunctions.LossFunction;

import java.io.File;

/**
 * "Saturn" Data Classification Example
 *
 * Based on the data from Jason Baldridge:
 * https://github.com/jasonbaldridge/try-tf/tree/master/simdata
 *
 * @author Josh Patterson
 * @author Alex Black (added plots)
 *
 */
public class MLPClassifierSaturn {

    public static void main(String[] args) throws Exception {
        Nd4j.ENFORCE_NUMERICAL_STABILITY = true;
        int batchSize = 50;           // minibatch size: number of examples used per parameter update
        int seed = 123;               // random seed: the generated numbers are pseudo-random, so a fixed seed
                                      // gives the same sequence on every run (useful when debugging)
        double learningRate = 0.005;  // learning rate: step-size coefficient for the updates computed by backpropagation
        int nEpochs = 30;             // number of epochs: how many full passes over the training set
                                      // (the learning rate is often decreased as epochs progress; this example keeps it constant)

        int numInputs = 2;            // 2 neurons in the input layer (x, y)
        int numOutputs = 2;           // 2 neurons in the output layer (one per class)
        int numHiddenNodes = 20;      // 20 neurons in the hidden layer

        final String filenameTrain = new ClassPathResource("/classification/saturn_data_train.csv").getFile().getPath(); // training data file
        final String filenameTest  = new ClassPathResource("/classification/saturn_data_eval.csv").getFile().getPath();  // evaluation data file

        //Load the training data:
        RecordReader rr = new CSVRecordReader();  // default constructor: comma-delimited, no header lines skipped
        rr.initialize(new FileSplit(new File(filenameTrain)));
        DataSetIterator trainIter = new RecordReaderDataSetIterator(rr, batchSize, 0, 2);
        // new RecordReaderDataSetIterator(
        //     recordReader,
        //     batchSize,
        //     labelIndex,   // column index of the label (0 = first column)
        //     numClasses    // number of classes (possible label values), not the number of columns
        // );

        //Load the test/evaluation data:
        RecordReader rrTest = new CSVRecordReader();
        rrTest.initialize(new FileSplit(new File(filenameTest)));
        DataSetIterator testIter = new RecordReaderDataSetIterator(rrTest, batchSize, 0, 2);

        //log.info("Build model....");
        // Network architecture: multilayer perceptron
        MultiLayerConfiguration conf = new NeuralNetConfiguration.Builder()
                .seed(seed)                                  // fix the RNG seed (e.g. for weight initialization) so runs are reproducible
                .updater(new Nesterovs(learningRate, 0.9))   // Nesterov accelerated gradient, momentum factor 0.9
                .list()
                .layer(0, new DenseLayer.Builder().nIn(numInputs).nOut(numHiddenNodes) // hidden layer 1
                        .weightInit(WeightInit.XAVIER)       // Xavier initialization
                        .activation(Activation.RELU)         // ReLU activation
                        .build())
                .layer(1, new OutputLayer.Builder(LossFunction.NEGATIVELOGLIKELIHOOD)  // output layer
                        .weightInit(WeightInit.XAVIER)       // Xavier initialization
                        .activation(Activation.SOFTMAX)      // softmax activation
                        .nIn(numHiddenNodes).nOut(numOutputs).build())
                .pretrain(false).backprop(true).build();

        MultiLayerNetwork model = new MultiLayerNetwork(conf);  // build the model
        model.init();
        model.setListeners(new ScoreIterationListener(10));     //Print score every 10 parameter updates

        for (int n = 0; n < nEpochs; n++) {  // one pass over the training data per epoch
            model.fit(trainIter);
        }

        System.out.println("Evaluate model....");  // evaluate the model
        Evaluation eval = new Evaluation(numOutputs);
        while (testIter.hasNext()) {
            DataSet t = testIter.next();
            INDArray features = t.getFeatures();
            INDArray labels = t.getLabels();
            INDArray predicted = model.output(features, false);
            eval.eval(labels, predicted);
        }
        System.out.println(eval.stats());  // print the evaluation results to the console

        //------------------------------------------------------------------------------------
        //Training is complete. Code that follows is for plotting the data & predictions only

        double xMin = -15;
        double xMax = 15;
        double yMin = -15;
        double yMax = 15;

        //Let's evaluate the predictions at every point in the x/y input space, and plot this in the background
        int nPointsPerAxis = 100;
        double[][] evalPoints = new double[nPointsPerAxis * nPointsPerAxis][2];
        int count = 0;
        for (int i = 0; i < nPointsPerAxis; i++) {
            for (int j = 0; j < nPointsPerAxis; j++) {
                double x = i * (xMax - xMin) / (nPointsPerAxis - 1) + xMin;
                double y = j * (yMax - yMin) / (nPointsPerAxis - 1) + yMin;

                evalPoints[count][0] = x;
                evalPoints[count][1] = y;

                count++;
            }
        }

        INDArray allXYPoints = Nd4j.create(evalPoints);
        INDArray predictionsAtXYPoints = model.output(allXYPoints);

        //Get all of the training data in a single array, and plot it:
        rr.initialize(new FileSplit(new File(filenameTrain)));
        rr.reset();
        int nTrainPoints = 500;
        trainIter = new RecordReaderDataSetIterator(rr, nTrainPoints, 0, 2);
        DataSet ds = trainIter.next();
        // PlotUtil: plotting helper class from the same examples package (not shown in this post)
        PlotUtil.plotTrainingData(ds.getFeatures(), ds.getLabels(), allXYPoints, predictionsAtXYPoints, nPointsPerAxis);

        //Get test data, run the test data through the network to generate predictions, and plot those predictions:
        rrTest.initialize(new FileSplit(new File(filenameTest)));
        rrTest.reset();
        int nTestPoints = 100;
        testIter = new RecordReaderDataSetIterator(rrTest, nTestPoints, 0, 2);
        ds = testIter.next();
        INDArray testPredicted = model.output(ds.getFeatures());
        PlotUtil.plotTestData(ds.getFeatures(), ds.getLabels(), testPredicted, allXYPoints, predictionsAtXYPoints, nPointsPerAxis);

        System.out.println("****************Example finished********************");
    }
}
```
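To make the configuration concrete, here is a plain-Java sketch (no DL4J) of what the 2-20-2 network computes for one point: a dense layer followed by ReLU, a dense layer followed by softmax, and the negative log-likelihood of the true class, which is the loss selected by `LossFunction.NEGATIVELOGLIKELIHOOD` (with a softmax output this is the usual multi-class cross-entropy). The class and method names are hypothetical, the demo weights are made up rather than taken from the trained model, and Xavier initialization and the Nesterov updater are left out; it is not DL4J's implementation.

```java
// Plain-Java sketch of the forward pass configured above: dense -> ReLU -> dense -> softmax,
// plus the negative log-likelihood of the true class. Not DL4J code.
class MlpForwardSketch {

    // Dense layer with W shaped {nIn, nOut} (as in model.summary()): out[j] = b[j] + sum_i in[i] * W[i][j]
    static double[] dense(double[] in, double[][] w, double[] b) {
        double[] out = b.clone();
        for (int i = 0; i < in.length; i++) {
            for (int j = 0; j < out.length; j++) {
                out[j] += in[i] * w[i][j];
            }
        }
        return out;
    }

    // ReLU: max(0, z), element-wise
    static double[] relu(double[] z) {
        double[] out = new double[z.length];
        for (int i = 0; i < z.length; i++) out[i] = Math.max(0.0, z[i]);
        return out;
    }

    // Softmax; subtracting the maximum first keeps exp() from overflowing
    static double[] softmax(double[] z) {
        double max = Double.NEGATIVE_INFINITY;
        for (double v : z) max = Math.max(max, v);
        double[] out = new double[z.length];
        double sum = 0.0;
        for (int i = 0; i < z.length; i++) {
            out[i] = Math.exp(z[i] - max);
            sum += out[i];
        }
        for (int i = 0; i < z.length; i++) out[i] /= sum;
        return out;
    }

    // Negative log-likelihood of the true class for a single example
    static double nll(double[] probs, int label) {
        return -Math.log(probs[label]);
    }

    // input -> hidden layer (ReLU) -> output layer (softmax)
    static double[] forward(double[] x, double[][] w0, double[] b0, double[][] w1, double[] b1) {
        return softmax(dense(relu(dense(x, w0, b0)), w1, b1));
    }

    public static void main(String[] args) {
        // Hypothetical tiny weights (2 inputs, 2 hidden units, 2 outputs), not the trained model's
        double[][] w0 = {{1, 0}, {0, 1}};
        double[] b0 = {0, 0};
        double[][] w1 = {{1, -1}, {0, 0}};
        double[] b1 = {0, 0};
        double[] p = forward(new double[]{1, -1}, w0, b0, w1, b1);
        System.out.printf("p = [%.4f, %.4f], NLL(label 0) = %.4f%n", p[0], p[1], nll(p, 0));
    }
}
```

The real network is the same computation with 20 hidden units and the trained `W`/`b` values; the predicted class is the output with the larger probability.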

Console output

```
o.n.l.f.Nd4jBackend - Loaded [CpuBackend] backend
o.n.n.NativeOpsHolder - Number of threads used for NativeOps: 2
o.n.n.Nd4jBlas - Number of threads used for BLAS: 2
o.n.l.a.o.e.DefaultOpExecutioner - Backend used: [CPU]; OS: [Mac OS X]
o.n.l.a.o.e.DefaultOpExecutioner - Cores: [4]; Memory: [3.6GB];
o.n.l.a.o.e.DefaultOpExecutioner - Blas vendor: [MKL]
o.d.n.m.MultiLayerNetwork - Starting MultiLayerNetwork with WorkspaceModes set to [training: ENABLED; inference: ENABLED], cacheMode set to [NONE]
o.d.o.l.ScoreIterationListener - Score at iteration 0 is 1.9500187683105468
o.d.o.l.ScoreIterationListener - Score at iteration 1 is 1.2153868865966797
o.d.o.l.ScoreIterationListener - Score at iteration 2 is 1.1008527374267578
o.d.o.l.ScoreIterationListener - Score at iteration 3 is 1.1935407257080077
o.d.o.l.ScoreIterationListener - Score at iteration 4 is 0.6177032470703125
o.d.o.l.ScoreIterationListener - Score at iteration 5 is 0.5722542190551758
..........
o.d.o.l.ScoreIterationListener - Score at iteration 295 is 0.05237224578857422
o.d.o.l.ScoreIterationListener - Score at iteration 296 is 0.057432966232299806
o.d.o.l.ScoreIterationListener - Score at iteration 297 is 0.05932496547698975
o.d.o.l.ScoreIterationListener - Score at iteration 298 is 0.05965335845947266
o.d.o.l.ScoreIterationListener - Score at iteration 299 is 0.050114612579345706
Evaluate model....

========================Evaluation Metrics========================
 # of classes:    2
 Accuracy:        1.0000
 Precision:       1.0000
 Recall:          1.0000
 F1 Score:        1.0000
Precision, recall & F1: reported for positive class (class 1 - "1") only


=========================Confusion Matrix=========================
  0  1
-------
 48  0 | 0 = 0
  0 52 | 1 = 1

Confusion matrix format: Actual (rowClass) predicted as (columnClass) N times
==================================================================
****************Example finished********************
```
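The log numbers its iterations 0 to 299. Each minibatch produces one parameter update ("iteration"): 500 training points with `batchSize = 50` give 10 minibatches per epoch, and `nEpochs = 30` gives 300 updates in total, over which the score falls from about 1.95 to about 0.05. A small sketch of that arithmetic (the class and method names are hypothetical):

```java
// Arithmetic behind the iteration numbers in the training log:
// one parameter update per minibatch, repeated for every epoch.
class IterationCount {

    // Minibatches per epoch; the last minibatch may be smaller than batchSize
    static int updatesPerEpoch(int nExamples, int batchSize) {
        return (nExamples + batchSize - 1) / batchSize;
    }

    static int totalUpdates(int nExamples, int batchSize, int nEpochs) {
        return updatesPerEpoch(nExamples, batchSize) * nEpochs;
    }

    public static void main(String[] args) {
        int total = totalUpdates(500, 50, 30); // 500 training points, batchSize = 50, nEpochs = 30
        System.out.println("updates: " + total + ", last iteration index: " + (total - 1));
        // prints "updates: 300, last iteration index: 299"
    }
}
```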

All 100 evaluation points were classified correctly (accuracy 1.0): 48 as class 0 (the core) and 52 as class 1 (the ring).
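As the output notes, `eval.stats()` reports precision, recall and F1 for the positive class (class 1) only, and the confusion matrix is laid out as actual class by row, predicted class by column. The sketch below recomputes the metrics from such a matrix using the standard definitions; the class and method names are hypothetical, and the second matrix in the test is made up to exercise a non-perfect case.

```java
// Metrics from a confusion matrix cm[actual][predicted], the layout printed by eval.stats().
class BinaryMetrics {

    static double accuracy(int[][] cm) {
        int correct = 0, total = 0;
        for (int a = 0; a < cm.length; a++) {
            for (int p = 0; p < cm[a].length; p++) {
                total += cm[a][p];
                if (a == p) correct += cm[a][p];
            }
        }
        return total == 0 ? 0.0 : (double) correct / total;
    }

    // precision = TP / (TP + FP): of everything predicted as `positive`, the fraction that really is
    static double precision(int[][] cm, int positive) {
        int tp = cm[positive][positive];
        int predictedPositive = 0;
        for (int[] row : cm) predictedPositive += row[positive];
        return predictedPositive == 0 ? 0.0 : (double) tp / predictedPositive;
    }

    // recall = TP / (TP + FN): of everything that really is `positive`, the fraction that was found
    static double recall(int[][] cm, int positive) {
        int tp = cm[positive][positive];
        int actualPositive = 0;
        for (int v : cm[positive]) actualPositive += v;
        return actualPositive == 0 ? 0.0 : (double) tp / actualPositive;
    }

    // F1 = harmonic mean of precision and recall
    static double f1(int[][] cm, int positive) {
        double p = precision(cm, positive);
        double r = recall(cm, positive);
        return (p + r) == 0 ? 0.0 : 2 * p * r / (p + r);
    }

    public static void main(String[] args) {
        int[][] cm = {{48, 0}, {0, 52}}; // confusion matrix from the run above
        System.out.printf("Accuracy %.4f, Precision %.4f, Recall %.4f, F1 %.4f%n",
                accuracy(cm), precision(cm, 1), recall(cm, 1), f1(cm, 1));
    }
}
```

With no off-diagonal entries every metric is 1, which is what the evaluation above reports.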

```java
System.out.println(model.summary());
System.out.println(model.params());
```

prints the following:

```
================================================================================
LayerName (LayerType)   nIn,nOut   TotalParams   ParamsShape
================================================================================
layer0 (DenseLayer)     2,20       60            W:{2,20}, b:{1,20}
layer1 (OutputLayer)    20,2       42            W:{20,2}, b:{1,2}
--------------------------------------------------------------------------------
            Total Parameters:  102
        Trainable Parameters:  102
           Frozen Parameters:  0
================================================================================
[[ 0.5051, -0.1064, -0.5878, 0.2147, -0.2506, 0.1694, 0.1828, 0.3966, -0.1159, -1.0667,
  -0.0039, 0.0414, -0.2705, 0.0965, -0.0151, -0.0183, -0.2440, 0.3099, 0.3838, -0.1108,
  0.5253, 0.6209, -0.1041, -0.1726, -0.3511, -0.1890, 0.4551, 0.1900, -0.3496, 0.0644,
  0.0187, 0.0306, 0.5229, -0.0608, -0.4564, 0.1865, 0.0799, 0.1576, -0.0933, 0.4160,
  0.1516, -0.4136, 0.2882, 0.1872, -0.2897, -0.0136, -0.1874, 0.5774, -0.1431, -0.2045,
  -0.3704, 0.2499, 0.0608, -0.1484, 0.2614, 0.9763, -0.0547, -0.1443, 0.1029, -0.0322,
  0.0677, -0.7437, 0.5813, -0.1314, -0.3347, -0.2371, -0.3985, 0.4483, -0.4175, -0.1150,
  -0.2141, 0.2760, 0.1480, -0.1473, 0.1253, 0.6902, -0.1761, -0.5307, 0.2276, -0.0136,
  -0.1594, 0.4310, 0.0827, -0.4691, 0.5227, -0.1974, 0.1377, -0.5734, 0.0795, 0.4972,
  0.6973, -0.2429, 0.0846, 0.2800, -0.3221, -0.9952, 0.0485, -0.0923, 0.0424, 0.1793,
  1.0446, -1.0446]]
Evaluate model....
```
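The `TotalParams` column follows from the layer shapes: a dense layer (and the output layer, which is also fully connected) has an `nIn × nOut` weight matrix `W` plus `nOut` biases `b`. A quick check of the 60 / 42 / 102 figures in the summary (the class name is hypothetical):

```java
// Parameter count of a fully connected layer: nIn * nOut weights (W) plus nOut biases (b)
class ParamCount {

    static int denseParams(int nIn, int nOut) {
        return nIn * nOut + nOut;
    }

    public static void main(String[] args) {
        int layer0 = denseParams(2, 20); // DenseLayer: numInputs -> numHiddenNodes
        int layer1 = denseParams(20, 2); // OutputLayer: numHiddenNodes -> numOutputs
        System.out.println(layer0 + " + " + layer1 + " = " + (layer0 + layer1)); // prints "60 + 42 = 102"
    }
}
```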

```java
System.out.println(model.paramTable());
```

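prints the weights (W) and biases (b) of each layer separately, which gives the full breakdown of the parameters shown below. The first layer has 2×20 = 40 weights plus 20 biases, 60 in total; the second layer has 20×2 = 40 weights plus 2 biases, 42 in total; 102 parameters altogether.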

```
{0_W=[[ 0.5051, -0.5878, -0.2506, 0.1828, -0.1159, -0.0039, -0.2705, -0.0151, -0.2440, 0.3838, 0.5253, -0.1041, -0.3511, 0.4551, -0.3496, 0.0187, 0.5229, -0.4564, 0.0799, -0.0933],
      [ -0.1064, 0.2147, 0.1694, 0.3966, -1.0667, 0.0414, 0.0965, -0.0183, 0.3099, -0.1108, 0.6209, -0.1726, -0.1890, 0.1900, 0.0644, 0.0306, -0.0608, 0.1865, 0.1576, 0.4160]],
 0_b=[[ 0.1516, -0.4136, 0.2882, 0.1872, -0.2897, -0.0136, -0.1874, 0.5774, -0.1431, -0.2045, -0.3704, 0.2499, 0.0608, -0.1484, 0.2614, 0.9763, -0.0547, -0.1443, 0.1029, -0.0322]],
 1_W=[[ 0.0677, -0.1594],
      [ -0.7437, 0.4310],
      [ 0.5813, 0.0827],
      [ -0.1314, -0.4691],
      [ -0.3347, 0.5227],
      [ -0.2371, -0.1974],
      [ -0.3985, 0.1377],
      [ 0.4483, -0.5734],
      [ -0.4175, 0.0795],
      [ -0.1150, 0.4972],
      [ -0.2141, 0.6973],
      [ 0.2760, -0.2429],
      [ 0.1480, 0.0846],
      [ -0.1473, 0.2800],
      [ 0.1253, -0.3221],
      [ 0.6902, -0.9952],
      [ -0.1761, 0.0485],
      [ -0.5307, -0.0923],
      [ 0.2276, 0.0424],
      [ -0.0136, 0.1793]],
 1_b=[[ 1.0446, -1.0446]]}
```
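Comparing this table with the `model.params()` output shows how the flat vector is laid out: the 102 values are `0_W`, `0_b`, `1_W`, `1_b` in that order, and each weight matrix appears column by column. For example, `params()` starts 0.5051, -0.1064, -0.5878, 0.2147, while row 0 of `0_W` starts 0.5051, -0.5878 and row 1 starts -0.1064, 0.2147; likewise the values after the 20 biases run down the first column of `1_W` (0.0677, -0.7437, 0.5813, ...). The sketch below reads a matrix back out of such a flat array under that column-major assumption, inferred from the printouts rather than from the DL4J documentation; the helper names are hypothetical.

```java
import java.util.Arrays;

// Hypothetical helpers for reading layer matrices out of a flat parameter vector,
// assuming the column-major ('f') layout suggested by the printed values above.
class ParamUnflatten {

    // Read a {rows, cols} matrix starting at `offset`; in column-major order the row index varies fastest
    static double[][] unflattenF(double[] flat, int offset, int rows, int cols) {
        double[][] m = new double[rows][cols];
        for (int k = 0; k < rows * cols; k++) {
            m[k % rows][k / rows] = flat[offset + k];
        }
        return m;
    }

    // Start offset of each block when the blocks are concatenated in order; the last entry is the total size
    static int[] offsets(int[][] shapes) {
        int[] off = new int[shapes.length + 1];
        for (int i = 0; i < shapes.length; i++) {
            off[i + 1] = off[i] + shapes[i][0] * shapes[i][1];
        }
        return off;
    }

    public static void main(String[] args) {
        int[][] shapes = {{2, 20}, {1, 20}, {20, 2}, {1, 2}}; // 0_W, 0_b, 1_W, 1_b
        System.out.println(Arrays.toString(offsets(shapes))); // prints [0, 40, 60, 100, 102]

        // First four values of model.params() above, read as the first two columns of 0_W
        double[] head = {0.5051, -0.1064, -0.5878, 0.2147};
        System.out.println(Arrays.deepToString(unflattenF(head, 0, 2, 2)));
        // prints [[0.5051, -0.5878], [-0.1064, 0.2147]]
    }
}
```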