BPA: Poisson GLM, Chapter 4, Part 2

Study notes on BPA: Bayesian Population Analysis Using WinBUGS by Marc Kéry & Michael Schaub (Japanese edition: BUGSで学ぶ階層モデリング).

ーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーーー

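The counts $C_{i,j}$ obtained in year $i$ at site $j$ are modeled with a cubic polynomial function of year. We assume a Poisson distribution with expected count $\lambda_{i,j}$:

$$C_{i,j} \sim \mathrm{Poisson}(\lambda_{i,j})$$

Log link function:

$$\log(\lambda_{i,j}) = \alpha_j + \sum_{p=1}^{3} \beta_p X_i^p + \varepsilon_i$$

$$\alpha_j \sim \mathrm{Normal}(\mu, \sigma_\alpha^2)$$

$$\varepsilon_i \sim \mathrm{Normal}(0, \sigma_\varepsilon^2)$$

Alternatively, the mean site effect $\mu$ can be written as the overall intercept. In that case the site effects follow a normal distribution with mean 0 rather than mean $\mu$:

$$\log(\lambda_{i,j}) = \mu + \sum_{p=1}^{3} \beta_p X_i^p + \alpha_j + \varepsilon_i$$

$$\alpha_j \sim \mathrm{Normal}(0, \sigma_\alpha^2)$$

$$\varepsilon_i \sim \mathrm{Normal}(0, \sigma_\varepsilon^2)$$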
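The two ways of writing the site effects describe the same model. A minimal R sketch, using made-up values for μ and σα (assumptions for illustration, not estimates from the book), shows that adding μ to zero-mean site effects reproduces site effects drawn around μ:

```r
# Illustrative values only (assumed, not taken from the analysis)
mu <- 4.2          # overall intercept on the log scale
sd.alpha <- 0.5    # SD of the site effects
nsite <- 5

set.seed(1)
z <- rnorm(nsite)                  # standard normal draws shared by both forms

alpha.form1 <- mu + sd.alpha * z   # alpha_j ~ Normal(mu, sd.alpha^2)
alpha.form2 <- sd.alpha * z        # alpha_j ~ Normal(0, sd.alpha^2)

# Site-level intercept entering the linear predictor under each form
intercept1 <- alpha.form1
intercept2 <- mu + alpha.form2
all.equal(intercept1, intercept2)
```

Which form to use is mostly a matter of convenience: the second makes the overall intercept an explicit parameter.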


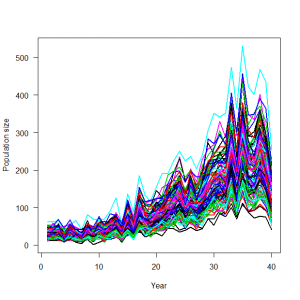

    > # 4.3. Mixed models with random effects for variability among groups (site and year effects)
    > # 4.3.1. Generation and analysis of simulated data
    > data.fn <- function(nsite = 5, nyear = 40, alpha = 4.18456, beta1 = 1.90672, beta2 = 0.10852, beta3 = -1.17121, sd.site = 0.5, sd.year = 0.2){
    +    # nsite: Number of populations
    +    # nyear: Number of years
    +    # alpha, beta1, beta2, beta3: cubic polynomial coefficients of year
    +    # sd.site: standard deviation of the normal distribution assumed for the population intercepts alpha
    +    # sd.year: standard deviation of the normal distribution assumed for the year effects
    +    # We standardize the year covariate so that it runs from about -1 to 1
    +
    +    # Generate data structure to hold counts and log(lambda)
    +    C <- log.expected.count <- array(NA, dim = c(nyear, nsite))
    +
    +    # Generate covariate values
    +    year <- 1:nyear
    +    yr <- (year-20)/20   # Standardize
    +    site <- 1:nsite
    +
    +    # Draw two sets of random effects from their respective distribution
    +    alpha.site <- rnorm(n = nsite, mean = alpha, sd = sd.site)
    +    eps.year <- rnorm(n = nyear, mean = 0, sd = sd.year)
    +
    +    # Loop over populations
    +    for (j in 1:nsite){
    +       # Signal (plus first level of noise): build up systematic part of the GLM including random site and year effects
    +       log.expected.count[,j] <- alpha.site[j] + beta1 * yr + beta2 * yr^2 + beta3 * yr^3 + eps.year
    +       expected.count <- exp(log.expected.count[,j])
    +
    +       # Second level of noise: generate random part of the GLM: Poisson noise around expected counts
    +       C[,j] <- rpois(n = nyear, lambda = expected.count)
    +    }
    +
    +    # Plot simulated data
    +    matplot(year, C, type = "l", lty = 1, lwd = 2, main = "", las = 1, ylab = "Population size", xlab = "Year")
    +
    +    return(list(nsite = nsite, nyear = nyear, alpha.site = alpha.site, beta1 = beta1, beta2 = beta2, beta3 = beta3, year = year, sd.site = sd.site, sd.year = sd.year, expected.count = expected.count, C = C))
    + }
    > data <- data.fn(nsite = 100, nyear = 40, sd.site = 0.3, sd.year = 0.2)

Fitting the model with WinBUGS:


    > # Specify model in BUGS language
    > sink("GLMM_Poisson.txt")
    > cat("
    + model {
    +
    + # Priors
    + for (j in 1:nsite){
    +    alpha[j] ~ dnorm(mu, tau.alpha)       # 4. Random site effects
    + }
    + mu ~ dnorm(0, 0.01)                      # Hyperparameter 1
    + tau.alpha <- 1 / (sd.alpha*sd.alpha)     # Hyperparameter 2
    + sd.alpha ~ dunif(0, 2)
    + for (p in 1:3){
    +    beta[p] ~ dnorm(0, 0.01)
    + }
    +
    + tau.year <- 1 / (sd.year*sd.year)
    + sd.year ~ dunif(0, 1)                    # Hyperparameter 3
    +
    + # Likelihood
    + for (i in 1:nyear){
    +    eps[i] ~ dnorm(0, tau.year)           # 4. Random year effects
    +    for (j in 1:nsite){
    +       C[i,j] ~ dpois(lambda[i,j])         # 1. Distribution for random part
    +       lambda[i,j] <- exp(log.lambda[i,j]) # 2. Link function
    +       log.lambda[i,j] <- alpha[j] + beta[1] * year[i] + beta[2] * pow(year[i],2) + beta[3] * pow(year[i],3) + eps[i] # 3. Linear predictor including random site and random year effects
    +    } #j
    + } #i
    + }
    + ",fill = TRUE)
    > sink()
    >
    > # Bundle data
    > win.data <- list(C = data$C, nsite = ncol(data$C), nyear = nrow(data$C), year = (data$year-20) / 20) # Note year standardized
    >
    > # Initial values
    > inits <- function() list(mu = runif(1, 0, 2), alpha = runif(data$nsite, -1, 1), beta = runif(3, -1, 1), sd.alpha = runif(1, 0, 0.1), sd.year = runif(1, 0, 0.1))
    >
    > # Parameters monitored (may want to add "lambda")
    > params <- c("mu", "alpha", "beta", "sd.alpha", "sd.year")
    >
    > # MCMC settings (may have to adapt)
    > ni <- 100000
    > nt <- 50
    > nb <- 50000
    > nc <- 3
    >
    > # Call WinBUGS from R (BRT 98 min)
    > out <- bugs(win.data, inits, params, "GLMM_Poisson.txt", n.chains = nc,
    + n.thin = nt, n.iter = ni, n.burnin = nb, debug = TRUE, bugs.directory = bugs.dir, working.directory = getwd())
    Error in bugs.run(n.burnin, bugs.directory, WINE = WINE, useWINE = useWINE, :
      Look at the log file and try again with 'debug=TRUE' to figure out what went wrong within Bugs.
    >
    > # Summarize posteriors
    > print(out, dig = 3)

The run hung partway through!

4.3.2 Analysis of a real data set

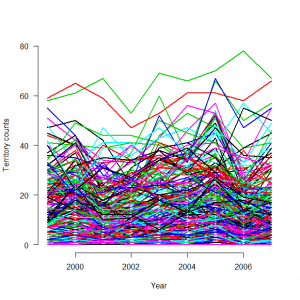

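This section analyzes data from the Swiss breeding bird survey MHB, which has run since 1999.

The data are counts of estimated territories per site and year: a subset of the data collected at 235 sites over 9 years. We model the yearly number of coal tit territories. The aim of the analysis is to assess the trend (increase or decrease) in the coal tit population; the counts are cross-classified by site, year, and observer.

Comparing fixed-effects and random-effects models deepens our understanding of random effects.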

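$$C_{i,j} \sim \mathrm{Poisson}(\lambda_{i,j}) \qquad \text{(distribution of the data)}$$

$$\log(\lambda_{i,j}) = \alpha_j + \beta_1 \cdot \mathrm{year}_i + \beta_2 F_{i,j} + \delta_i + \gamma_{k(i,j)} \qquad \text{(link function and linear predictor)}$$

$$\alpha_j \sim \mathrm{Normal}(\mu, \sigma_\alpha^2) \qquad \text{(random site effects)}$$

$$\delta_i \sim \mathrm{Normal}(0, \sigma_\delta^2) \qquad \text{(random year effects)}$$

$$\gamma_{k(i,j)} \sim \mathrm{Normal}(0, \sigma_\gamma^2) \qquad \text{(random observer effects)}$$

$F_{i,j}$: indicator variable marking that the observer surveyed the site for the first time. The subscript $k(i,j)$ is the observer who surveyed site $j$ in year $i$.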
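To make the indexing concrete, here is a small R sketch that builds the linear predictor for toy data. All dimensions, parameter values, and object names (`obs.id`, `first.toy`, `C.sim`) are assumptions chosen for illustration; none come from the MHB data.

```r
set.seed(42)
nsite <- 4; nyear <- 3; nobs <- 5        # toy dimensions (assumed)
mu <- 2; beta1 <- 0.1; beta2 <- -0.3     # assumed values, illustration only

alpha <- rnorm(nsite, mu, 0.5)           # random site effects
delta <- rnorm(nyear, 0, 0.2)            # random year effects
gamma <- rnorm(nobs, 0, 0.3)             # random observer effects

year <- (1:nyear) - mean(1:nyear)        # centered year covariate
# obs.id[i, j] plays the role of k(i,j): which observer surveyed site j in year i
obs.id <- matrix(sample(nobs, nyear * nsite, replace = TRUE), nyear, nsite)
# first.toy[i, j] plays the role of F[i,j]: 1 if it was the observer's first visit
first.toy <- matrix(rbinom(nyear * nsite, 1, 0.2), nyear, nsite)

log.lambda <- matrix(NA, nyear, nsite)
for (i in 1:nyear) {
  for (j in 1:nsite) {
    log.lambda[i, j] <- alpha[j] + beta1 * year[i] + beta2 * first.toy[i, j] +
      delta[i] + gamma[obs.id[i, j]]
  }
}

# Poisson counts around the expected values, stored as year x site
C.sim <- matrix(rpois(nyear * nsite, exp(log.lambda)), nyear, nsite)
```

The nested index `gamma[obs.id[i, j]]` is the same device the BUGS language uses for observer effects: the observer matrix is data, and it selects which random effect applies to each count.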


    > # 4.3.2. Analysis of real data set
    > # Read in the tit data and have a look at them
    > tits <- read.table("tits.txt", header = TRUE)
    > str(tits)
    'data.frame':   235 obs. of  31 variables:
     $ site     : Factor w/ 235 levels "100.1","102.1",..: 36 194 42 37 38 166 39 172 40 41 ...
     $ spec     : Factor w/ 1 level "Coaltit": 1 1 1 1 1 1 1 1 1 1 ...
     $ elevation: int  420 450 1050 920 1110 510 800 630 590 530 ...   # elevation
     $ forest   : int  3 21 32 9 35 2 6 60 5 13 ...                    # forest cover
     $ y1999    : int  0 0 NA 11 27 NA 0 14 2 7 ...                    # territory counts
     $ y2000    : int  1 0 7 9 24 0 6 15 3 5 ...
     $ y2001    : int  2 0 15 5 21 2 NA 17 2 2 ...
     $ y2002    : int  2 0 19 9 19 1 NA 6 1 3 ...
     $ y2003    : int  0 3 12 8 22 0 1 20 NA 3 ...
     $ y2004    : int  0 2 11 7 31 0 1 16 0 9 ...
     $ y2005    : int  2 0 12 9 28 0 7 24 1 10 ...
     $ y2006    : int  1 1 11 6 9 0 1 15 0 9 ...
     $ y2007    : int  0 0 7 2 18 0 0 9 1 4 ...
     $ obs1999  : int  1576 NA NA 1576 1976 NA 3046 3046 245 2095 ...  # observer codes
     $ obs2000  : int  1576 NA 2064 2031 1976 2158 2158 3046 245 2095 ...
     $ obs2001  : int  1576 NA 2064 2031 1976 3288 NA 3046 2064 2095 ...
     $ obs2002  : int  1576 NA 2064 2031 1976 3288 NA 3046 2064 2095 ...
     $ obs2003  : int  1576 NA 3299 2031 1976 3304 2148 2148 NA 2095 ...
     $ obs2004  : int  1576 NA 3299 2031 1976 3304 1971 2148 1699 2095 ...
     $ obs2005  : int  1576 NA 3299 2031 1976 3304 2049 2148 1699 2095 ...
     $ obs2006  : int  1576 NA 3299 2031 1976 3304 1699 2148 2261 2095 ...
     $ obs2007  : int  2125 NA 3299 169 1976 3304 1699 2148 2261 2095 ...
     $ first1999: int  1 NA NA 1 1 NA 1 1 1 1 ...                      # first-survey indicator variables
     $ first2000: int  0 NA 1 1 0 1 1 0 0 0 ...
     $ first2001: int  0 NA 0 0 0 1 NA 0 1 0 ...
     $ first2002: int  0 NA 0 0 0 0 NA 0 0 0 ...
     $ first2003: int  0 NA 1 0 0 1 1 1 NA 0 ...
     $ first2004: int  0 NA 0 0 0 0 1 0 1 0 ...
     $ first2005: int  0 NA 0 0 0 0 1 0 0 0 ...
     $ first2006: int  0 NA 0 0 0 0 1 0 1 0 ...
     $ first2007: int  1 NA 0 1 0 0 0 0 0 0 ...
    > C <- as.matrix(tits[5:13])
    > obs <- as.matrix(tits[14:22])
    > first <- as.matrix(tits[23:31])
    > matplot(1999:2007, t(C), type = "l", lty = 1, lwd = 2, main = "", las = 1, ylab = "Territory counts", xlab = "Year", ylim = c(0, 80), frame = FALSE)

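The matrix C, created with as.matrix(tits[5:13]), holds columns 5 to 13 of the data frame above: the yearly territory counts, with one row per site and one column per year.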
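Single-bracket indexing on a data frame selects columns, not rows, which is why `tits[5:13]` returns the nine count columns. A toy illustration (the data frame `toy` and its values are made up for this sketch):

```r
# Hypothetical miniature of the tits data frame: 2 sites, 3 years of counts
toy <- data.frame(site = c("a", "b"), spec = "Coaltit",
                  elevation = c(420, 450), forest = c(3, 21),
                  y1999 = c(0, 0), y2000 = c(1, 0), y2001 = c(2, 0))

C.toy <- as.matrix(toy[5:7])   # columns 5 to 7: the yearly counts
dim(C.toy)                     # rows are sites, columns are years
colnames(C.toy)
```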


    > C
           y1999 y2000 y2001 y2002 y2003 y2004 y2005 y2006 y2007
      [1,]     0     1     2     2     0     0     2     1     0
      [2,]     0     0     0     0     3     2     0     1     0
      [3,]    NA     7    15    19    12    11    12    11     7
      [4,]    11     9     5     9     8     7     9     6     2
      [5,]    27    24    21    19    22    31    28     9    18
    ..........
    [111,]    14    14    11    10    14    14    18    13    10
     [ reached getOption("max.print") -- omitted 124 rows ]
    > length(C)
    [1] 2115    # 2115 cells in total (235 sites x 9 years, NAs included)
    > length(C[,1])
    [1] 235     # 235 sites
    > length(C[1,])
    [1] 9       # 9 years of data

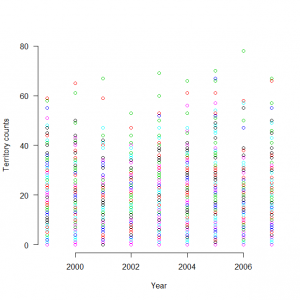

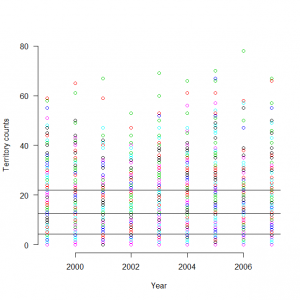

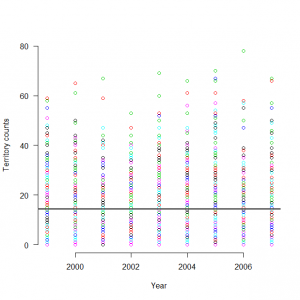

Plotted as points, the data look like this:

    > matplot(1999:2007, t(C), type = "p", pch = 1, lty = 1, lwd = 1, main = "", las = 1, ylab = "Territory counts", xlab = "Year", ylim = c(0, 80), frame = FALSE)


    > table(obs)    # observers
    obs
     105  109  144  154  169  170  174  189  193  200  221  245  278  297  961 1025
       3    9    2    5    1    4    9    7    9    5    1    6    7    7    7    4
    1028 1047 1048 1052 1107 1112 1118 1119 1189 1253 1255 1256 1259 1276 1281 1283
       9    9    1    9    6    4    1    9    4    2    6    2    9    2    5    1
    1332 1341 1356 1364 1373 1379 1383 1385 1386 1397 1414 1419 1439 1452 1455 1458
       3    5    2    6    2    1    9    3    1    6    2    9    9    7   25   19
    1463 1476 1489 1513 1515 1535 1545 1550 1553 1554 1557 1559 1576 1598 1612 1638
      10    1    3   22   19    9    7    9    4    9    8    9   13    3    9    7
    1644 1646 1650 1651 1662 1674 1678 1679 1681 1682 1690 1699 1702 1712 1715 1722
       9    1    3   10    1    8    9    4    5    8    2    4    3    1    4    1
    1724 1728 1730 1742 1745 1750 1756 1758 1760 1771 1792 1795 1805 1809 1820 1828
       7   18    9    9   10    9    2    9    2    1   10    1    4    5    9    7
    1830 1834 1837 1845 1856 1863 1876 1880 1889 1894 1895 1899 1902 1910 1933 1934
       1   42    8    1   16    9    8    9    9    1    9    6    9    5    8    8
    1935 1943 1947 1961 1965 1966 1971 1975 1976 1979 1984 1989 1991 1992 1994 2007
       3    9    2   15    3    6    3   11    9    9    2    7    4    6    9    9
    2010 2011 2018 2029 2031 2037 2040 2043 2048 2049 2050 2055 2058 2060 2064 2066
       2    8   14    9   15    5    1    6    9    4   17    3    9    7    5   11
    2081 2085 2087 2095 2096 2098 2100 2101 2115 2117 2120 2125 2135 2136 2139 2148
       8    8    8   32    1    8    9    9    9   15    2    2    9    8    3   15
    2153 2158 2163 2165 2168 2175 2177 2197 2210 2214 2217 2226 2229 2233 2234 2245
      10    2    1    1    2    3    9    5    9    9    9    6    9    7    4    7
    2246 2254 2261 2266 2270 2335 2337 2343 2345 2347 2353 2375 2380 2381 2387 2394
       1    2    2    6    4    6    1    1    5    1    4    5    4    1    5    5
    2397 2400 2415 2420 2422 2423 2440 2444 2449 2469 2485 2493 2507 2509 2518 2521
       2    5    5    1    1    1    6    5    6    5    4    8    1    1    3    3
    2531 2610 2633 2655 2687 3007 3010 3015 3022 3032 3034 3038 3046 3050 3073 3082
       1    1    3    2    1    9    9    1    1    9    9   18    5    9    9    9
    3091 3092 3094 3136 3161 3176 3196 3202 3213 3256 3257 3258 3259 3260 3261 3263
       9    5    9   16    9    7    9   10    9   13    3    3    9    4    5    9
    3264 3265 3268 3271 3272 3273 3275 3276 3279 3285 3288 3291 3294 3297 3298 3299
       7   16    7    3    7    7    2    3    1    6    5    2    1   11    4    5
    3301 3302 3303 3304 3305 3307 3309 3310 3313 3316 3318 3331 3333 3334 3338
      11    1    5    5    6    9    4    6    2    3    2    1    1    1    1
    > length(table(obs))    # number of observers
    [1] 271
    >
    > apply(first, 2, sum, na.rm = TRUE)    # the number of years and sites varies among observers
    first1999 first2000 first2001 first2002 first2003 first2004 first2005 first2006
          176        37        22        25        40        31        25        33
    first2007
           24
    > # Renumber the observer IDs as consecutive integers
    > a <- as.numeric(levels(factor(obs)))   # All the levels, numeric
    > newobs <- obs                          # Gets ObsID from 1:271
    > for (j in 1:length(a)){newobs[which(obs==a[j])] <- j }
    > table(newobs)
    newobs
      1   2   3   4   5   6   7   8   9  10  11  12  13  14  15  16  17  18  19  20
      3   9   2   5   1   4   9   7   9   5   1   6   7   7   7   4   9   9   1   9
     21  22  23  24  25  26  27  28  29  30  31  32  33  34  35  36  37  38  39  40
      6   4   1   9   4   2   6   2   9   2   5   1   3   5   2   6   2   1   9   3
     41  42  43  44  45  46  47  48  49  50  51  52  53  54  55  56  57  58  59  60
      1   6   2   9   9   7  25  19  10   1   3  22  19   9   7   9   4   9   8   9
     61  62  63  64  65  66  67  68  69  70  71  72  73  74  75  76  77  78  79  80
     13   3   9   7   9   1   3  10   1   8   9   4   5   8   2   4   3   1   4   1
     81  82  83  84  85  86  87  88  89  90  91  92  93  94  95  96  97  98  99 100
      7  18   9   9  10   9   2   9   2   1  10   1   4   5   9   7   1  42   8   1
    101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120
     16   9   8   9   9   1   9   6   9   5   8   8   3   9   2  15   3   6   3  11
    121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140
      9   9   2   7   4   6   9   9   2   8  14   9  15   5   1   6   9   4  17   3
    141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160
      9   7   5  11   8   8   8  32   1   8   9   9   9  15   2   2   9   8   3  15
    161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180
     10   2   1   1   2   3   9   5   9   9   9   6   9   7   4   7   1   2   2   6
    181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200
      4   6   1   1   5   1   4   5   4   1   5   5   2   5   5   1   1   1   6   5
    201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220
      6   5   4   8   1   1   3   3   1   1   3   2   1   9   9   1   1   9   9  18
    221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240
      5   9   9   9   9   5   9  16   9   7   9  10   9  13   3   3   9   4   5   9
    241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260
      7  16   7   3   7   7   2   3   1   6   5   2   1  11   4   5  11   1   5   5
    261 262 263 264 265 266 267 268 269 270 271
      6   9   4   6   2   3   2   1   1   1   1
    > # Assign a numeric code to the missing values
    > newobs[is.na(newobs)] <- 272
    > table(newobs)
    newobs
    # (IDs 1 to 260: counts identical to the previous table)
    261 262 263 264 265 266 267 268 269 270 271 272
      6   9   4   6   2   3   2   1   1   1   1 414
    > first[is.na(first)] <- 0
    > table(first)
    first
       0    1
    1702  413
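The renumbering loop above can also be written without a loop. The following sketch uses `match()` on a toy observer matrix; the names `obs.toy` and `new.toy` and the values in them are hypothetical, and this is offered as an alternative under the assumption that IDs should be numbered in increasing order of the original code, as the loop does.

```r
# Toy observer matrix with one missing entry (illustration only)
obs.toy <- matrix(c(1576, NA, 2064, 1576, 245, 2064), nrow = 2)

new.toy <- obs.toy
# Position of each code among the sorted unique codes gives IDs 1..K; NA stays NA
new.toy[] <- match(obs.toy, sort(unique(as.vector(obs.toy))))
# Missing observers get one extra code, K + 1
new.toy[is.na(new.toy)] <- max(new.toy, na.rm = TRUE) + 1
new.toy
```

Here 245, 1576 and 2064 become 1, 2 and 3, and the missing entry becomes 4, mirroring how the 271 observers plus the missing category end up as codes 1 to 272.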

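Below, we start from the simplest model, which contains only an intercept, and make it gradually more complex.

First, the null model: an intercept-only model.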


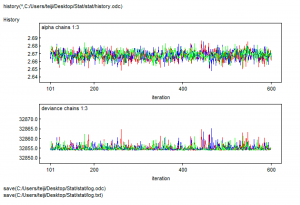

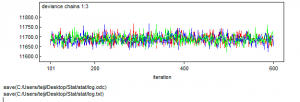

    > # (a) Null or intercept-only model
    > # Specify model in BUGS language
    > sink("GLM0.txt")
    > cat("
    + model {
    +
    + # Prior
    + alpha ~ dnorm(0, 0.01)    # log(mean count)
    +
    + # Likelihood
    + for (i in 1:nyear){
    +    for (j in 1:nsite){
    +       C[i,j] ~ dpois(lambda[i,j])
    +       lambda[i,j] <- exp(log.lambda[i,j])
    +       log.lambda[i,j] <- alpha
    +    } #j
    + } #i
    + }
    + ",fill = TRUE)
    > sink()
    >
    > # Bundle data
    > win.data <- list(C = t(C), nsite = nrow(C), nyear = ncol(C))
    >
    > # Initial values
    > inits <- function() list(alpha = runif(1, -10, 10))
    >
    > # Parameters monitored
    > params <- c("alpha")
    >
    > # MCMC settings
    > ni <- 1200
    > nt <- 2
    > nb <- 200
    > nc <- 3
    >
    > # Call WinBUGS from R (BRT < 1 min)
    > out0 <- bugs(win.data, inits, params, "GLM0.txt", n.chains = nc,
    + n.thin = nt, n.iter = ni, n.burnin = nb, debug = TRUE, bugs.directory = bugs.dir, working.directory = getwd())
    >
    > # Summarize posteriors
    > print(out0, dig = 3)
    Inference for Bugs model at "GLM0.txt", fit using WinBUGS,
     3 chains, each with 1200 iterations (first 200 discarded), n.thin = 2
     n.sims = 1500 iterations saved
                  mean    sd      2.5%       25%       50%       75%     97.5%  Rhat n.eff
    alpha        2.668 0.006     2.656     2.664     2.668     2.672     2.679 1.002  1100
    deviance 32853.287 4.713 32850.000 32850.000 32850.000 32860.000 32860.000 1.001  1500

    For each parameter, n.eff is a crude measure of effective sample size,
    and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

    DIC info (using the rule, pD = Dbar-Dhat)
    pD = 1.1 and DIC = 32856.1
    DIC is an estimate of expected predictive error (lower deviance is better).

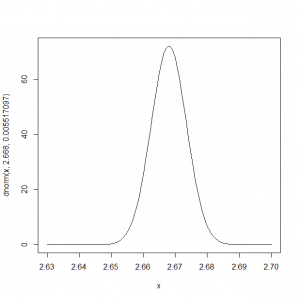

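Posterior mean of α: 2.668 ± 0.006 (posterior mean ± posterior SD).

Note that 32853.287 ± 4.713 is the posterior mean ± SD of the deviance, not the variance of α.

Standard deviation (sd) of α: 0.005517097 ± 0.460629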

                  mean    sd      2.5%       25%       50%       75%     97.5%  Rhat
    alpha        2.668 0.006     2.656     2.664     2.668     2.672     2.679 1.002
    deviance 32853.287 4.713 32850.000 32850.000 32850.000 32860.000 32860.000 1.001

Approximating the posterior of α by a normal distribution and plotting it:

    > curve(dnorm(x, 2.668, 0.005517097), 2.63, 2.7)

Under this model, the mean observed density of coal tits is exp(2.67) = 14.4.

α 2.5% quantile = 2.656, exp(2.656) = 14.23922

α 50% quantile = 2.668, exp(2.668) = 14.41112

α 97.5% quantile = 2.679, exp(2.679) = 14.57052
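Because exp() is monotone, posterior quantiles of α can be back-transformed directly to the count scale. The short sketch below repeats the arithmetic above; the vector name `alpha.q` is just a label chosen here, and the three values are the rounded quantiles from print(out0).

```r
# Rounded posterior quantiles of alpha from print(out0)
alpha.q <- c(lower = 2.656, median = 2.668, upper = 2.679)

# Back-transform from the log scale to expected counts
count.q <- exp(alpha.q)
round(count.q, 2)
```

The same shortcut does not hold for the posterior mean: the mean of exp(α) is in general not exp of the mean of α, although with a posterior as narrow as this one the difference is negligible.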

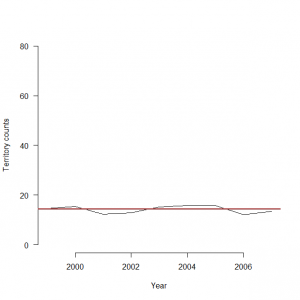

Yearly mean counts, together with the overall mean obtained here by the Bayesian analysis and its 95% credible interval:

    > y <- colMeans(C, na.rm = TRUE)   # mean count in each year
    > matplot(1999:2007, y, type = "l", lty = 1, lwd = 1, main = "", las = 1, ylab = "Territory counts", xlab = "Year", ylim = c(0, 80), frame = FALSE)
    > abline(h = 14.41112, col = "red")
    > abline(h = 14.23922, col = gray(0.5))
    > abline(h = 14.57052, col = gray(0.5))

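That is about all this model can tell us.

In other words, it simply replaces every count with the overall mean.

Next, we add fixed site effects. The data contain 235 sites.

For each site we define a parameter αj, the log of that site's Poisson mean, and give it its own normal prior. This should tell us what the count level is at each site.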
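As a rough cross-check of what the site effects mean, a maximum-likelihood Poisson GLM with one intercept per site returns the log of each site's mean count. The sketch below uses simulated toy data; the site means in `true.mean` and all object names are assumptions for illustration. With vague priors, the Bayesian estimates of αj would be expected to lie close to these values for sites with reasonably large counts.

```r
set.seed(7)
nsite <- 3; nyear <- 9
true.mean <- c(2, 10, 30)      # assumed site means, illustration only

toy <- data.frame(site = factor(rep(1:nsite, each = nyear)))
toy$count <- rpois(nrow(toy), true.mean[as.integer(toy$site)])

# "- 1" drops the common intercept, so each site gets its own alpha_j
fit <- glm(count ~ site - 1, family = poisson, data = toy)

site.log.mean <- log(tapply(toy$count, toy$site, mean))
cbind(glm = coef(fit), log.site.mean = as.vector(site.log.mean))
```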


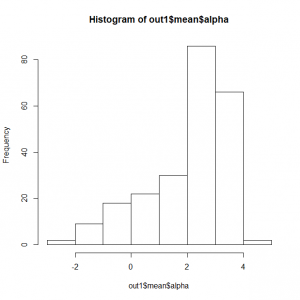

    > # (b) Fixed site effects
    > # Specify model in BUGS language
    > sink("GLM1.txt")
    > cat("
    + model {
    +
    + # Priors
    + for (j in 1:nsite){
    +    alpha[j] ~ dnorm(0, 0.01)    # Site effects
    + }
    +
    + # Likelihood
    + for (i in 1:nyear){
    +    for (j in 1:nsite){
    +       C[i,j] ~ dpois(lambda[i,j])
    +       lambda[i,j] <- exp(log.lambda[i,j])
    +       log.lambda[i,j] <- alpha[j]
    +    } #j
    + } #i
    + }
    + ",fill = TRUE)
    > sink()
    >
    > # Bundle data
    > win.data <- list(C = t(C), nsite = nrow(C), nyear = ncol(C))
    >
    > # Initial values (not required for all)
    > inits <- function() list(alpha = runif(235, -1, 1))
    >
    > # Parameters monitored
    > params <- c("alpha")
    >
    > # MCMC settings
    > ni <- 1200
    > nt <- 2
    > nb <- 200
    > nc <- 3
    >
    > # Call WinBUGS from R (BRT < 1 min)
    > out1 <- bugs(win.data, inits, params, "GLM1.txt", n.chains = nc,
    + n.thin = nt, n.iter = ni, n.burnin = nb, debug = TRUE, bugs.directory = bugs.dir, working.directory = getwd())
    >
    > # Summarize posteriors
    > print(out1, dig = 2)
    Inference for Bugs model at "GLM1.txt", fit using WinBUGS,
     3 chains, each with 1200 iterations (first 200 discarded), n.thin = 2
     n.sims = 1500 iterations saved
                mean    sd  2.5%   25%   50%   75% 97.5%  Rhat n.eff
    alpha[1]   -0.20  0.36 -0.97 -0.42 -0.18  0.05  0.44  1.00  1500
    alpha[2]   -0.50  0.42 -1.39 -0.76 -0.49 -0.21  0.24  1.00  1500
    alpha[3]    2.46  0.10  2.25  2.39  2.46  2.53  2.65  1.00   460
    alpha[4]    1.98  0.12  1.71  1.90  1.98  2.07  2.21  1.00  1500
    alpha[5]    3.09  0.07  2.94  3.05  3.09  3.14  3.23  1.00  1500
    alpha[6]   -1.19  0.64 -2.64 -1.57 -1.13 -0.75 -0.10  1.00  1000
    alpha[7]    0.78  0.25  0.26  0.62  0.79  0.96  1.23  1.00  1500
    alpha[8]    2.71  0.09  2.54  2.65  2.71  2.77  2.87  1.00  1500
    alpha[9]    0.17  0.32 -0.50 -0.02  0.18  0.40  0.74  1.00  1500
    alpha[10]   1.75  0.14  1.46  1.66  1.75  1.84  2.02  1.00  1500
    alpha[11]   3.36  0.06  3.23  3.32  3.36  3.40  3.47  1.00  1500
    alpha[12]   3.56  0.06  3.45  3.52  3.56  3.59  3.66  1.00  1000
    alpha[13]   2.75  0.08  2.60  2.69  2.75  2.80  2.90  1.00  1500
    alpha[14]   1.76  0.15  1.46  1.66  1.75  1.86  2.03  1.00  1500
    alpha[15]   1.54  0.17  1.19  1.43  1.54  1.65  1.86  1.00  1200
    alpha[16]   2.51  0.10  2.32  2.45  2.52  2.58  2.70  1.00  1500
    alpha[17]   2.41  0.10  2.23  2.35  2.41  2.48  2.60  1.00   860
    alpha[18]   2.71  0.09  2.54  2.66  2.72  2.77  2.88  1.00  1400
    alpha[19]   3.58  0.06  3.46  3.54  3.58  3.61  3.69  1.00   700
    alpha[20]   2.62  0.09  2.44  2.56  2.62  2.68  2.79  1.00  1200
    alpha[21]   2.34  0.10  2.12  2.27  2.34  2.41  2.54  1.00  1500
    alpha[22]   1.96  0.13  1.69  1.88  1.96  2.05  2.19  1.00  1200
    alpha[23]   2.53  0.09  2.35  2.47  2.54  2.60  2.71  1.00  1500
    alpha[24]  -0.95  0.51 -2.08 -1.28 -0.91 -0.58 -0.08  1.00  1500
    alpha[25]   2.77  0.08  2.60  2.71  2.77  2.82  2.92  1.00  1500
    alpha[26]   2.18  0.11  1.96  2.11  2.18  2.25  2.41  1.00  1500
    alpha[27]   2.95  0.08  2.80  2.90  2.96  3.00  3.10  1.00  1500
    alpha[28]   3.64  0.05  3.54  3.61  3.64  3.68  3.74  1.00  1500
    alpha[29]   1.55  0.15  1.24  1.45  1.55  1.66  1.84  1.00  1500
    alpha[30]   0.18  0.30 -0.44 -0.02  0.20  0.39  0.71  1.00  1400
    alpha[31]   1.55  0.16  1.25  1.45  1.56  1.67  1.84  1.00  1500
    alpha[32]   3.27  0.06  3.15  3.23  3.27  3.32  3.41  1.00  1500
    alpha[33]   4.18  0.04  4.10  4.15  4.18  4.21  4.26  1.00   570
    alpha[34]  -0.09  0.40 -0.94 -0.33 -0.07  0.18  0.60  1.00  1500
    alpha[35]   3.60  0.05  3.50  3.56  3.60  3.64  3.70  1.00  1000
    alpha[36]   2.68  0.10  2.48  2.61  2.68  2.74  2.87  1.00   700
    alpha[37]   3.47  0.06  3.35  3.43  3.47  3.50  3.58  1.00  1500
    alpha[38]  -0.82  0.53 -1.95 -1.15 -0.79 -0.45  0.11  1.00  1200
    alpha[39]   2.57  0.09  2.39  2.51  2.57  2.63  2.74  1.00  1300
    alpha[40]   3.09  0.07  2.94  3.04  3.09  3.13  3.22  1.00  1500
    alpha[41]   1.97  0.13  1.71  1.89  1.98  2.06  2.21  1.00  1500
    alpha[42]   1.91  0.14  1.62  1.82  1.91  2.00  2.17  1.00  1500
    alpha[43]   3.00  0.07  2.85  2.95  3.00  3.05  3.14  1.00   510
    alpha[44]   4.07  0.04  3.99  4.04  4.07  4.10  4.16  1.00  1500
    alpha[45]   3.38  0.06  3.26  3.34  3.38  3.42  3.51  1.00  1200
    alpha[46]   1.46  0.16  1.15  1.35  1.46  1.57  1.76  1.00   730
    alpha[47]  -1.78  0.79 -3.60 -2.22 -1.68 -1.24 -0.54  1.00  1500
    alpha[48]   3.26  0.06  3.14  3.22  3.26  3.31  3.39  1.00  1500
    alpha[49]   3.16  0.07  3.02  3.12  3.16  3.21  3.30  1.00  1400
    alpha[50]   3.00  0.07  2.86  2.96  3.00  3.06  3.15  1.00  1500
    alpha[51]   0.71  0.23  0.26  0.57  0.72  0.87  1.15  1.00  1500
    alpha[52]   2.82  0.08  2.66  2.77  2.82  2.88  2.97  1.00   920
    alpha[53]   2.66  0.09  2.49  2.60  2.66  2.72  2.82  1.01   860
    alpha[54]   2.46  0.10  2.26  2.40  2.47  2.53  2.64  1.00  1500
    alpha[55]   2.92  0.08  2.76  2.87  2.92  2.97  3.07  1.00  1500
    alpha[56]   0.66  0.25  0.16  0.50  0.65  0.84  1.13  1.00  1500
    alpha[57]   3.70  0.05  3.59  3.67  3.71  3.74  3.81  1.00  1500
    alpha[58]   3.12  0.07  2.98  3.07  3.12  3.16  3.25  1.00  1500
    alpha[59]   3.10  0.07  2.96  3.06  3.10  3.15  3.24  1.00  1500
    alpha[60]   1.11  0.20  0.69  0.98  1.12  1.25  1.50  1.00  1500
    alpha[61]   2.82  0.08  2.66  2.76  2.82  2.87  2.97  1.00  1100
    alpha[62]   2.25  0.11  2.02  2.18  2.25  2.33  2.46  1.00  1500
    alpha[63]   3.02  0.07  2.88  2.97  3.02  3.07  3.16  1.00   620
    alpha[64]   3.16  0.07  3.02  3.11  3.16  3.21  3.30  1.00  1400
    alpha[65]   3.46  0.06  3.34  3.42  3.46  3.50  3.57  1.00  1500
    alpha[66]   0.14  0.30 -0.51 -0.06  0.16  0.35  0.67  1.00  1500
    alpha[67]   3.52  0.06  3.41  3.48  3.52  3.56  3.62  1.00  1500
    alpha[68]   0.66  0.25  0.11  0.49  0.66  0.84  1.12  1.00  1500
    alpha[69]   2.65  0.09  2.48  2.59  2.65  2.71  2.82  1.00   480
    alpha[70]   0.48  0.25 -0.04  0.31  0.49  0.66  0.94  1.00  1500
    alpha[71]  -0.17  0.38 -0.96 -0.41 -0.16  0.10  0.50  1.00   860
    alpha[72]   3.53  0.06  3.42  3.49  3.53  3.56  3.64  1.00  1500
    alpha[73]   2.68  0.09  2.51  2.62  2.68  2.74  2.85  1.00  1500
    alpha[74]   2.81  0.08  2.65  2.76  2.81  2.86  2.96  1.00   990
    alpha[75]   0.40  0.27 -0.15  0.22  0.42  0.60  0.91  1.00   960
    alpha[76]   1.19  0.18  0.81  1.08  1.20  1.31  1.51  1.00   700
    alpha[77]   2.72  0.08  2.56  2.66  2.72  2.77  2.88  1.00  1500
    alpha[78]  -1.76  0.81 -3.61 -2.22 -1.66 -1.22 -0.41  1.00  1500
    alpha[79]   3.32  0.07  3.19  3.28  3.32  3.37  3.45  1.00  1500
    alpha[80]   2.09  0.12  1.86  2.01  2.09  2.17  2.31  1.00  1500
    alpha[81]   2.82  0.09  2.64  2.76  2.82  2.88  3.00  1.00  1500
    alpha[82]   1.90  0.13  1.64  1.82  1.90  1.99  2.15  1.00  1500
    alpha[83]   2.30  0.10  2.10  2.23  2.31  2.37  2.50  1.00  1500
    alpha[84]   3.36  0.06  3.24  3.32  3.36  3.40  3.48  1.00  1500
    alpha[85]   2.64  0.09  2.47  2.58  2.64  2.70  2.81  1.00  1500
    alpha[86]   3.08  0.07  2.94  3.04  3.08  3.13  3.22  1.00   970
    alpha[87]   3.49  0.06  3.36  3.45  3.49  3.52  3.60  1.00  1500
    alpha[88]   3.22  0.07  3.08  3.18  3.23  3.27  3.36  1.00  1500
    alpha[89]   3.16  0.07  3.02  3.11  3.16  3.21  3.30  1.00   580
    alpha[90]   2.64  0.09  2.47  2.58  2.65  2.70  2.80  1.00  1500
    alpha[91]   1.62  0.15  1.32  1.52  1.63  1.72  1.91  1.00   610
    alpha[92]   2.72  0.09  2.55  2.66  2.72  2.78  2.88  1.00  1500
    alpha[93]   2.54  0.09  2.36  2.48  2.55  2.61  2.73  1.01   220
    alpha[94]   2.39  0.10  2.19  2.33  2.39  2.46  2.58  1.00  1500
    alpha[95]   1.56  0.15  1.23  1.46  1.56  1.66  1.84  1.00  1500
    alpha[96]   3.59  0.06  3.48  3.55  3.58  3.62  3.69  1.00  1500
    alpha[97]   2.79  0.09  2.62  2.73  2.79  2.85  2.95  1.00   530
    alpha[98]   2.17  0.11  1.95  2.10  2.17  2.25  2.39  1.00  1500
    alpha[99]   3.66  0.05  3.55  3.62  3.66  3.70  3.76  1.00  1500
    alpha[100]  3.08  0.07  2.94  3.04  3.08  3.13  3.22  1.00  1500
    alpha[101]  2.46  0.10  2.27  2.39  2.46  2.53  2.65  1.00  1500
    alpha[102]  3.13  0.07  2.99  3.08  3.13  3.18  3.27  1.00  1500
    alpha[103] -1.75  0.80 -3.56 -2.21 -1.66 -1.18 -0.50  1.00  1500
    alpha[104]  3.42  0.06  3.30  3.38  3.42  3.46  3.54  1.00  1500
    alpha[105]  2.92  0.08  2.74  2.86  2.92  2.97  3.08  1.00   720
    alpha[106]  2.14  0.11  1.91  2.06  2.14  2.21  2.34  1.00  1500
    alpha[107] -0.57  0.47 -1.59 -0.85 -0.52 -0.25  0.24  1.00  1500
    alpha[108] -1.75  0.80 -3.58 -2.18 -1.64 -1.22 -0.52  1.00   990
    alpha[109]  1.69  0.14  1.41  1.59  1.69  1.78  1.96  1.00  1400
    alpha[110]  3.37  0.06  3.24  3.33  3.37  3.41  3.48  1.00  1500
    alpha[111]  2.57  0.09  2.39  2.51  2.58  2.64  2.75  1.00  1500
     [ reached getOption("max.print") -- omitted 125 rows ]

    For each parameter, n.eff is a crude measure of effective sample size,
    and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

    DIC info (using the rule, pD = Dbar-Dhat)
    pD = 232.5 and DIC = 11920.5
    DIC is an estimate of expected predictive error (lower deviance is better).
    >


    > length(out1$mean$alpha)
    [1] 235
    > out1$mean$alpha
      [1] -0.19527917 -0.48912494  2.45791133  1.97780133  3.09235333 -1.15894377
      [7]  0.78798295  2.70868200  0.17196934  1.74406667  3.36595733  3.55249133
     [13]  2.74636400  1.75312800  1.54987767  2.51234467  2.40703867  2.71388667
     [19]  3.57859933  2.62405533  2.33972800  1.95742933  2.53615067 -0.95608938
     [25]  2.76783933  2.17910133  2.95065333  3.64236467  1.55554467  0.16423942
     [31]  1.55465733  3.27887200  4.18009733 -0.05902481  3.59994067  2.67844733
     [37]  3.46848533 -0.83284968  2.57371267  3.08844733  1.97271467  1.91435000
     [43]  3.00528000  4.07026733  3.37754200  1.45107320 -1.78909766  3.26025267
     [49]  3.16248867  3.00359533  0.72428351  2.82479133  2.65983600  2.46499533
     [55]  2.91823000  0.66928351  3.70413067  3.11509333  3.09999733  1.11932487
     [61]  2.81700667  2.24907867  3.02236267  3.16256200  3.45480667  0.14867858
     [67]  3.51214933  0.65484273  2.64852333  0.47075776 -0.18812376  3.52427400
     [73]  2.68009733  2.81459000  0.41088601  1.18891460  2.71471600 -1.76380229
     [79]  3.31663400  2.08592800  2.82393133  1.90357800  2.30995200  3.36367400
     [85]  2.64317533  3.08230867  3.48455200  3.22587533  3.16227133  2.64747667
     [91]  1.61695067  2.71745000  2.54342333  2.39598333  1.55054200  3.59021467
     [97]  2.79181600  2.18191600  3.65971000  3.08732000  2.46452467  3.12824267
    [103] -1.80363373  3.41854200  2.91444600  2.14606467 -0.58298858 -1.77668209
    [109]  1.68543200  3.36283667  2.57163933  3.49446200  2.77768933  2.17957400
    [115]  1.92206400  3.14208667  0.72262968  2.14152800  2.42681667  2.68260200
    [121]  2.97478667  2.08560133  0.05622645 -0.48612395 -1.73437083  2.86563333
    [127]  2.55873267 -0.69022847  1.85452800  2.20556533  2.44742000 -0.03820907
    [133]  2.41235000  1.25114580 -0.94412843  2.64992800  3.68459267  2.54775200
    [139]  1.68669933  2.48121533  2.96464200  3.13013200 -0.34551059 -1.29797401
    [145]  2.53471800  3.18640067  2.28510467  2.13905867  3.34846667  1.39857153
    [151]  3.39205200  3.42567000  2.01387267  1.54476007  1.94181867  2.03038267
    [157] -0.70217280  0.99629993  2.95277800 -1.13985623  2.56659333  0.91929687
    [163]  2.55181000  3.25291800  2.86836133  0.82402970 -0.92691642  0.24594579
    [169]  1.28742787  3.05688400  2.00045467  3.02263800  3.24132867  0.40664842
    [175]  2.50465267  1.54863367 -1.27109263 -0.56773603  2.56470200 -0.05447273
    [181]  2.06804533  2.89965933  0.52431989  1.40314947  2.08260933  2.13887333
    [187]  3.41683600  3.23821533  3.33542600  2.15372933  3.29236667  2.68752133
    [193]  3.40467733  1.98144933  2.19265867  2.67951000  0.47078238  3.61845000
    [199] -2.65552493  3.23069467  2.81164067  0.15854382  2.80168933  1.74997667
    [205] -0.69290116  2.02956733  3.24836667  2.58358400  2.95483800  2.04001667
    [211]  3.53811467  2.53307667  3.05228467  0.15870975  2.52036333  3.09389800
    [217]  2.92771400  3.24369000  2.65656800  3.14299333  1.11815767 -2.73284019
    [223]  2.56156800  0.87175740  3.55017600  1.37653413  0.41103329 -0.68500978
    [229]  2.80794800  3.33762267  3.76101467  3.66903467  3.67718067  3.36329200
    [235]  3.58923667


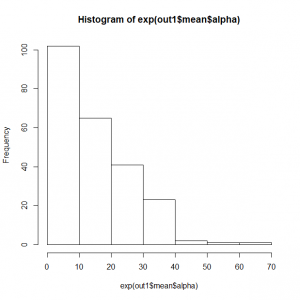

    > hist(out1$mean$alpha)

    > mean(out1$mean$alpha)
    [1] 2.030321
    > mean(exp(out1$mean$alpha))
    [1] 14.25242
    > hist(exp(out1$mean$alpha))
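The two summaries above differ a lot: exp(2.030321) is only about 7.6, while the mean of the back-transformed site effects is 14.25. Averaging on the log scale and then exponentiating gives a geometric mean, which sits below the arithmetic mean whenever the values vary. A small sketch with made-up site effects (the vector `a` is hypothetical):

```r
a <- c(-1.8, 0.5, 2.5, 3.6)   # made-up site effects on the log scale

geo.mean <- exp(mean(a))      # back-transformed mean of the logs
arith.mean <- mean(exp(a))    # mean of the back-transformed values
c(geometric = geo.mean, arithmetic = arith.mean)
```

For comparing with the intercept-only model, whose estimate was exp(2.668) = 14.4, the arithmetic version is the relevant one.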

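A survey report containing only this would probably be sent back for reanalysis.

It also needs to answer how the counts change from year to year.

Next, we add fixed year effects to the fixed site effects.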
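When a model already has one intercept per site, a full set of year effects is redundant: adding a constant to every year effect and subtracting it from every site effect leaves the fit unchanged. One level therefore has to be fixed, which is what `eps[1] <- 0` does in the BUGS model that follows. A maximum-likelihood analogue on toy data (all names and values here are assumptions for illustration) shows R's `glm()` applying the same kind of constraint by dropping the first year:

```r
set.seed(3)
# Toy design: 3 sites crossed with 4 years, counts drawn around a mean of 10
toy <- expand.grid(year = factor(1:4), site = factor(1:3))
toy$count <- rpois(nrow(toy), 10)

# "0 +" removes the common intercept, so every site gets its own intercept
fit <- glm(count ~ 0 + site + year, family = poisson, data = toy)

coef.names <- names(coef(fit))
coef.names   # three site intercepts, but year effects only for years 2 to 4
```

Year 1 acts as the reference level, so each remaining year effect is a difference from year 1 on the log scale.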


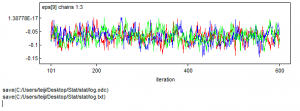

    > # (c) Fixed site and fixed year effects
    > # Specify model in BUGS language
    > sink("GLM2.txt")
    > cat("
    + model {
    + 
    + # Priors
    + for (j in 1:nsite){           # site effects
    +    alpha[j] ~ dnorm(0, 0.01)
    + }
    + 
    + for (i in 2:nyear){           # nyear-1 year effects
    +    eps[i] ~ dnorm(0, 0.01)
    + }
    + eps[1] <- 0                   # Aliased
    + 
    + # Likelihood
    + for (i in 1:nyear){
    +    for (j in 1:nsite){
    +       C[i,j] ~ dpois(lambda[i,j])
    +       lambda[i,j] <- exp(log.lambda[i,j])
    +       log.lambda[i,j] <- alpha[j] + eps[i]
    +    } #j
    + } #i
    + }
    + ",fill = TRUE)
    > sink()
    > 
    > # Bundle data
    > win.data <- list(C = t(C), nsite = nrow(C), nyear = ncol(C))
    > 
    > # Initial values
    > inits <- function() list(alpha = runif(235, -1, 1), eps = c(NA, runif(8, -1, 1)))
    > 
    > # Parameters monitored
    > params <- c("alpha", "eps")
    > 
    > # MCMC settings
    > ni <- 1200
    > nt <- 2
    > nb <- 200
    > nc <- 3
    > 
    > # Call WinBUGS from R (BRT < 1 min)
    > out2 <- bugs(win.data, inits, params, "GLM2.txt", n.chains = nc, 
    + n.thin = nt, n.iter = ni, n.burnin = nb, debug = TRUE, bugs.directory = bugs.dir, working.directory = getwd())
    > 
    > # Summarize posteriors
    > print(out2, dig = 2)
    Inference for Bugs model at "GLM2.txt", fit using WinBUGS,
     3 chains, each with 1200 iterations (first 200 discarded), n.thin = 2
     n.sims = 1500 iterations saved
                mean   sd  2.5%   25%   50%   75% 97.5% Rhat
    alpha[1]   -0.17 0.36 -0.92 -0.39 -0.15  0.08  0.49 1.00
    alpha[2]   -0.52 0.43 -1.43 -0.80 -0.48 -0.22  0.24 1.00
    alpha[3]    2.46 0.10  2.26  2.38  2.46  2.53  2.66 1.00
    alpha[4]    1.98 0.12  1.73  1.90  1.98  2.06  2.22 1.00
    alpha[5]    3.09 0.07  2.94  3.04  3.09  3.14  3.22 1.00
    alpha[6]   -1.18 0.65 -2.61 -1.54 -1.10 -0.74 -0.11 1.00
    alpha[7]    0.75 0.25  0.23  0.59  0.77  0.92  1.22 1.00
    alpha[8]    2.71 0.09  2.54  2.65  2.71  2.77  2.88 1.00
    alpha[9]    0.19 0.34 -0.52 -0.03  0.21  0.42  0.77 1.00
    alpha[10]   1.74 0.14  1.46  1.65  1.74  1.83  2.01 1.00
    alpha[11]   3.36 0.06  3.24  3.32  3.36  3.40  3.49 1.00
    alpha[12]   3.55 0.06  3.44  3.51  3.55  3.59  3.66 1.00
    alpha[13]   2.75 0.09  2.58  2.69  2.75  2.81  2.91 1.00
    alpha[14]   1.74 0.15  1.44  1.64  1.74  1.84  2.01 1.00
    alpha[15]   1.55 0.16  1.23  1.44  1.56  1.66  1.85 1.00
    alpha[16]   2.51 0.10  2.31  2.45  2.52  2.58  2.70 1.00
    alpha[17]   2.41 0.10  2.22  2.35  2.41  2.48  2.59 1.00
    alpha[18]   2.71 0.09  2.53  2.65  2.71  2.77  2.88 1.00
    alpha[19]   3.58 0.06  3.46  3.54  3.58  3.62  3.70 1.01
    alpha[20]   2.62 0.09  2.44  2.56  2.62  2.68  2.80 1.00
    alpha[21]   2.34 0.11  2.12  2.27  2.34  2.41  2.55 1.00
    alpha[22]   1.96 0.13  1.67  1.87  1.97  2.06  2.21 1.00
    alpha[23]   2.53 0.09  2.34  2.47  2.53  2.59  2.71 1.00
    alpha[24]  -0.94 0.53 -2.04 -1.26 -0.91 -0.57 -0.03 1.00
    alpha[25]   2.77 0.08  2.60  2.71  2.77  2.82  2.92 1.00
    alpha[26]   2.18 0.12  1.94  2.10  2.18  2.26  2.40 1.00
    alpha[27]   2.95 0.08  2.80  2.90  2.95  3.00  3.11 1.01
    alpha[28]   3.64 0.05  3.54  3.60  3.64  3.68  3.75 1.00
    alpha[29]   1.55 0.16  1.24  1.44  1.55  1.66  1.85 1.00
    alpha[30]   0.15 0.31 -0.52 -0.07  0.16  0.38  0.69 1.00
    alpha[31]   1.55 0.15  1.23  1.45  1.55  1.65  1.83 1.00
    alpha[32]   3.28 0.07  3.14  3.23  3.27  3.32  3.40 1.00
    alpha[33]   4.18 0.04  4.09  4.15  4.18  4.21  4.26 1.00
    alpha[34]  -0.13 0.39 -0.98 -0.37 -0.11  0.15  0.55 1.00
    alpha[35]   3.60 0.06  3.48  3.56  3.60  3.64  3.71 1.00
    alpha[36]   2.66 0.10  2.45  2.59  2.66  2.73  2.85 1.00
    alpha[37]   3.46 0.06  3.34  3.42  3.46  3.51  3.59 1.00
    alpha[38]  -0.82 0.55 -1.99 -1.15 -0.79 -0.44  0.11 1.00
    alpha[39]   2.57 0.09  2.38  2.50  2.57  2.63  2.74 1.00
    alpha[40]   3.09 0.07  2.93  3.04  3.09  3.13  3.22 1.00
    alpha[41]   1.97 0.13  1.70  1.88  1.97  2.06  2.21 1.00
    alpha[42]   1.90 0.14  1.63  1.80  1.89  1.98  2.16 1.00
    alpha[43]   3.00 0.08  2.85  2.95  3.00  3.06  3.14 1.00
    alpha[44]   4.07 0.05  3.97  4.04  4.07  4.10  4.16 1.00
    alpha[45]   3.37 0.06  3.25  3.33  3.38  3.42  3.50 1.00
    alpha[46]   1.46 0.16  1.13  1.35  1.46  1.58  1.74 1.00
    alpha[47]  -1.77 0.81 -3.61 -2.24 -1.68 -1.19 -0.47 1.00
    alpha[48]   3.26 0.07  3.13  3.21  3.26  3.30  3.39 1.00
    alpha[49]   3.16 0.07  3.02  3.12  3.16  3.21  3.29 1.00
    alpha[50]   3.00 0.08  2.85  2.96  3.01  3.06  3.15 1.00
    alpha[51]   0.72 0.23  0.24  0.58  0.73  0.88  1.16 1.00
    alpha[52]   2.82 0.08  2.66  2.77  2.82  2.88  2.97 1.00
    alpha[53]   2.65 0.09  2.48  2.59  2.65  2.71  2.82 1.00
    alpha[54]   2.46 0.10  2.25  2.39  2.46  2.53  2.66 1.00
    alpha[55]   2.92 0.08  2.76  2.87  2.92  2.97  3.08 1.00
    alpha[56]   0.69 0.25  0.18  0.52  0.70  0.86  1.13 1.00
    alpha[57]   3.70 0.06  3.59  3.67  3.70  3.74  3.81 1.00
    alpha[58]   3.11 0.07  2.97  3.07  3.11  3.16  3.25 1.00
    alpha[59]   3.10 0.07  2.96  3.05  3.10  3.15  3.24 1.00
    alpha[60]   1.11 0.20  0.73  0.98  1.12  1.26  1.50 1.01
    alpha[61]   2.81 0.08  2.65  2.76  2.82  2.87  2.97 1.00
    alpha[62]   2.25 0.11  2.02  2.17  2.25  2.32  2.46 1.00
    alpha[63]   3.02 0.07  2.88  2.97  3.02  3.07  3.16 1.00
    alpha[64]   3.16 0.07  3.02  3.11  3.16  3.21  3.30 1.00
    alpha[65]   3.46 0.06  3.34  3.42  3.46  3.50  3.57 1.00
    alpha[66]   0.15 0.31 -0.49 -0.04  0.16  0.36  0.74 1.00
    alpha[67]   3.51 0.06  3.39  3.47  3.52  3.56  3.63 1.00
    alpha[68]   0.66 0.25  0.12  0.50  0.66  0.82  1.13 1.00
    alpha[69]   2.65 0.09  2.47  2.59  2.66  2.71  2.83 1.00
    alpha[70]   0.47 0.26 -0.08  0.30  0.49  0.65  0.95 1.00
    alpha[71]  -0.19 0.36 -0.95 -0.43 -0.17  0.06  0.45 1.00
    alpha[72]   3.52 0.06  3.41  3.48  3.52  3.56  3.64 1.00
    alpha[73]   2.68 0.09  2.51  2.62  2.68  2.74  2.84 1.00
    alpha[74]   2.81 0.08  2.65  2.75  2.81  2.87  2.98 1.01
    alpha[75]   0.40 0.27 -0.20  0.22  0.42  0.59  0.89 1.00
    alpha[76]   1.19 0.19  0.82  1.06  1.19  1.32  1.53 1.00
    alpha[77]   2.72 0.09  2.54  2.66  2.72  2.78  2.88 1.00
    alpha[78]  -1.77 0.80 -3.58 -2.23 -1.66 -1.21 -0.49 1.00
    alpha[79]   3.33 0.07  3.18  3.28  3.32  3.37  3.46 1.00
    alpha[80]   2.08 0.12  1.84  2.00  2.08  2.16  2.31 1.00
    alpha[81]   2.78 0.10  2.60  2.72  2.79  2.85  2.97 1.00
    alpha[82]   1.90 0.13  1.64  1.81  1.90  1.99  2.14 1.00
    alpha[83]   2.31 0.11  2.09  2.23  2.31  2.38  2.52 1.00
    alpha[84]   3.36 0.06  3.23  3.32  3.36  3.40  3.48 1.00
    alpha[85]   2.64 0.09  2.46  2.58  2.64  2.70  2.81 1.00
    alpha[86]   3.08 0.08  2.93  3.03  3.08  3.13  3.22 1.00
    alpha[87]   3.48 0.06  3.37  3.44  3.48  3.53  3.59 1.00
    alpha[88]   3.22 0.07  3.08  3.18  3.22  3.27  3.35 1.00
    alpha[89]   3.16 0.07  3.02  3.12  3.16  3.21  3.30 1.00
    alpha[90]   2.64 0.09  2.46  2.58  2.64  2.70  2.81 1.00
    alpha[91]   1.62 0.15  1.32  1.52  1.62  1.73  1.90 1.00
    alpha[92]   2.71 0.09  2.54  2.66  2.71  2.77  2.89 1.00
    alpha[93]   2.54 0.09  2.34  2.48  2.55  2.61  2.72 1.00
    alpha[94]   2.39 0.10  2.19  2.33  2.39  2.46  2.59 1.00
    alpha[95]   1.55 0.15  1.23  1.45  1.56  1.66  1.83 1.00
    alpha[96]   3.59 0.05  3.48  3.55  3.59  3.62  3.69 1.00
    alpha[97]   2.79 0.09  2.61  2.73  2.79  2.85  2.97 1.00
    alpha[98]   2.18 0.11  1.95  2.10  2.18  2.26  2.40 1.00
    alpha[99]   3.66 0.06  3.55  3.62  3.66  3.70  3.77 1.01
    alpha[100]  3.08 0.07  2.94  3.04  3.08  3.13  3.22 1.00
    alpha[101]  2.46 0.10  2.26  2.40  2.46  2.53  2.65 1.00
    alpha[102]  3.13 0.07  2.98  3.08  3.13  3.18  3.26 1.00
    alpha[103] -1.75 0.79 -3.60 -2.20 -1.67 -1.18 -0.48 1.00
    alpha[104]  3.41 0.06  3.29  3.37  3.42  3.46  3.53 1.00
    alpha[105]  2.91 0.08  2.74  2.86  2.91  2.97  3.07 1.00
    alpha[106]  2.14 0.11  1.91  2.07  2.15  2.22  2.36 1.00
    alpha[107] -0.58 0.47 -1.59 -0.89 -0.55 -0.24  0.25 1.00
    alpha[108] -1.78 0.80 -3.75 -2.25 -1.69 -1.20 -0.52 1.00
    alpha[109]  1.68 0.14  1.39  1.59  1.69  1.78  1.95 1.00
    alpha[110]  3.36 0.07  3.23  3.32  3.36  3.41  3.49 1.00
    alpha[111]  2.56 0.09  2.38  2.51  2.57  2.63  2.75 1.00
               n.eff
    alpha[1]    1300
    alpha[2]    1500
    alpha[3]    1500
    alpha[4]    1500
    alpha[5]    1500
    alpha[6]    1200
    alpha[7]    1500
    alpha[8]    1500
    alpha[9]    1500
    alpha[10]   1500
    alpha[11]   1500
    alpha[12]   1500
    alpha[13]    720
    alpha[14]   1500
    alpha[15]   1500
    alpha[16]   1500
    alpha[17]   1500
    alpha[18]   1500
    alpha[19]    380
    alpha[20]   1500
    alpha[21]    910
    alpha[22]    510
    alpha[23]   1500
    alpha[24]   1500
    alpha[25]   1500
    alpha[26]   1500
    alpha[27]    360
    alpha[28]   1400
    alpha[29]   1500
    alpha[30]   1500
    alpha[31]    680
    alpha[32]   1500
    alpha[33]   1500
    alpha[34]   1500
    alpha[35]   1500
    alpha[36]   1500
    alpha[37]   1500
    alpha[38]   1500
    alpha[39]   1500
    alpha[40]   1500
    alpha[41]   1500
    alpha[42]   1500
    alpha[43]   1500
    alpha[44]    660
    alpha[45]    540
    alpha[46]   1500
    alpha[47]   1400
    alpha[48]   1100
    alpha[49]   1500
    alpha[50]   1500
    alpha[51]   1500
    alpha[52]   1500
    alpha[53]   1500
    alpha[54]   1500
    alpha[55]   1500
    alpha[56]   1000
    alpha[57]   1500
    alpha[58]   1500
    alpha[59]    720
    alpha[60]   1500
    alpha[61]   1500
    alpha[62]   1500
    alpha[63]   1500
    alpha[64]    540
    alpha[65]   1400
    alpha[66]   1500
    alpha[67]   1500
    alpha[68]   1500
    alpha[69]    420
    alpha[70]   1500
    alpha[71]    860
    alpha[72]   1500
    alpha[73]   1500
    alpha[74]    240
    alpha[75]   1500
    alpha[76]   1500
    alpha[77]   1500
    alpha[78]   1500
    alpha[79]   1300
    alpha[80]    690
    alpha[81]    800
    alpha[82]   1300
    alpha[83]   1500
    alpha[84]    880
    alpha[85]   1500
    alpha[86]   1500
    alpha[87]   1500
    alpha[88]    480
    alpha[89]   1500
    alpha[90]   1500
    alpha[91]    650
    alpha[92]   1500
    alpha[93]    960
    alpha[94]   1500
    alpha[95]    800
    alpha[96]   1500
    alpha[97]    480
    alpha[98]   1500
    alpha[99]    390
    alpha[100]  1500
    alpha[101]  1500
    alpha[102]  1500
    alpha[103]  1000
    alpha[104]  1500
    alpha[105]  1500
    alpha[106]  1500
    alpha[107]  1500
    alpha[108]  1300
    alpha[109]  1300
    alpha[110]  1500
    alpha[111]  1500
     [ reached getOption("max.print") -- omitted 133 rows ]

    For each parameter, n.eff is a crude measure of effective sample size,
    and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

    DIC info (using the rule, pD = Dbar-Dhat)
    pD = 241.4 and DIC = 11478.7
    DIC is an estimate of expected predictive error (lower deviance is better).


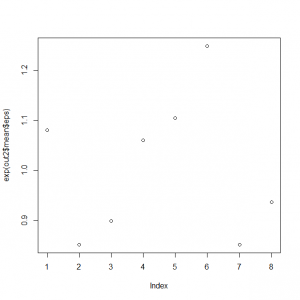

    > exp(out2$mean$eps)
    [1] 1.0801500 0.8518834 0.8990630 1.0599091 1.1047034 1.2489761 0.8515608
    [8] 0.9370838
    > mean(exp(out2$mean$eps))
    [1] 1.004166

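The year effects act multiplicatively on the expected count: relative to the baseline year (eps[1] = 0), each of the other eight years scales the expected count by a factor between about 0.85 and 1.25, and these factors average roughly 1.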
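As a rough illustration of that reading, the factors can be turned into percent changes. This is only a sketch: the values are copied from the `exp(out2$mean$eps)` output above, and labelling them 2000 to 2007 assumes the first year of the series is 1999, as in the plots in these notes. The names `year_factor` and `pct_change` are made up for the example.

```r
# exp(posterior mean of eps) for the eight non-baseline years, copied from the
# output above; the baseline year has eps fixed at 0, i.e. a factor of exactly 1
year_factor <- c(1.0801500, 0.8518834, 0.8990630, 1.0599091,
                 1.1047034, 1.2489761, 0.8515608, 0.9370838)
names(year_factor) <- 2000:2007   # assumed year labels

# Percent change in expected count relative to the baseline year
pct_change <- round(100 * (year_factor - 1), 1)
print(pct_change)

# Smallest and largest multiplicative year effect
print(range(year_factor))
```

Note that these are functions of posterior means only, so they ignore posterior uncertainty in eps.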


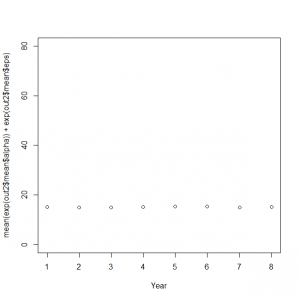

> plot(exp(out2$mean$eps))


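    > # With a log link the year effect multiplies the expected count,
    > # so the mean site-level count is scaled by exp(eps) rather than shifted by it
    > plot(mean(exp(out2$mean$alpha)) * exp(out2$mean$eps), xlab = "Year", ylim = c(0, 80))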

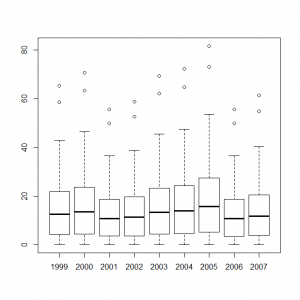

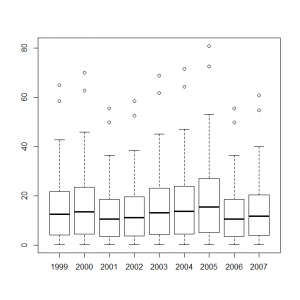

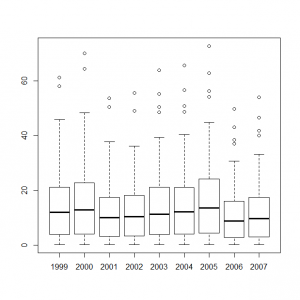

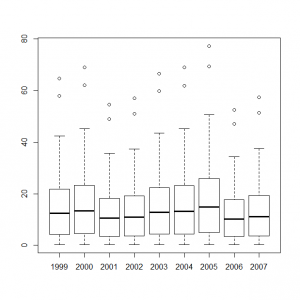

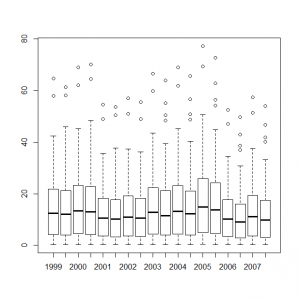

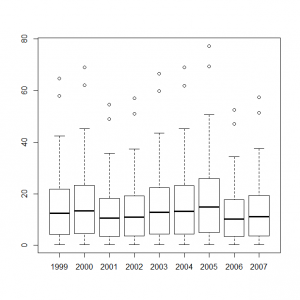

Next, we draw a boxplot, by year, of the expected counts per site under the model that combines site effects with fixed year effects.


    > # Site and year effects are both taken from model (c), i.e. out2.
    > # eps[1] is fixed at 0 (1999 is the baseline year), so out2$mean$eps holds
    > # only the eight estimates for 2000-2007; R indexing starts at 1, not 0.
    > y1999_s <- out2$mean$alpha
    > y2000_s <- out2$mean$alpha + out2$mean$eps[1]
    > y2001_s <- out2$mean$alpha + out2$mean$eps[2]
    > y2002_s <- out2$mean$alpha + out2$mean$eps[3]
    > y2003_s <- out2$mean$alpha + out2$mean$eps[4]
    > y2004_s <- out2$mean$alpha + out2$mean$eps[5]
    > y2005_s <- out2$mean$alpha + out2$mean$eps[6]
    > y2006_s <- out2$mean$alpha + out2$mean$eps[7]
    > y2007_s <- out2$mean$alpha + out2$mean$eps[8]
    > boxplot(exp(y1999_s), exp(y2000_s), exp(y2001_s), exp(y2002_s), exp(y2003_s), exp(y2004_s), exp(y2005_s), exp(y2006_s), exp(y2007_s), names=c(1999:2007))
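The per-year vectors can also be built in one step with `outer()`, which adds every site effect to every year effect. The sketch below uses small made-up vectors (`alpha_hat`, `eps_hat`) rather than the fitted values, so that it runs without WinBUGS; with a fitted model one would substitute the posterior means.

```r
# Hypothetical posterior means, for illustration only (not the fitted values)
alpha_hat <- c(2.5, 1.9, 3.1, 0.4)          # site effects on the log scale
eps_hat   <- c(0.08, -0.16, -0.11, 0.06)    # year effects for years 2..5

# Prepend 0 for the baseline year, then add each site effect to each year effect
log_lambda <- outer(alpha_hat, c(0, eps_hat), "+")   # rows = sites, columns = years
colnames(log_lambda) <- 1999:2003

# boxplot() on a matrix draws one box per column, here one per year
boxplot(exp(log_lambda), xlab = "Year", ylab = "Expected count")
```

Keeping the result as a sites-by-years matrix avoids one variable per year and scales to any number of years.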

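Next, instead of fixed effects, we specify random site effects (no year effects).

Here alpha[j] ~ dnorm(mu.alpha, tau.alpha): the site effects share a normal distribution with mean mu.alpha and precision tau.alpha. BUGS parameterizes dnorm by precision rather than standard deviation, so tau.alpha is computed as 1 / sd.alpha^2 from the hyperparameter sd.alpha, which is the standard deviation of the site effects and is given a uniform prior.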
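Written out in the notation used at the start of these notes, the model fitted in (d) can be summarized as follows. This is a restatement of the BUGS code rather than anything new; the symbols $\mu_\alpha$, $\sigma_\alpha$ and $\tau_\alpha$ stand for mu.alpha, sd.alpha and tau.alpha.

$$
\begin{aligned}
C_{i,j} &\sim \mathrm{Poisson}(\lambda_{i,j}) \\
\log(\lambda_{i,j}) &= \alpha_j \\
\alpha_j &\sim \mathrm{Normal}(\mu_\alpha, \sigma_\alpha^2), \qquad \tau_\alpha = 1/\sigma_\alpha^2 \\
\mu_\alpha &\sim \mathrm{Normal}(0, 100) \quad (\text{precision } 0.01) \\
\sigma_\alpha &\sim \mathrm{Uniform}(0, 5)
\end{aligned}
$$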


    > # (d) Random site effects (no year effects)
    > # Specify model in BUGS language
    > sink("GLMM1.txt")
    > cat("
    + model {
    + 
    + # Priors
    + for (j in 1:nsite){
    +    alpha[j] ~ dnorm(mu.alpha, tau.alpha)   # Random site effects
    + }
    + mu.alpha ~ dnorm(0, 0.01)
    + tau.alpha <- 1/ (sd.alpha * sd.alpha)
    + sd.alpha ~ dunif(0, 5)
    + 
    + # Likelihood
    + for (i in 1:nyear){
    +    for (j in 1:nsite){
    +       C[i,j] ~ dpois(lambda[i,j])
    +       lambda[i,j] <- exp(log.lambda[i,j])
    +       log.lambda[i,j] <- alpha[j]
    +    } #j
    + } #i
    + }
    + ",fill = TRUE)
    > sink()
    > 
    > # Bundle data
    > win.data <- list(C = t(C), nsite = nrow(C), nyear = ncol(C))
    > 
    > # Initial values
    > inits <- function() list(mu.alpha = runif(1, 2, 3))
    > 
    > # Parameters monitored
    > params <- c("alpha", "mu.alpha", "sd.alpha")
    > 
    > # MCMC settings
    > ni <- 1200
    > nt <- 2
    > nb <- 200
    > nc <- 3
    > 
    > # Call WinBUGS from R (BRT < 1 min)
    > out3 <- bugs(win.data, inits, params, "GLMM1.txt", n.chains = nc, 
    + n.thin = nt, n.iter = ni, n.burnin = nb, debug = TRUE, bugs.directory = bugs.dir, working.directory = getwd())
    > 
    > # Summarize posteriors
    > print(out3, dig = 2)
    Inference for Bugs model at "GLMM1.txt", fit using WinBUGS,
     3 chains, each with 1200 iterations (first 200 discarded), n.thin = 2
     n.sims = 1500 iterations saved
                mean   sd  2.5%   25%   50%   75% 97.5% Rhat
    alpha[1]   -0.02 0.32 -0.68 -0.23 -0.01  0.19  0.60 1.00
    alpha[2]   -0.28 0.37 -1.09 -0.50 -0.26 -0.02  0.40 1.00
    alpha[3]    2.46 0.10  2.27  2.39  2.46  2.53  2.64 1.00
    alpha[4]    1.98 0.13  1.72  1.90  1.99  2.06  2.23 1.00
    alpha[5]    3.09 0.07  2.94  3.05  3.10  3.14  3.22 1.00
    alpha[6]   -0.66 0.46 -1.63 -0.98 -0.62 -0.34  0.19 1.00
    alpha[7]    0.84 0.24  0.36  0.69  0.86  1.00  1.28 1.00
    alpha[8]    2.71 0.09  2.54  2.65  2.71  2.77  2.87 1.01
    alpha[9]    0.28 0.30 -0.35  0.08  0.29  0.49  0.82 1.00
    alpha[10]   1.75 0.14  1.48  1.66  1.76  1.85  2.00 1.00
    alpha[11]   3.36 0.06  3.24  3.32  3.36  3.40  3.48 1.00
    alpha[12]   3.55 0.06  3.45  3.52  3.55  3.59  3.66 1.00
    alpha[13]   2.74 0.09  2.57  2.68  2.75  2.80  2.91 1.00
    alpha[14]   1.77 0.14  1.48  1.68  1.77  1.87  2.03 1.00
    alpha[15]   1.55 0.16  1.23  1.45  1.55  1.65  1.86 1.00
    alpha[16]   2.51 0.09  2.33  2.45  2.51  2.58  2.69 1.00
    alpha[17]   2.42 0.10  2.21  2.35  2.42  2.48  2.61 1.00
    alpha[18]   2.71 0.09  2.54  2.65  2.71  2.77  2.88 1.00
    alpha[19]   3.58 0.06  3.47  3.54  3.58  3.62  3.68 1.00
    alpha[20]   2.61 0.09  2.44  2.55  2.61  2.67  2.80 1.00
    alpha[21]   2.34 0.11  2.12  2.27  2.34  2.41  2.53 1.00
    alpha[22]   1.95 0.13  1.69  1.86  1.96  2.04  2.22 1.00
    alpha[23]   2.53 0.10  2.34  2.46  2.53  2.60  2.71 1.00
    alpha[24]  -0.57 0.40 -1.44 -0.84 -0.55 -0.29  0.16 1.00
    alpha[25]   2.77 0.09  2.60  2.71  2.77  2.83  2.92 1.00
    alpha[26]   2.17 0.11  1.95  2.10  2.17  2.25  2.38 1.00
    alpha[27]   2.95 0.08  2.79  2.90  2.95  3.00  3.09 1.00
    alpha[28]   3.64 0.05  3.53  3.60  3.64  3.68  3.75 1.00
    alpha[29]   1.56 0.15  1.24  1.46  1.57  1.66  1.85 1.00
    alpha[30]   0.25 0.28 -0.35  0.08  0.27  0.44  0.76 1.00
    alpha[31]   1.55 0.16  1.24  1.45  1.56  1.66  1.84 1.00
    alpha[32]   3.27 0.06  3.15  3.23  3.27  3.32  3.40 1.00
    alpha[33]   4.18 0.04  4.10  4.15  4.18  4.20  4.25 1.00
    alpha[34]   0.11 0.35 -0.62 -0.10  0.14  0.35  0.74 1.00
    alpha[35]   3.60 0.06  3.49  3.56  3.60  3.64  3.71 1.00
    alpha[36]   2.67 0.10  2.47  2.60  2.67  2.74  2.86 1.00
    alpha[37]   3.46 0.06  3.35  3.42  3.46  3.50  3.58 1.00
    alpha[38]  -0.47 0.41 -1.33 -0.76 -0.46 -0.17  0.29 1.00
    alpha[39]   2.57 0.09  2.38  2.50  2.57  2.63  2.75 1.00
    alpha[40]   3.09 0.07  2.95  3.04  3.09  3.14  3.21 1.00
    alpha[41]   1.97 0.13  1.70  1.89  1.97  2.06  2.22 1.00
    alpha[42]   1.92 0.13  1.64  1.83  1.92  2.01  2.18 1.00
    alpha[43]   3.00 0.07  2.86  2.95  3.00  3.05  3.14 1.00
    alpha[44]   4.07 0.04  3.98  4.04  4.07  4.10  4.15 1.00
    alpha[45]   3.38 0.06  3.25  3.34  3.38  3.42  3.49 1.00
    alpha[46]   1.47 0.16  1.14  1.37  1.47  1.57  1.78 1.00
    alpha[47]  -0.97 0.49 -2.00 -1.28 -0.93 -0.63 -0.08 1.00
    alpha[48]   3.26 0.07  3.12  3.21  3.26  3.30  3.38 1.00
    alpha[49]   3.16 0.07  3.02  3.11  3.16  3.20  3.29 1.00
    alpha[50]   3.00 0.08  2.86  2.95  3.00  3.05  3.15 1.00
    alpha[51]   0.76 0.22  0.28  0.61  0.76  0.91  1.16 1.01
    alpha[52]   2.82 0.08  2.66  2.76  2.82  2.88  2.98 1.00
    alpha[53]   2.66 0.09  2.49  2.60  2.66  2.72  2.82 1.00
    alpha[54]   2.46 0.10  2.26  2.40  2.46  2.53  2.65 1.00
    alpha[55]   2.92 0.08  2.76  2.86  2.92  2.97  3.07 1.00
    alpha[56]   0.71 0.24  0.22  0.56  0.72  0.87  1.15 1.00
    alpha[57]   3.70 0.05  3.59  3.66  3.70  3.74  3.80 1.00
    alpha[58]   3.11 0.07  2.98  3.06  3.11  3.15  3.25 1.00
    alpha[59]   3.10 0.07  2.96  3.05  3.10  3.15  3.24 1.00
    alpha[60]   1.14 0.19  0.75  1.01  1.15  1.27  1.50 1.00
    alpha[61]   2.81 0.08  2.65  2.76  2.81  2.87  2.97 1.00
    alpha[62]   2.25 0.11  2.04  2.18  2.25  2.33  2.46 1.00
    alpha[63]   3.02 0.07  2.88  2.98  3.02  3.07  3.16 1.00
    alpha[64]   3.16 0.07  3.03  3.12  3.16  3.21  3.29 1.00
    alpha[65]   3.45 0.06  3.33  3.41  3.46  3.50  3.56 1.00
    alpha[66]   0.25 0.28 -0.32  0.07  0.26  0.44  0.79 1.00
    alpha[67]   3.51 0.06  3.40  3.47  3.51  3.55  3.63 1.00
    alpha[68]   0.72 0.25  0.18  0.56  0.73  0.89  1.18 1.00
    alpha[69]   2.65 0.09  2.48  2.59  2.65  2.71  2.83 1.00
    alpha[70]   0.55 0.24  0.08  0.38  0.55  0.72  1.00 1.00
    alpha[71]  -0.01 0.33 -0.72 -0.23  0.00  0.21  0.57 1.00
    alpha[72]   3.52 0.06  3.41  3.48  3.52  3.56  3.63 1.00
    alpha[73]   2.68 0.09  2.50  2.62  2.68  2.74  2.84 1.00
    alpha[74]   2.81 0.08  2.65  2.75  2.81  2.86  2.98 1.00
    alpha[75]   0.47 0.26 -0.09  0.31  0.48  0.65  0.96 1.00
    alpha[76]   1.20 0.19  0.81  1.07  1.21  1.33  1.54 1.00
    alpha[77]   2.72 0.09  2.55  2.66  2.72  2.78  2.89 1.00
    alpha[78]  -1.00 0.52 -2.15 -1.30 -0.96 -0.65 -0.09 1.00
    alpha[79]   3.32 0.07  3.18  3.27  3.32  3.36  3.45 1.00
    alpha[80]   2.08 0.12  1.84  2.00  2.08  2.16  2.31 1.00
    alpha[81]   2.81 0.09  2.63  2.75  2.82  2.88  2.99 1.00
    alpha[82]   1.91 0.13  1.66  1.83  1.91  2.00  2.15 1.00
    alpha[83]   2.30 0.10  2.10  2.23  2.31  2.38  2.51 1.00
    alpha[84]   3.36 0.06  3.24  3.32  3.36  3.40  3.49 1.00
    alpha[85]   2.64 0.09  2.47  2.58  2.64  2.71  2.81 1.00
    alpha[86]   3.08 0.07  2.94  3.03  3.08  3.13  3.22 1.00
    alpha[87]   3.48 0.06  3.35  3.44  3.48  3.52  3.60 1.00
    alpha[88]   3.22 0.07  3.09  3.18  3.22  3.27  3.34 1.00
    alpha[89]   3.15 0.07  3.02  3.11  3.16  3.20  3.29 1.00
    alpha[90]   2.64 0.09  2.46  2.58  2.64  2.70  2.81 1.00
    alpha[91]   1.63 0.15  1.34  1.52  1.63  1.73  1.90 1.00
    alpha[92]   2.72 0.09  2.55  2.66  2.72  2.77  2.88 1.00
    alpha[93]   2.54 0.09  2.35  2.48  2.54  2.61  2.71 1.00
    alpha[94]   2.39 0.10  2.20  2.32  2.39  2.46  2.58 1.00
    alpha[95]   1.56 0.16  1.23  1.45  1.56  1.67  1.87 1.00
    alpha[96]   3.59 0.06  3.48  3.55  3.59  3.62  3.69 1.00
    alpha[97]   2.79 0.09  2.61  2.73  2.79  2.85  2.95 1.00
    alpha[98]   2.18 0.11  1.97  2.11  2.18  2.26  2.40 1.00
    alpha[99]   3.66 0.05  3.55  3.62  3.66  3.69  3.76 1.00
    alpha[100]  3.09 0.07  2.95  3.04  3.09  3.13  3.23 1.00
    alpha[101]  2.46 0.10  2.27  2.39  2.46  2.52  2.64 1.00
    alpha[102]  3.12 0.07  2.99  3.07  3.12  3.17  3.26 1.00
    alpha[103] -0.97 0.49 -2.02 -1.30 -0.94 -0.62 -0.09 1.00
    alpha[104]  3.42 0.06  3.30  3.38  3.42  3.45  3.53 1.00
    alpha[105]  2.91 0.08  2.75  2.86  2.92  2.97  3.07 1.00
    alpha[106]  2.14 0.11  1.92  2.07  2.15  2.22  2.36 1.00
    alpha[107] -0.30 0.40 -1.19 -0.54 -0.27 -0.03  0.42 1.00
    alpha[108] -1.01 0.50 -2.11 -1.32 -0.97 -0.66 -0.14 1.00
    alpha[109]  1.69 0.14  1.41  1.60  1.70  1.79  1.97 1.00
    alpha[110]  3.36 0.06  3.24  3.32  3.36  3.41  3.48 1.00
    alpha[111]  2.57 0.09  2.39  2.51  2.57  2.63  2.74 1.00
               n.eff
    alpha[1]     970
    alpha[2]     500
    alpha[3]    1500
    alpha[4]    1500
    alpha[5]    1500
    alpha[6]    1500
    alpha[7]     790
    alpha[8]     390
    alpha[9]    1500
    alpha[10]   1500
    alpha[11]    990
    alpha[12]   1500
    alpha[13]   1500
    alpha[14]   1100
    alpha[15]   1500
    alpha[16]    830
    alpha[17]   1500
    alpha[18]   1500
    alpha[19]   1000
    alpha[20]   1500
    alpha[21]    900
    alpha[22]   1500
    alpha[23]   1500
    alpha[24]   1500
    alpha[25]   1300
    alpha[26]   1500
    alpha[27]   1500
    alpha[28]   1500
    alpha[29]   1500
    alpha[30]    910
    alpha[31]   1500
    alpha[32]   1500
    alpha[33]   1500
    alpha[34]   1500
    alpha[35]   1500
    alpha[36]   1500
    alpha[37]   1300
    alpha[38]   1500
    alpha[39]   1500
    alpha[40]   1500
    alpha[41]   1500
    alpha[42]   1300
    alpha[43]   1500
    alpha[44]   1500
    alpha[45]    960
    alpha[46]   1500
    alpha[47]   1100
    alpha[48]    840
    alpha[49]   1500
    alpha[50]    660
    alpha[51]   1300
    alpha[52]   1500
    alpha[53]   1500
    alpha[54]   1500
    alpha[55]   1500
    alpha[56]   1500
    alpha[57]   1500
    alpha[58]   1500
    alpha[59]   1000
    alpha[60]   1500
    alpha[61]   1500
    alpha[62]    880
    alpha[63]   1200
    alpha[64]   1500
    alpha[65]   1500
    alpha[66]   1500
    alpha[67]   1500
    alpha[68]   1500
    alpha[69]   1500
    alpha[70]   1500
    alpha[71]   1500
    alpha[72]   1500
    alpha[73]   1500
    alpha[74]   1500
    alpha[75]    600
    alpha[76]   1500
    alpha[77]   1100
    alpha[78]   1500
    alpha[79]   1500
    alpha[80]    530
    alpha[81]   1200
    alpha[82]   1500
    alpha[83]    530
    alpha[84]    910
    alpha[85]   1500
    alpha[86]    600
    alpha[87]   1500
    alpha[88]   1500
    alpha[89]   1300
    alpha[90]   1500
    alpha[91]   1100
    alpha[92]   1200
    alpha[93]   1200
    alpha[94]    700
    alpha[95]   1500
    alpha[96]   1500
    alpha[97]   1100
    alpha[98]   1500
    alpha[99]   1500
    alpha[100]  1500
    alpha[101]  1500
    alpha[102]   610
    alpha[103]  1500
    alpha[104]   700
    alpha[105]  1500
    alpha[106]  1500
    alpha[107]  1500
    alpha[108]  1500
    alpha[109]  1500
    alpha[110]  1500
    alpha[111]  1400
     [ reached getOption("max.print") -- omitted 127 rows ]

    For each parameter, n.eff is a crude measure of effective sample size,
    and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

    DIC info (using the rule, pD = Dbar-Dhat)
    pD = 229.8 and DIC = 11922.8
    DIC is an estimate of expected predictive error (lower deviance is better).

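Let us check the posterior means of alpha, mu.alpha and sd.alpha. tau.alpha is not among the monitored parameters, so it is derived from sd.alpha afterwards.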


    > out3$mean
    $alpha
      [1] -0.01533725 -0.25483459  2.45991400  1.98360400  3.08743000 -0.65058863
      [7]  0.83555450  2.71034067  0.26367719  1.74810800  3.36067600  3.55211800
     [13]  2.74362533  1.76068533  1.55458653  2.51811000  2.41051333  2.71100733
     [19]  3.57593200  2.61440133  2.33991533  1.95824067  2.53557733 -0.57266770
     [25]  2.76574400  2.17597800  2.94881333  3.63954467  1.55761133  0.24076148
     [31]  1.56049133  3.27382267  4.17649400  0.08410081  3.59772200  2.67575533
     [37]  3.46755867 -0.46578006  2.56406200  3.08569067  1.97035200  1.92505200
     [43]  3.00518133  4.07136067  3.37550600  1.45734520 -0.98750235  3.25465067
     [49]  3.15778000  2.99898467  0.76640529  2.81877667  2.65594133  2.46071133
     [55]  2.91586933  0.71273128  3.69966133  3.10829867  3.09257667  1.14205260
     [61]  2.81288600  2.24844400  3.02433467  3.15767133  3.45548467  0.25415669
     [67]  3.51180733  0.70812681  2.65263200  0.54197365 -0.04309421  3.52114133
     [73]  2.68258867  2.80702467  0.47426575  1.20383853  2.71743933 -1.01332955
     [79]  3.31562333  2.08604933  2.82052667  1.91033600  2.30389400  3.35725000
     [85]  2.63965267  3.07884400  3.48091867  3.22642133  3.15869200  2.63616667
     [91]  1.62374067  2.71726067  2.54215600  2.39303533  1.56222727  3.58530467
     [97]  2.78935333  2.17980000  3.65813733  3.08270867  2.45983000  3.12173200
    [103] -0.98512439  3.41575000  2.91305200  2.14147533 -0.30363415 -0.99167881
    [109]  1.69184600  3.36377533  2.56437400  3.49118867  2.78286533  2.17745067
    [115]  1.92194067  3.14188000  0.75938742  2.14241933  2.42534467  2.68444600
    [121]  2.96716667  2.08624600  0.18285495 -0.27685094 -0.97894368  2.86532867
    [127]  2.56211400 -0.41601571  1.85729600  2.20105400  2.45141067  0.09470000
    [133]  2.41320667  1.27309053 -0.58257942  2.64987333  3.68171267  2.55100400
    [139]  1.68370667  2.47877600  2.96373933  3.12956333 -0.14875821 -0.76882749
    [145]  2.53467800  3.18005133  2.28354200  2.13743400  3.34740800  1.41059320
    [151]  3.39228733  3.41933200  2.01853600  1.55735887  1.93617800  2.03170600
    [157] -0.39585732  1.03422447  2.95073533 -0.65669532  2.56454933  0.94349007
    [163]  2.54624200  3.24646933  2.86492733  0.85416301 -0.56884591  0.32289811
    [169]  1.29707460  3.05689000  2.00239133  3.01875667  3.23918600  0.47118377
    [175]  2.50150133  1.55617933 -0.75664216 -0.30672701  2.56147867  0.05974794
    [181]  2.07017867  2.89769133  0.58723572  1.41451113  2.08919600  2.13740000
    [187]  3.41672800  3.23547667  3.33813200  2.15142133  3.28565467  2.68743600
    [193]  3.40568600  1.98758067  2.19114533  2.67946400  0.53302566  3.61407733
    [199] -1.30134617  3.22686267  2.80979067  0.25148036  2.80160533  1.74418467
    [205] -0.40631645  2.03235733  3.24277733  2.58175333  2.95073800  2.03068933
    [211]  3.53456933  2.53464667  3.05011200  0.25024972  2.51641733  3.09360600
    [217]  2.92952267  3.24012800  2.65700267  3.14045600  1.13328927 -1.27686185
    [223]  2.56118200  0.90348025  3.54951467  1.38773340  0.48260841 -0.40627552
    [229]  2.80675000  3.33482200  3.75916533  3.66569800  3.67643200  3.36068933
    [235]  3.59042267

    $mu.alpha
    [1] 2.092945

    $sd.alpha
    [1] 1.328842

    $deviance
    [1] 11692.51

Since sd.alpha is 1.328842, tau.alpha is

> 1/(1.328842)^2

[1] 0.5663088

The random site effects follow normal(mu.alpha, tau.alpha) in the BUGS mean/precision parameterization; with the posterior means plugged in, this is normal(2.092945, 0.5663088).
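To see what this distribution implies on the count scale, one can plug the two posterior means into `qnorm()` and exponentiate. This is a minimal sketch: the variable names are made up for the example, and plugging in point estimates ignores the posterior uncertainty in mu.alpha and sd.alpha. Note that R's `qnorm()` takes a standard deviation, not a precision as BUGS does.

```r
# Posterior means reported above for model (d)
mu_alpha <- 2.092945
sd_alpha <- 1.328842

# BUGS precision implied by the standard deviation
tau_alpha <- 1 / sd_alpha^2

# Quantiles of the site-effect distribution on the log scale
probs <- c(0.025, 0.5, 0.975)
log_q <- qnorm(probs, mean = mu_alpha, sd = sd_alpha)

# The same quantiles on the count scale (a log-normal distribution)
count_q <- setNames(exp(log_q), c("2.5%", "50%", "97.5%"))

print(round(tau_alpha, 7))
print(round(count_q, 2))
```

The median of this log-normal distribution is exp(mu.alpha), which need not coincide with the empirical median of exp(alpha) across the 235 sites.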

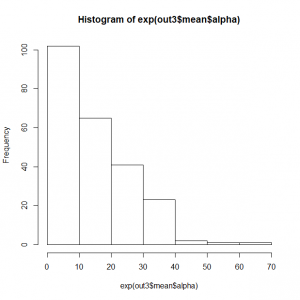

Frequency distribution of the exponentiated random site effects, exp(alpha)

> hist(exp(out3$mean$alpha))


    > quantile(exp(out3$mean$alpha))
            0%        25%        50%        75%       100% 
     0.2721652  4.2045089 12.6123690 21.9016223 65.1370818


    > matplot(1999:2007, t(C), type = "p",pch=1, lty = 1, lwd = 1, main = "", las = 1, ylab = "Territory counts", xlab = "Year", ylim = c(0, 80), frame = FALSE)
    > abline(h=12.6123690)
    > abline(h= 4.2045089)
    > abline(h=21.9016223)

Next, we specify both random site effects and random year effects.

The linear predictor is log(lambda[i,j]) = mu + alpha[j] + eps[i].
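To make the structure of this predictor concrete, here is a small simulation sketch in plain R. The dimensions (235 sites, 9 years) match the initial values used in these notes, but `mu`, `sd_alpha` and `sd_eps` are illustrative values chosen for the example, not estimates, and all variable names are assumptions.

```r
set.seed(1)                        # reproducible sketch
nsite <- 235; nyear <- 9           # dimensions used in these notes
mu <- 2.1                          # grand mean on the log scale (illustrative)
sd_alpha <- 1.3; sd_eps <- 0.1     # illustrative standard deviations

alpha <- rnorm(nsite, mean = 0, sd = sd_alpha)   # random site effects, centred on 0
eps   <- rnorm(nyear, mean = 0, sd = sd_eps)     # random year effects, centred on 0

# log(lambda[i, j]) = mu + alpha[j] + eps[i]; rows are years, columns are sites
log_lambda <- mu + outer(eps, alpha, "+")

# Poisson counts around the expected values, same year-by-site layout as C[i, j]
C_sim <- matrix(rpois(nyear * nsite, lambda = exp(log_lambda)), nrow = nyear)

print(dim(C_sim))
```

Because both sets of effects are centred on 0, `mu` carries the overall level, which is why the site effects in this model are interpreted as deviations from the grand mean.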


    > # (e) Random site and random year effects
    > # Specify model in BUGS language
    > sink("GLMM2.txt")
    > cat("
    + model {
    + 
    + # Priors
    + mu ~ dnorm(0, 0.01)                  # Grand mean
    + 
    + for (j in 1:nsite){
    +    alpha[j] ~ dnorm(0, tau.alpha)    # Random site effects
    + }
    + tau.alpha <- 1/ (sd.alpha * sd.alpha)
    + sd.alpha ~ dunif(0, 5)
    + 
    + for (i in 1:nyear){
    +    eps[i] ~ dnorm(0, tau.eps)        # Random year effects
    + }
    + tau.eps <- 1/ (sd.eps * sd.eps)
    + sd.eps ~ dunif(0, 3)
    + 
    + # Likelihood
    + for (i in 1:nyear){
    +    for (j in 1:nsite){
    +       C[i,j] ~ dpois(lambda[i,j])
    +       lambda[i,j] <- exp(log.lambda[i,j])
    +       log.lambda[i,j] <- mu + alpha[j] + eps[i]
    +    } #j
    + } #i
    + }
    + ",fill = TRUE)
    > sink()
    > 
    > # Bundle data
    > win.data <- list(C = t(C), nsite = nrow(C), nyear = ncol(C))
    > 
    > # Initial values (not required for all)
    > inits <- function() list(mu = runif(1, 0, 4), alpha = runif(235, -2, 2), eps = runif(9, -1, 1))
    > 
    > # Parameters monitored
    > params <- c("mu", "alpha", "eps", "sd.alpha", "sd.eps")
    > 
    > # MCMC settings
    > ni <- 6000
    > nt <- 5
    > nb <- 1000
    > nc <- 3
    > 
    > # Call WinBUGS from R (BRT 3 min)
    > out4 <- bugs(win.data, inits, params, "GLMM2.txt", n.chains = nc, 
    + n.thin = nt, n.iter = ni, n.burnin = nb, debug = TRUE, bugs.directory = bugs.dir, working.directory = getwd())
    > 
    > # Summarize posteriors
    > print(out4, dig = 2)
    Inference for Bugs model at "GLMM2.txt", fit using WinBUGS,
     3 chains, each with 6000 iterations (first 1000 discarded), n.thin = 5
     n.sims = 3000 iterations saved
                mean   sd  2.5%   25%   50%   75% 97.5% Rhat
    mu          2.12 0.08  1.95  2.07  2.13  2.18  2.27 1.01
    alpha[1]   -2.14 0.33 -2.81 -2.36 -2.12 -1.91 -1.51 1.00
    alpha[2]   -2.38 0.37 -3.15 -2.61 -2.36 -2.12 -1.70 1.00
    alpha[3]    0.34 0.13  0.09  0.25  0.34  0.42  0.59 1.00
    alpha[4]   -0.13 0.14 -0.41 -0.22 -0.12 -0.03  0.14 1.00
    alpha[5]    0.98 0.10  0.77  0.91  0.97  1.04  1.19 1.00
    alpha[6]   -2.78 0.47 -3.77 -3.08 -2.73 -2.46 -1.96 1.00
    alpha[7]   -1.31 0.26 -1.81 -1.47 -1.30 -1.14 -0.82 1.00
    alpha[8]    0.59 0.12  0.37  0.51  0.59  0.67  0.82 1.00
    alpha[9]   -1.84 0.32 -2.50 -2.04 -1.83 -1.62 -1.25 1.00
    alpha[10]  -0.37 0.16 -0.68 -0.47 -0.36 -0.26 -0.05 1.00
    alpha[11]   1.24 0.10  1.06  1.18  1.24  1.31  1.44 1.01
    alpha[12]   1.44 0.09  1.26  1.37  1.43  1.50  1.62 1.01
    alpha[13]   0.63 0.11  0.42  0.55  0.63  0.70  0.85 1.00
    alpha[14]  -0.37 0.16 -0.71 -0.48 -0.37 -0.26 -0.05 1.00
    alpha[15]  -0.55 0.18 -0.90 -0.67 -0.55 -0.43 -0.21 1.00
    alpha[16]   0.40 0.12  0.16  0.32  0.40  0.48  0.64 1.00
    alpha[17]   0.30 0.12  0.05  0.21  0.30  0.38  0.53 1.01
    alpha[18]   0.59 0.11  0.38  0.52  0.59  0.66  0.82 1.00
    alpha[19]   1.46 0.09  1.28  1.40  1.46  1.52  1.64 1.01
    alpha[20]   0.50 0.12  0.28  0.42  0.50  0.58  0.73 1.01
    alpha[21]   0.22 0.13 -0.03  0.14  0.22  0.31  0.48 1.00
    alpha[22]  -0.15 0.15 -0.44 -0.25 -0.15 -0.05  0.15 1.00
    alpha[23]   0.42 0.12  0.18  0.34  0.42  0.50  0.66 1.00
    alpha[24]  -2.69 0.42 -3.58 -2.97 -2.66 -2.39 -1.93 1.00
    alpha[25]   0.65 0.11  0.43  0.57  0.65  0.73  0.87 1.00
    alpha[26]   0.06 0.14 -0.20 -0.03  0.06  0.16  0.33 1.00
    alpha[27]   0.84 0.11  0.64  0.76  0.84  0.91  1.05 1.00
    alpha[28]   1.52 0.09  1.34  1.46  1.52  1.58  1.71 1.00
    alpha[29]  -0.56 0.17 -0.89 -0.67 -0.56 -0.44 -0.22 1.00
    alpha[30]  -1.86 0.29 -2.48 -2.05 -1.85 -1.66 -1.31 1.00
    alpha[31]  -0.55 0.17 -0.90 -0.67 -0.55 -0.43 -0.23 1.00
    alpha[32]   1.15 0.10  0.97  1.09  1.15  1.22  1.35 1.01
    alpha[33]   2.06 0.09  1.90  2.00  2.06  2.12  2.25 1.01
    alpha[34]  -2.05 0.35 -2.77 -2.27 -2.03 -1.80 -1.42 1.00
    alpha[35]   1.48 0.09  1.31  1.42  1.48  1.54  1.67 1.01
    alpha[36]   0.55 0.12  0.30  0.46  0.54  0.63  0.79 1.00
    alpha[37]   1.35 0.09  1.17  1.28  1.35  1.41  1.54 1.00
    alpha[38]  -2.59 0.43 -3.50 -2.87 -2.55 -2.27 -1.82 1.00
    alpha[39]   0.45 0.11  0.22  0.37  0.45  0.52  0.67 1.00
    alpha[40]   0.97 0.10  0.77  0.90  0.97  1.04  1.17 1.01
    alpha[41]  -0.14 0.15 -0.44 -0.24 -0.14 -0.04  0.13 1.01
    alpha[42]  -0.21 0.16 -0.52 -0.32 -0.20 -0.10  0.08 1.00
    alpha[43]   0.89 0.11  0.68  0.82  0.88  0.96  1.10 1.00
    alpha[44]   1.96 0.09  1.79  1.90  1.95  2.01  2.13 1.01
    alpha[45]   1.26 0.10  1.07  1.19  1.26  1.32  1.46 1.00
    alpha[46]  -0.65 0.17 -1.00 -0.77 -0.65 -0.54 -0.32 1.00
    alpha[47]  -3.12 0.52 -4.26 -3.44 -3.08 -2.75 -2.22 1.00
    alpha[48]   1.14 0.10  0.96  1.08  1.14  1.21  1.33 1.00
    alpha[49]   1.04 0.10  0.84  0.97  1.04  1.11  1.25 1.01
    alpha[50]   0.89 0.10  0.69  0.81  0.88  0.95  1.10 1.00
    alpha[51]  -1.35 0.24 -1.83 -1.51 -1.34 -1.18 -0.92 1.00
    alpha[52]   0.70 0.11  0.49  0.63  0.70  0.78  0.92 1.00
    alpha[53]   0.54 0.11  0.33  0.47  0.54  0.62  0.76 1.00
    alpha[54]   0.35 0.12  0.11  0.27  0.35  0.43  0.59 1.00
    alpha[55]   0.80 0.11  0.60  0.73  0.80  0.87  1.02 1.01
    alpha[56]  -1.37 0.26 -1.89 -1.54 -1.36 -1.19 -0.88 1.00
    alpha[57]   1.59 0.09  1.42  1.52  1.58  1.64  1.78 1.01
    alpha[58]   0.99 0.10  0.80  0.92  0.99  1.06  1.21 1.01
    alpha[59]   0.98 0.10  0.78  0.91  0.98  1.05  1.19 1.00
    alpha[60]  -0.97 0.21 -1.38 -1.12 -0.97 -0.83 -0.57 1.00
    alpha[61]   0.70 0.11  0.49  0.62  0.70  0.77  0.92 1.00
    alpha[62]   0.13 0.13 -0.12  0.04  0.14  0.22  0.39 1.00
    alpha[63]   0.91 0.10  0.70  0.84  0.91  0.98  1.12 1.01
    alpha[64]   1.04 0.10  0.85  0.97  1.04  1.11  1.25 1.00
    alpha[65]   1.34 0.10  1.16  1.27  1.34  1.40  1.54 1.00
    alpha[66]  -1.86 0.30 -2.47 -2.04 -1.84 -1.66 -1.29 1.00
    alpha[67]   1.40 0.09  1.22  1.33  1.39  1.46  1.59 1.01
    alpha[68]  -1.41 0.26 -1.93 -1.58 -1.40 -1.23 -0.93 1.00
    alpha[69]   0.53 0.12  0.30  0.46  0.53  0.61  0.77 1.01
    alpha[70]  -1.58 0.26 -2.12 -1.74 -1.57 -1.40 -1.10 1.00
    alpha[71]  -2.14 0.34 -2.84 -2.37 -2.13 -1.91 -1.52 1.00
    alpha[72]   1.41 0.09  1.22  1.34  1.40  1.47  1.60 1.01
    alpha[73]   0.56 0.11  0.34  0.49  0.56  0.64  0.79 1.00
    alpha[74]   0.69 0.11  0.48  0.61  0.69  0.76  0.91 1.00
    alpha[75]  -1.65 0.27 -2.21 -1.82 -1.64 -1.46 -1.15 1.00
    alpha[76]  -0.91 0.19 -1.29 -1.03 -0.91 -0.78 -0.54 1.00
    alpha[77]   0.60 0.12  0.37  0.52  0.60  0.68  0.83 1.00
    alpha[78]  -3.11 0.52 -4.23 -3.43 -3.06 -2.76 -2.19 1.00
    alpha[79]   1.21 0.10  1.02  1.14  1.20  1.27  1.42 1.01
    alpha[80]  -0.03 0.14 -0.30 -0.13 -0.03  0.07  0.25 1.00
    alpha[81]   0.67 0.12  0.44  0.59  0.67  0.75  0.90 1.00
    alpha[82]  -0.21 0.15 -0.50 -0.31 -0.21 -0.11  0.08 1.01
    alpha[83]   0.19 0.13 -0.06  0.11  0.19  0.28  0.44 1.00
    alpha[84]   1.24 0.10  1.05  1.18  1.24  1.31  1.44 1.01
    alpha[85]   0.52 0.12  0.30  0.44  0.52  0.60  0.75 1.00
    alpha[86]   0.97 0.10  0.77  0.90  0.97  1.03  1.17 1.00
    alpha[87]   1.37 0.10  1.18  1.30  1.37  1.43  1.56 1.00
    alpha[88]   1.11 0.10  0.92  1.04  1.11  1.17  1.31 1.01
    alpha[89]   1.04 0.10  0.85  0.97  1.04  1.11  1.25 1.00
    alpha[90]   0.53 0.11  0.30  0.45  0.52  0.60  0.75 1.00
    alpha[91]  -0.49 0.17 -0.82 -0.60 -0.49 -0.38 -0.18 1.00
    alpha[92]   0.60 0.11  0.38  0.52  0.60  0.67  0.82 1.00
    alpha[93]   0.42 0.12  0.19  0.34  0.42  0.50  0.66 1.00
    alpha[94]   0.27 0.12  0.04  0.19  0.28  0.36  0.52 1.00
    alpha[95]  -0.56 0.17 -0.89 -0.66 -0.56 -0.44 -0.23 1.01
    alpha[96]   1.47 0.09  1.30  1.41  1.47  1.53  1.66 1.01
    alpha[97]   0.67 0.11  0.44  0.59  0.67  0.75  0.90 1.00
    alpha[98]   0.06 0.13 -0.21 -0.02  0.06  0.15  0.33 1.00
    alpha[99]   1.54 0.09  1.36  1.48  1.54  1.60  1.73 1.01
    alpha[100]  0.97 0.11  0.77  0.90  0.97  1.04  1.19 1.00
    alpha[101]  0.35 0.12  0.10  0.26  0.35  0.43  0.58 1.00
    alpha[102]  1.01 0.10  0.82  0.94  1.01  1.08  1.22 1.00
    alpha[103] -3.12 0.51 -4.21 -3.43 -3.09 -2.76 -2.22 1.00
    alpha[104]  1.30 0.10  1.11  1.24  1.30  1.36  1.50 1.00
    alpha[105]  0.80 0.11  0.58  0.72  0.79  0.87  1.01 1.01
    alpha[106]  0.02 0.14 -0.25 -0.07  0.03  0.12  0.29 1.00
    alpha[107] -2.43 0.40 -3.25 -2.69 -2.41 -2.16 -1.68 1.00
    alpha[108] -3.10 0.52 -4.23 -3.42 -3.06 -2.74 -2.20 1.00
    alpha[109] -0.42 0.16 -0.73 -0.52 -0.42 -0.32 -0.12 1.00
    alpha[110]  1.24 0.10  1.06  1.18  1.24  1.31  1.45 1.01
               n.eff
    mu           310
    alpha[1]    3000
    alpha[2]    3000
    alpha[3]    1100
    alpha[4]    1100
    alpha[5]     940
    alpha[6]    3000
    alpha[7]    3000
    alpha[8]    1700
    alpha[9]    3000
    alpha[10]   2700
    alpha[11]    500
    alpha[12]    420
    alpha[13]    860
    alpha[14]   3000
    alpha[15]   3000
    alpha[16]    950
    alpha[17]    450
    alpha[18]   1000
    alpha[19]    500
    alpha[20]    590
    alpha[21]    690
    alpha[22]    990
    alpha[23]    880
    alpha[24]    640
    alpha[25]   1400
    alpha[26]    470
    alpha[27]   1000
    alpha[28]    580
    alpha[29]    880
    alpha[30]   2200
    alpha[31]    950
    alpha[32]    710
    alpha[33]    630
    alpha[34]   2000
    alpha[35]    430
    alpha[36]    710
    alpha[37]    780
    alpha[38]   3000
    alpha[39]   3000
    alpha[40]    410
    alpha[41]    340
    alpha[42]    790
    alpha[43]    810
    alpha[44]    310
    alpha[45]    620
    alpha[46]   3000
    alpha[47]   1400
    alpha[48]    650
    alpha[49]    300
    alpha[50]    570
    alpha[51]    600
    alpha[52]    790
    alpha[53]    700
    alpha[54]    650
    alpha[55]    320
    alpha[56]   3000
    alpha[57]    410
    alpha[58]    370
    alpha[59]    720
    alpha[60]   1300
    alpha[61]    660
    alpha[62]   1400
    alpha[63]    640
    alpha[64]    650
    alpha[65]    560
    alpha[66]   2500
    alpha[67]    460
    alpha[68]   1600
    alpha[69]    390
    alpha[70]   2700
    alpha[71]   1700
    alpha[72]    280
    alpha[73]    780
    alpha[74]    650
    alpha[75]    700
    alpha[76]   1700
    alpha[77]    550
    alpha[78]    530
    alpha[79]    420
    alpha[80]    530
    alpha[81]   3000
    alpha[82]    390
    alpha[83]    870
    alpha[84]    450
    alpha[85]    730
    alpha[86]    670
    alpha[87]    610
    alpha[88]    410
    alpha[89]    510
    alpha[90]   1100
    alpha[91]   1400
    alpha[92]    510
    alpha[93]   2200
    alpha[94]   1000
    alpha[95]    420
    alpha[96]    460
    alpha[97]    790
    alpha[98]   3000
    alpha[99]    430
    alpha[100]   680
    alpha[101]   830
    alpha[102]   940
    alpha[103]  1800
    alpha[104]   470
    alpha[105]   570
    alpha[106]   570
    alpha[107]  3000
    alpha[108]  3000
    alpha[109]  3000
    alpha[110]   380
     [ reached getOption("max.print") -- omitted 137 rows ]

    For each parameter, n.eff is a crude measure of effective sample size,
    and Rhat is the potential scale reduction factor (at convergence, Rhat=1).

    DIC info (using the rule, pD = Dbar-Dhat)
    pD = 238.0 and DIC = 11479.5
    DIC is an estimate of expected predictive error (lower deviance is better).

Let us examine the results stored in out4.

|


> out4$mean
$mu
[1] 2.079934

$alpha
[1] -2.1076160000 -2.3545093333 0.3751784247 -0.0984386457 1.0117119667
[6] -2.7426016667 -1.2722481667 0.6290491000 -1.8041110667 -0.3334423581
[11] 1.2776041333 1.4716913333 0.6638995000 -0.3316310800 -0.5219901773
[16] 0.4376290920 0.3309014903 0.6258340000 1.4953263333 0.5365536000
[21] 0.2569850564 -0.1067241607 0.4524984760 -2.6553843333 0.6851756333
[26] 0.1011082043 0.8702921000 1.5584740000 -0.5210529813 -1.8374272333
[31] -0.5172678170 1.1925051000 2.0971663333 -2.0156803333 1.5172503333
[36] 0.5815341567 1.3847936667 -2.5509730000 0.4860883400 1.0044183667
[41] -0.1149639381 -0.1809190222 0.9218477333 1.9897960000 1.2954192333
[46] -0.6179790767 -3.0743640000 1.1765601000 1.0785564000 0.9208005667
[51] -1.3141167000 0.7389478000 0.5762309000 0.3779662058 0.8343946667
[56] -1.3364468333 1.6224210000 1.0303545333 1.0165552667 -0.9413371333
[61] 0.7309692000 0.1710958487 0.9433328667 1.0801161000 1.3741570000
[66] -1.8304656667 1.4331556667 -1.3692234667 0.5680491333 -1.5452493000
[71] -2.1153153333 1.4399076667 0.6005449333 0.7269949333 -1.6066126333
[76] -0.8761065533 0.6366652667 -3.0903596667 1.2442758000 0.0063760463
[81] 0.7061618333 -0.1762970090 0.2235286068 1.2789878333 0.5588846667
[86] 0.9991912333 1.4022243333 1.1422687333 1.0787838333 0.5608177033
[91] -0.4528720143 0.6340963667 0.4599763233 0.3121647289 -0.5274245900
[96] 1.5048220000 0.7055925000 0.0960184140 1.5761736667 1.0060722667
[101] 0.3778606759 1.0448842333 -3.0750446667 1.3337299333 0.8297113000
[106] 0.0624435267 -2.3842036667 -3.0836513333 -0.3925182080 1.2829311667
[111] 0.4872490100 1.4112093333 0.7004724000 0.0940167048 -0.1538368739
[116] 1.0600331000 -1.3133232667 0.0574847059 0.3177391546 0.6007570333
[121] 0.8875548333 0.0062407210 -1.9186790000 -2.3564910000 -3.0848003333
[126] 0.7630713667 0.4794156700 -2.4906266667 -0.2246371851 0.1215710147
[131] 0.3711607637 -2.0100346667 0.3331621467 -0.8154101000 -2.6595820000
[136] 0.5663238000 1.6012320000 0.4664238067 -0.3920611097 0.3991698107
[141] 0.8802210667 1.0505269667 -2.2329366667 -2.8376356667 0.4507405133
[146] 1.1030347667 0.2028010757 0.0580259607 1.2652343667 -0.6707134367
[151] 1.3082605333 1.3406847000 -0.0674420304 -0.5236424237 -0.1402735253
[156] -0.0485068804 -2.4839703333 -1.0550641667 0.8693103000 -2.7530903333
[161] 0.4886674733 -1.1371263000 0.4706723233 1.1709639667 0.7846504667
[166] -1.2228740667 -2.6560760000 -1.7564072667 -0.7843870333 0.9741382333
[171] -0.0787713362 0.9382541333 1.1588118667 -1.6077497667 0.4257994027
[176] -0.5320175540 -2.8471593333 -2.3876526667 0.4780909833 -2.0132366667
[181] -0.0224946329 0.8170963667 -1.4903307333 -0.6725187500 0.0002735269
[186] 0.0615285808 1.3355770000 1.1549789333 1.2540017000 0.0616946154
[191] 1.2071309333 0.6062342633 1.3229721667 -0.0934144066 0.1088368608
[196] 0.5962894000 -1.5499949667 1.5353040000 -3.3432026667 1.1474043667
[201] 0.7259523667 -1.8305562333 0.7120414667 -0.3306824111 -2.4851983333
[206] -0.0489758090 1.1645877000 0.5020208667 0.8698751333 -0.0625316114
[211] 1.4539290000 0.4534100500 0.9690620667 -1.8343858333 0.4377375067
[216] 1.0084791667 0.8493568000 1.1608506667 0.5755300733 1.0593044000
[221] -0.9435206000 -3.3489493333 0.4749199933 -1.1803739667 1.4680206667
[226] -0.6889885667 -1.6056878333 -2.4965093333 0.7285914667 1.2559645000
[231] 1.6771703333 1.5841696667 1.5941433333 1.2796602333 1.5100196667

$eps
[1] -0.002253751 0.071110502 -0.160016563 -0.108322322 0.054035349 0.094344020
[7] 0.216188823 -0.161350977 -0.067930269

$sd.alpha
[1] 1.329022

$sd.eps
[1] 0.1589848

$deviance
[1] 11240.39 |

tau.alpha, the precision of the site effects computed from the posterior mean of sd.alpha, is:

|


> 1/(out4$mean$sd.alpha)^2
[1] 0.5661551 |
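The value above plugs the posterior mean of sd.alpha into tau = 1/sd^2. The following is a minimal sketch of how that plug-in value can differ from the posterior mean of tau itself. The vector `sd_draws` is a hypothetical stand-in generated for illustration; it is not the MCMC output of out4.

```r
# Hypothetical stand-in for posterior draws of sd.alpha (NOT taken from out4)
set.seed(1)
sd_draws <- rlnorm(3000, meanlog = log(1.33), sdlog = 0.05)

# Plug-in precision: transform the posterior mean of the SD
tau_plugin <- 1 / mean(sd_draws)^2

# Posterior mean of the precision: transform every draw, then average
tau_mean <- mean(1 / sd_draws^2)

c(plugin = tau_plugin, mean_of_draws = tau_mean)
```

Because 1/x^2 is convex for x > 0, the mean of the transformed draws is never smaller than the plug-in value. If out4 is an R2WinBUGS-style object, the real draws should be available as `out4$sims.list$sd.alpha` and could be substituted for `sd_draws`.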

μ = 2.079934 (the overall intercept on the log scale)

alpha = random site effects

The random year effects eps are:

[1] -0.002253751 0.071110502 -0.160016563 -0.108322322 0.054035349 0.094344020

[7] 0.216188823 -0.161350977 -0.067930269
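Since the model uses a log link, each eps value acts as a multiplicative factor on the expected count. As a small sketch (the 1999 to 2007 labels assume that eps[1] to eps[9] correspond to those years, as in the box plot code of this note):

```r
# Posterior means of the year effects, copied from the output above
eps <- c(-0.002253751, 0.071110502, -0.160016563, -0.108322322, 0.054035349,
         0.094344020, 0.216188823, -0.161350977, -0.067930269)
names(eps) <- 1999:2007  # assumed year labels

# eps is additive on the log scale, so exp(eps) multiplies the expected count
year_factor <- exp(eps)
round(year_factor, 2)
```

A factor above 1 means that expected counts in that year are higher than in an average year. For example, the largest effect (eps = 0.216, labelled 2005) corresponds to expected counts roughly 24% higher.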

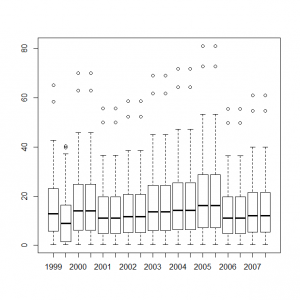

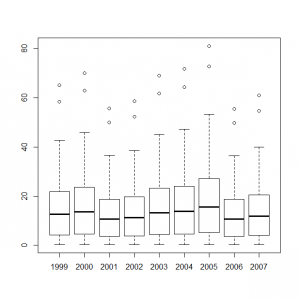

Putting these estimates together, box plots of the model-based expected counts for each year (across sites) can be drawn as follows:

|


> y1999_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[1]
> y2000_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[2]
> y2001_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[3]
> y2002_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[4]
> y2003_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[5]
> y2004_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[6]
> y2005_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[7]
> y2006_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[8]
> y2007_t <- out4$mean$alpha + out4$mean$mu + out4$mean$eps[9]
> boxplot(exp(y1999_t), exp(y2000_t), exp(y2001_t), exp(y2002_t), exp(y2003_t),
+ exp(y2004_t), exp(y2005_t), exp(y2006_t), exp(y2007_t), names=c(1999:2007)) |
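The nine near-identical assignments above can be collapsed into a single matrix computation. The following is a sketch only; `expected_by_year` is a hypothetical helper name, and the inputs are toy values rather than the out4 estimates.

```r
# Hypothetical helper: expected counts with sites in rows and years in columns
expected_by_year <- function(mu, alpha, eps, years) {
  # log-scale predictor mu + alpha[j] + eps[i] for every site/year pair
  log_lambda <- mu + outer(alpha, eps, "+")
  colnames(log_lambda) <- years
  exp(log_lambda)
}

# Toy inputs for illustration only (not the out4 estimates)
lam <- expected_by_year(mu = 2, alpha = c(-1, 0, 1), eps = c(-0.1, 0, 0.2),
                        years = 1999:2001)

# boxplot() on a matrix draws one box per column, i.e. one per year
boxplot(lam, xlab = "Year", ylab = "Expected count")
```

With the fitted model, the call would presumably be `expected_by_year(out4$mean$mu, out4$mean$alpha, out4$mean$eps, 1999:2007)`, which should give the same boxes as the step-by-step version.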

Next, we add the first-year observer effect as a fixed effect.

The linear predictor becomes log(lambda[i,j]) = mu + beta2 * first[i,j] + alpha[j] + eps[i]
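To make the structure of the linear predictor mu + beta2 * first[i,j] + alpha[j] + eps[i] concrete, here is a rough simulation sketch. All parameter values and the layout of `first` are assumptions chosen for illustration (i indexes years, j indexes sites, and every site is given a first-year observer in year 1); none of them come from the real data set.

```r
set.seed(42)
nsite <- 4
nyear <- 3
mu    <- 2     # hypothetical overall intercept
beta2 <- 0.3   # hypothetical first-year observer effect

alpha <- rnorm(nsite, mean = 0, sd = 1)    # random site effects
eps   <- rnorm(nyear, mean = 0, sd = 0.2)  # random year effects

# first[i, j] = 1 if the observer surveys site j for the first time in year i
first <- matrix(0, nrow = nyear, ncol = nsite)
first[1, ] <- 1

# Expand alpha along rows and eps along columns to year x site matrices
log_lambda <- mu + beta2 * first +
  matrix(alpha, nyear, nsite, byrow = TRUE) +
  matrix(eps, nyear, nsite)

# Poisson counts around the expected values
C <- matrix(rpois(nyear * nsite, lambda = exp(log_lambda)), nyear, nsite)
C
```

Here beta2 shifts the log expected count only in the cells where `first` equals 1, while alpha and eps shift whole columns (sites) and whole rows (years) respectively.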

|
