Studying "Mastering Machine Learning with Spark 2.x" (continued): building a classification model for the Higgs boson


Building an H2O Deep Learning model

Starting from scratch: start HDFS and YARN, then launch Spark 2.1.1 with the H2O (Sparkling Water) packages included.


    MacBook-Pro-5:sbin $ ./start-dfs.sh
    Starting namenodes on [localhost]
    Starting datanodes
    Starting secondary namenodes [MacBook-Pro-5]
    MacBook-Pro-5:sbin $ ./start-yarn.sh
    WARNING: YARN_OPTS has been replaced by HADOOP_OPTS. Using value of YARN_OPTS.
    Starting resourcemanager
    Starting nodemanagers
    localhost: WARNING: YARN_OPTS has been replaced by HADOOP_OPTS. Using value of YARN_OPTS.
    MacBook-Pro-5:sbin $ export SPARKLING_WATER_VERSION="2.1.12"
    MacBook-Pro-5:sbin $ export SPARK_PACKAGES="ai.h2o:sparkling-water-core_2.11:${SPARKLING_WATER_VERSION},\
    ai.h2o:sparkling-water-repl_2.11:${SPARKLING_WATER_VERSION},\
    ai.h2o:sparkling-water-ml_2.11:${SPARKLING_WATER_VERSION}"
    MacBook-Pro-5:sbin $ $SPARK_HOME/bin/spark-shell --master local[*] --driver-memory 8g --executor-memory 8g --packages "$SPARK_PACKAGES"
    Ivy Default Cache set to: /Users/*******/.ivy2/cache
    The jars for the packages stored in: /Users*******/.ivy2/jars
    :: loading settings :: url = jar:file:/opt/spark-2.1.1-bin-hadoop2.7/jars/ivy-2.4.0.jar!/org/apache/ivy/core/settings/ivysettings.xml
    ai.h2o#sparkling-water-core_2.11 added as a dependency
    ai.h2o#sparkling-water-repl_2.11 added as a dependency
    ai.h2o#sparkling-water-ml_2.11 added as a dependency
    :: resolving dependencies :: org.apache.spark#spark-submit-parent;1.0
        confs: [default]
    ......
        ---------------------------------------------------------------------
        |                  |            modules            ||   artifacts   |
        |       conf       | number| search|dwnlded|evicted|| number|dwnlded|
        ---------------------------------------------------------------------
        |      default     |   77  |   0   |   0   |   11  ||   62  |   0   |
        ---------------------------------------------------------------------
    :: retrieving :: org.apache.spark#spark-submit-parent
        confs: [default]
        0 artifacts copied, 62 already retrieved (0kB/26ms)
    Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
    Setting default log level to "WARN".
    To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).
    18/10/28 10:14:19 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
    18/10/28 10:14:26 WARN ObjectStore: Failed to get database global_temp, returning NoSuchObjectException
    Spark context Web UI available at http://***.***.***.***:4040
    Spark context available as 'sc' (master = local[*], app id = local-1540689260706).
    Spark session available as 'spark'.
    Welcome to
          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 2.1.1
          /_/
    Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_181)
    Type in expressions to have them evaluated.
    Type :help for more information.

Below, I re-run the program from the previous posts.


    scala> import org.apache.spark.rdd.RDD
    import org.apache.spark.rdd.RDD
    scala> import org.apache.spark.mllib.regression.LabeledPoint
    import org.apache.spark.mllib.regression.LabeledPoint
    scala> import org.apache.spark.mllib.linalg._
    import org.apache.spark.mllib.linalg._
    scala> import org.apache.spark.mllib.linalg.distributed.RowMatrix
    import org.apache.spark.mllib.linalg.distributed.RowMatrix
    scala> import org.apache.spark.mllib.evaluation._
    import org.apache.spark.mllib.evaluation._
    scala> import org.apache.spark.mllib.tree._
    import org.apache.spark.mllib.tree._
    scala> val rawData = sc.textFile("hdfs://localhost/user/*******/ds/HIGGS.csv")
    rawData: org.apache.spark.rdd.RDD[String] = hdfs://localhost/user/*******/ds/HIGGS.csv MapPartitionsRDD[1] at textFile at <console>:36
    scala> println(s"Number of rows: ${rawData.count}")
    Number of rows: 11000000
    scala> println("Rows")
    Rows
    scala> println(rawData.take(2).mkString("\n"))
    1.000000000000000000e+00,8.692932128906250000e-01,-6.350818276405334473e-01,2.256902605295181274e-01,3.274700641632080078e-01,-6.899932026863098145e-01,7.542022466659545898e-01,-2.485731393098831177e-01,-1.092063903808593750e+00,0.000000000000000000e+00,1.374992132186889648e+00,-6.536741852760314941e-01,9.303491115570068359e-01,1.107436060905456543e+00,1.138904333114624023e+00,-1.578198313713073730e+00,-1.046985387802124023e+00,0.000000000000000000e+00,6.579295396804809570e-01,-1.045456994324922562e-02,-4.576716944575309753e-02,3.101961374282836914e+00,1.353760004043579102e+00,9.795631170272827148e-01,9.780761599540710449e-01,9.200048446655273438e-01,7.216574549674987793e-01,9.887509346008300781e-01,8.766783475875854492e-01
    1.000000000000000000e+00,9.075421094894409180e-01,3.291472792625427246e-01,3.594118654727935791e-01,1.497969865798950195e+00,-3.130095303058624268e-01,1.095530629158020020e+00,-5.575249195098876953e-01,-1.588229775428771973e+00,2.173076152801513672e+00,8.125811815261840820e-01,-2.136419266462326050e-01,1.271014571189880371e+00,2.214872121810913086e+00,4.999939501285552979e-01,-1.261431813240051270e+00,7.321561574935913086e-01,0.000000000000000000e+00,3.987008929252624512e-01,-1.138930082321166992e+00,-8.191101951524615288e-04,0.000000000000000000e+00,3.022198975086212158e-01,8.330481648445129395e-01,9.856996536254882812e-01,9.780983924865722656e-01,7.797321677207946777e-01,9.923557639122009277e-01,7.983425855636596680e-01
    scala> val data = rawData.map(line => line.split(',').map(_.toDouble))
    data: org.apache.spark.rdd.RDD[Array[Double]] = MapPartitionsRDD[2] at map at <console>:38
    scala> val response: RDD[Int] = data.map(row => row(0).toInt)
    response: org.apache.spark.rdd.RDD[Int] = MapPartitionsRDD[3] at map at <console>:40
    scala> val features: RDD[Vector] = data.map(line => Vectors.dense(line.slice(1, line.size)))
    features: org.apache.spark.rdd.RDD[org.apache.spark.mllib.linalg.Vector] = MapPartitionsRDD[4] at map at <console>:40
    scala> val featuresMatrix = new RowMatrix(features)
    featuresMatrix: org.apache.spark.mllib.linalg.distributed.RowMatrix = org.apache.spark.mllib.linalg.distributed.RowMatrix@7a543319
    scala> val featuresSummary = featuresMatrix.computeColumnSummaryStatistics()
    featuresSummary: org.apache.spark.mllib.stat.MultivariateStatisticalSummary = org.apache.spark.mllib.stat.MultivariateOnlineSummarizer@74ddae54
    scala> import com.packtpub.mmlwspark.utils.Tabulizer.table
    import com.packtpub.mmlwspark.utils.Tabulizer.table
    scala> println(s"Higgs Features Mean Values = ${table(featuresSummary.mean, 8)}")
    Higgs Features Mean Values =
    +-----+------+------+------+-----+-----+------+-----+
    |0.991|-0.000|-0.000| 0.999|0.000|0.991|-0.000|0.000|
    |1.000| 0.993|-0.000|-0.000|1.000|0.992| 0.000|0.000|
    |1.000| 0.986|-0.000| 0.000|1.000|1.034| 1.025|1.051|
    |1.010| 0.973| 1.033| 0.960|    -|    -|     -|    -|
    +-----+------+------+------+-----+-----+------+-----+
    scala> println(s"Higgs Features Variance Values = ${table(featuresSummary.variance, 8)}")
    Higgs Features Variance Values =
    +-----+-----+-----+-----+-----+-----+-----+-----+
    |0.320|1.018|1.013|0.360|1.013|0.226|1.019|1.012|
    |1.056|0.250|1.019|1.012|1.101|0.238|1.018|1.013|
    |1.425|0.256|1.015|1.013|1.961|0.455|0.145|0.027|
    |0.158|0.276|0.133|0.098|    -|    -|    -|    -|
    +-----+-----+-----+-----+-----+-----+-----+-----+
    scala> val nonZeros = featuresSummary.numNonzeros
    nonZeros: org.apache.spark.mllib.linalg.Vector = [1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,5605389.0,1.1E7,1.1E7,1.1E7,5476088.0,1.1E7,1.1E7,1.1E7,4734760.0,1.1E7,1.1E7,1.1E7,3869383.0,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7]
    scala> println(s"Non-zero values count per column: ${table(nonZeros, cols = 8, format = "%.0f")}")
    Non-zero values count per column:
    +--------+--------+--------+--------+--------+--------+--------+--------+
    |11000000|11000000|11000000|11000000|11000000|11000000|11000000|11000000|
    | 5605389|11000000|11000000|11000000| 5476088|11000000|11000000|11000000|
    | 4734760|11000000|11000000|11000000| 3869383|11000000|11000000|11000000|
    |11000000|11000000|11000000|11000000|       -|       -|       -|       -|
    +--------+--------+--------+--------+--------+--------+--------+--------+
    scala> val numRows = featuresMatrix.numRows
    numRows: Long = 11000000
    scala> val numCols = featuresMatrix.numCols
    numCols: Long = 28
    scala> val colsWithZeros = nonZeros
    colsWithZeros: org.apache.spark.mllib.linalg.Vector = [1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,5605389.0,1.1E7,1.1E7,1.1E7,5476088.0,1.1E7,1.1E7,1.1E7,4734760.0,1.1E7,1.1E7,1.1E7,3869383.0,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7,1.1E7]
    scala> .toArray
    res6: Array[Double] = Array(1.1E7, 1.1E7, 1.1E7, 1.1E7, 1.1E7, 1.1E7, 1.1E7, 1.1E7, 5605389.0, 1.1E7, 1.1E7, 1.1E7, 5476088.0, 1.1E7, 1.1E7, 1.1E7, 4734760.0, 1.1E7, 1.1E7, 1.1E7, 3869383.0, 1.1E7, 1.1E7, 1.1E7, 1.1E7, 1.1E7, 1.1E7, 1.1E7)
    scala> .zipWithIndex
    res8: Array[(Double, Int)] = Array((1.1E7,0), (1.1E7,1), (1.1E7,2), (1.1E7,3), (1.1E7,4), (1.1E7,5), (1.1E7,6), (1.1E7,7), (5605389.0,8), (1.1E7,9), (1.1E7,10), (1.1E7,11), (5476088.0,12), (1.1E7,13), (1.1E7,14), (1.1E7,15), (4734760.0,16), (1.1E7,17), (1.1E7,18), (1.1E7,19), (3869383.0,20), (1.1E7,21), (1.1E7,22), (1.1E7,23), (1.1E7,24), (1.1E7,25), (1.1E7,26), (1.1E7,27))
    scala> .filter { case (rows, idx) => rows != numRows }
    res9: Array[(Double, Int)] = Array((5605389.0,8), (5476088.0,12), (4734760.0,16), (3869383.0,20))
    scala> val sparsity = nonZeros.toArray.sum / (numRows * numCols)
    sparsity: Double = 0.9210572077922078
    scala> println(f"Data sparsity: ${sparsity}%.2f")
    Data sparsity: 0.92
    scala> val responseValues = response.distinct.collect
    responseValues: Array[Int] = Array(0, 1)
    scala> println(s"Response values: ${responseValues.mkString(", ")}")
    Response values: 0, 1
    scala> val responseDistribution = response.map(v => (v,1)).countByKey
    responseDistribution: scala.collection.Map[Int,Long] = Map(0 -> 5170877, 1 -> 5829123)
    scala> println(s"Response distribution:\n${table(responseDistribution)}")
    Response distribution:
    +-------+-------+
    |      0|      1|
    +-------+-------+
    |5170877|5829123|
    +-------+-------+
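Note that the value printed as `sparsity` in the session is the number of non-zero entries divided by the total number of cells, so 0.92 is the fraction of cells that are non-zero, and only about 8% of the cells are zero. The following is a minimal sketch that reproduces the figure from the per-column counts shown in the output, without Spark. The object name `DensityCheck` and its field names are hypothetical, introduced only for this illustration.

```scala
object DensityCheck {
  // Dimensions reported by featuresMatrix.numRows / numCols in the session.
  val numRows = 11000000L
  val numCols = 28L

  // Non-zero counts of the four columns (indices 8, 12, 16, 20) that contain zeros.
  val partiallyZero: Seq[Long] = Seq(5605389L, 5476088L, 4734760L, 3869383L)

  // Every other column has numRows non-zero values.
  val nonZeroTotal: Long = (numCols - partiallyZero.size) * numRows + partiallyZero.sum

  // Fraction of non-zero cells: this is the quantity the session calls "sparsity".
  val density: Double = nonZeroTotal.toDouble / (numRows * numCols)

  // Fraction of cells that are actually zero.
  val zeroFraction: Double = 1.0 - density

  def main(args: Array[String]): Unit = {
    println(f"Non-zero fraction: $density%.4f")
    println(f"Zero fraction:     $zeroFraction%.4f")
  }
}
```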

From here on, we import the H2O libraries.


    scala> import org.apache.spark.h2o._
    import org.apache.spark.h2o._
    scala> val h2oContext = H2OContext.getOrCreate(sc)
    18/10/28 10:54:53 WARN H2OContext: Method H2OContext.getOrCreate with an argument of type SparkContext is deprecated and parameter of type SparkSession is preferred.
    18/10/28 10:54:53 WARN InternalH2OBackend: Increasing 'spark.locality.wait' to value 30000
    18/10/28 10:54:53 WARN InternalH2OBackend: Due to non-deterministic behavior of Spark broadcast-based joins
    We recommend to disable them by configuring `spark.sql.autoBroadcastJoinThreshold` variable to value `-1`:
    sqlContext.sql("SET spark.sql.autoBroadcastJoinThreshold=-1")
    ........
    10-28 10:54:56.596 ***.***.***.***:54321 25930 #r thread INFO: Open H2O Flow in your web browser: http://***.***.***.***:54321
    10-28 10:54:56.596 ***.***.***.***:54321 25930 #r thread INFO:
    10-28 10:55:00.888 ***.***.***.***:54321 25930 main TRACE: H2OContext initialized
    h2oContext: org.apache.spark.h2o.H2OContext =
    Sparkling Water Context:
     * H2O name: sparkling-water-*****_local-1540689260706
     * cluster size: 1
     * list of used nodes:
      (executorId, host, port)
      ------------------------
      (driver,***.***.***.***,54321)
      ------------------------
      Open H2O Flow in browser: http://***.***.***.***:54321 (CMD + click in Mac OSX)
    scala> val h2oResponse = h2oContext.asH2OFrame(response, "response")
    10-28 10:55:04.691 ***.***.***.***:54321 25930 main INFO: Locking cloud to new members, because water.Lockable
    h2oResponse: org.apache.spark.h2o.H2OFrame = Frame key: response
       cols: 1
       rows: 11000000
     chunks: 60
       size: 1379214
    scala> h2oContext.openFlow
    10-28 10:57:01.408 ***.***.***.***:54321 25930 #2701-205 INFO: GET /, parms: {}
    10-28 10:57:01.454 ***.***.***.***:54321 25930 #2701-205 INFO: GET /flow/index.html, parms: {}
    10-28 10:57:02.190 ***.***.***.***:54321 25930 #2701-205 INFO: GET /flow/index.html, parms: {}
    10-28 10:57:02.201 ***.***.***.***:54321 25930 #2701-205 INFO: GET /3/Metadata/endpoints, parms: {}
    10-28 10:57:02.604 ***.***.***.***:54321 25930 #2701-205 INFO: GET /3/NodePersistentStorage/notebook, parms: {}
    10-28 10:57:02.604 ***.***.***.***:54321 25930 #2701-203 INFO: GET /3/NodePersistentStorage/categories/environment/names/clips/exists, parms: {}
    10-28 10:57:02.608 ***.***.***.***:54321 25930 #2701-206 INFO: GET /flow/help/catalog.json, parms: {}
    10-28 10:57:02.609 ***.***.***.***:54321 25930 #2701-207 INFO: GET /3/About, parms: {}
    10-28 10:57:02.612 ***.***.***.***:54321 25930 #2701-208 INFO: GET /3/ModelBuilders, parms: {}
    10-28 10:57:02.616 ***.***.***.***:54321 25930 #2701-209 INFO: POST /3/scalaint, parms: {}
    10-28 10:57:02.637 ***.***.***.***:54321 25930 #2701-208 INFO: Found XGBoost backend with library: xgboost4j
    10-28 10:57:03.004 ***.***.***.***:54321 25930 #2701-205 INFO: GET /flow/fonts/fontawesome-webfont.woff, parms: {v=4.2.0}
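The first warning in the log says that passing a `SparkContext` to `H2OContext.getOrCreate` is deprecated in favour of a `SparkSession`, and the third warning recommends disabling broadcast-based joins. A sketch of how the same step might look inside this `spark-shell` session follows. It assumes the `spark` session object that the shell provides and the Sparkling Water 2.1.12 packages loaded at startup. It is a fragment for the shell rather than a standalone program, and it was not run as part of the original session.

```scala
import org.apache.spark.h2o._

// Assumption: `spark` is the SparkSession created by spark-shell.
// Follow the warning's advice and disable broadcast-based joins for this session.
spark.conf.set("spark.sql.autoBroadcastJoinThreshold", "-1")

// Pass the SparkSession instead of the SparkContext, as the deprecation warning suggests.
val h2oContext = H2OContext.getOrCreate(spark)
```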

The H2O UI (H2O Flow) opens in the web browser.


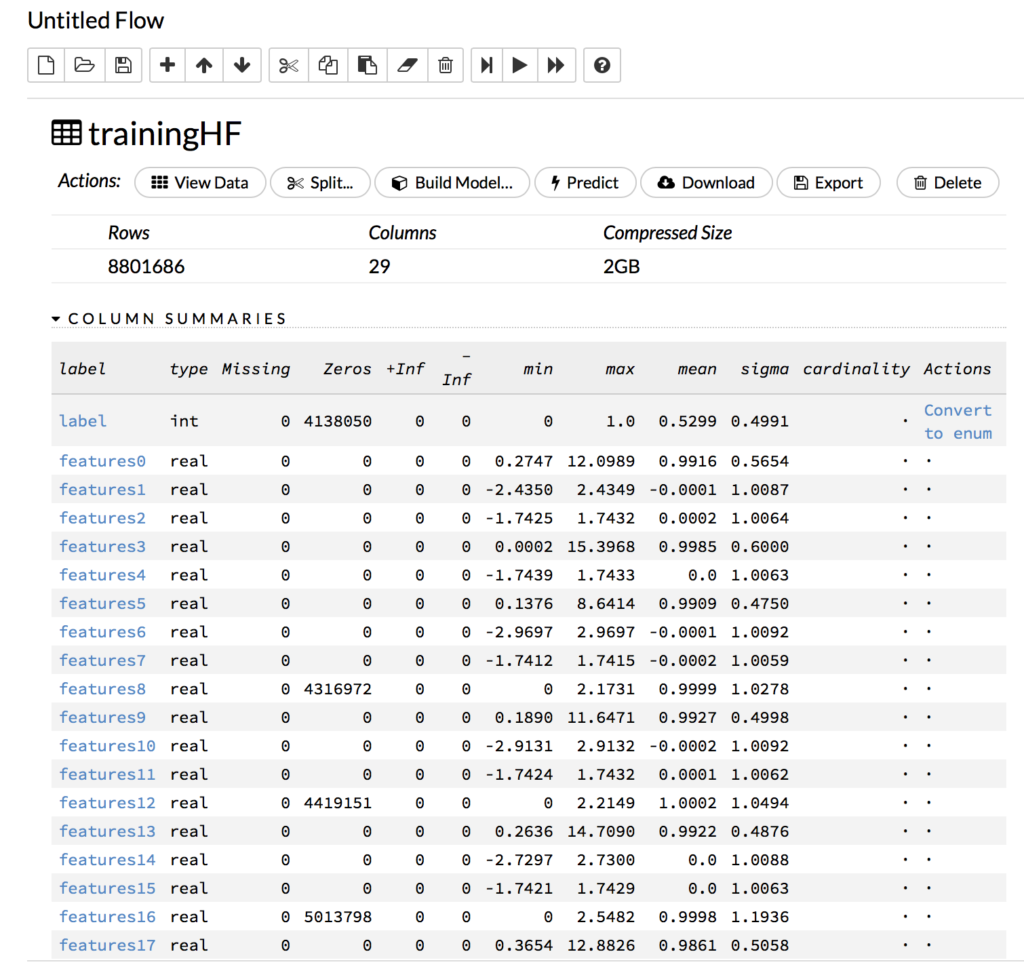

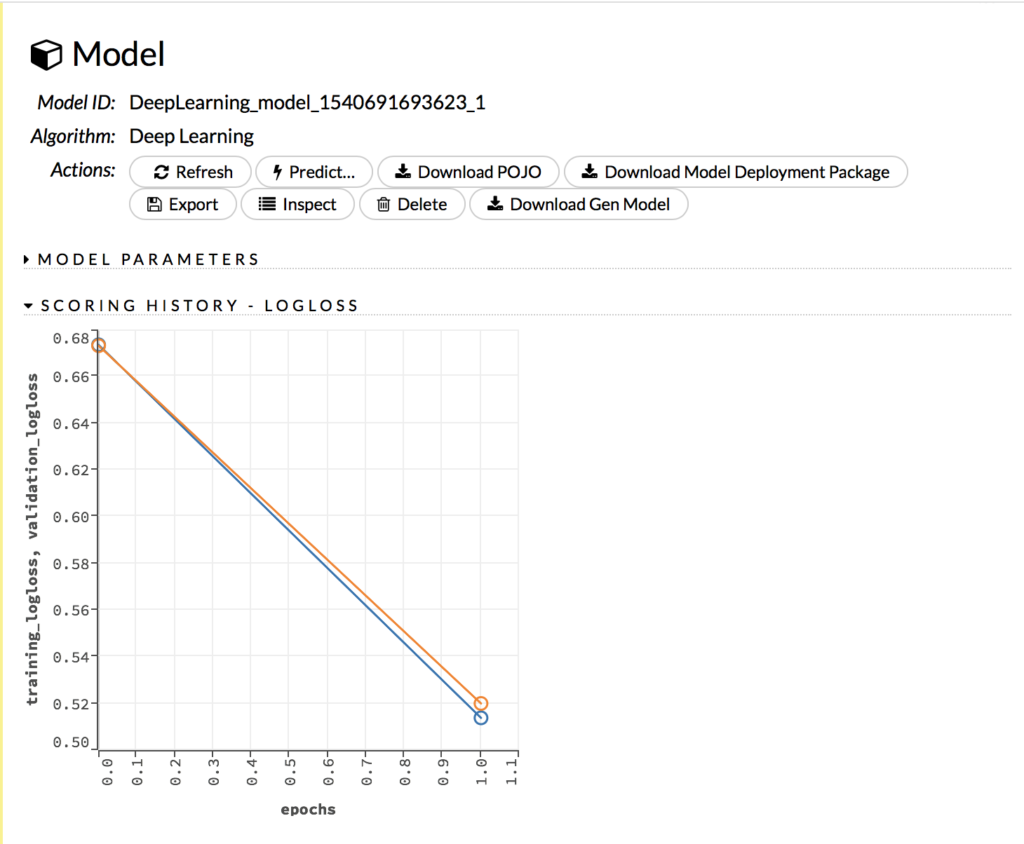

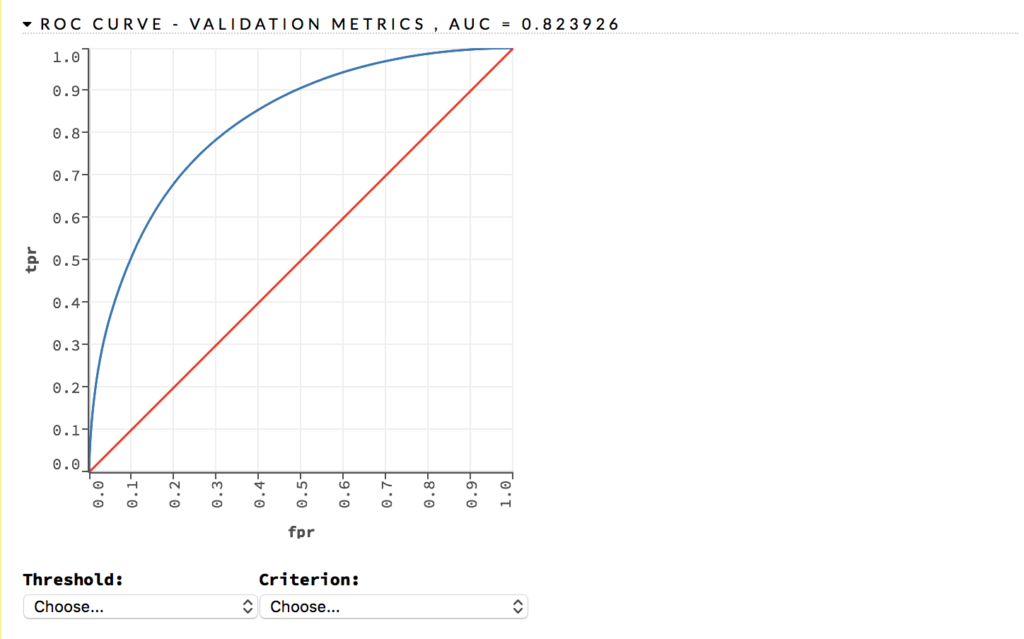

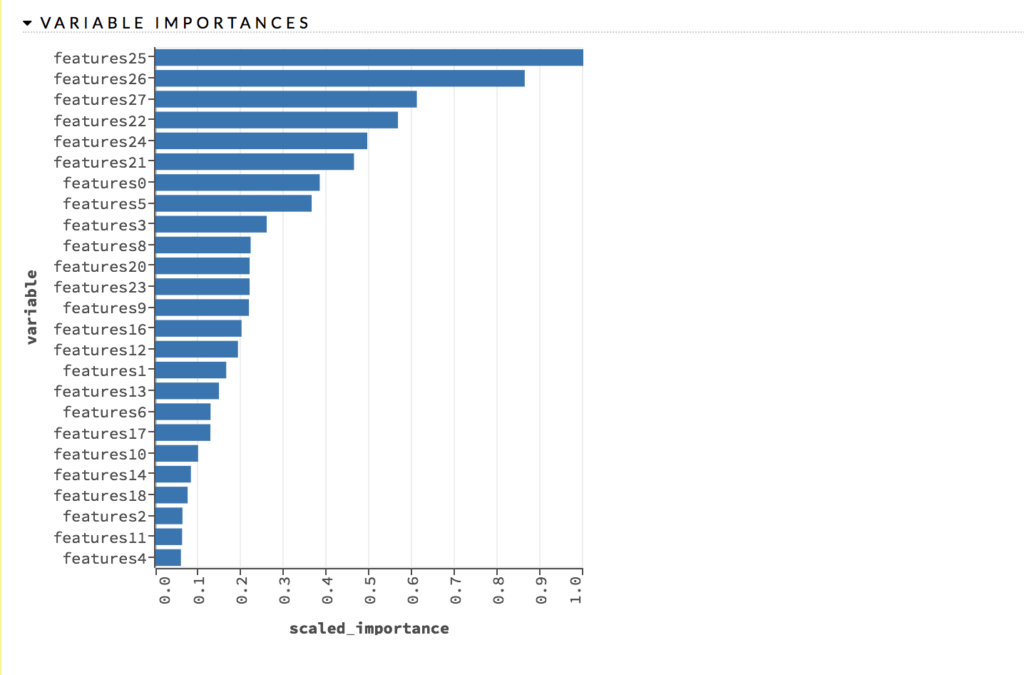

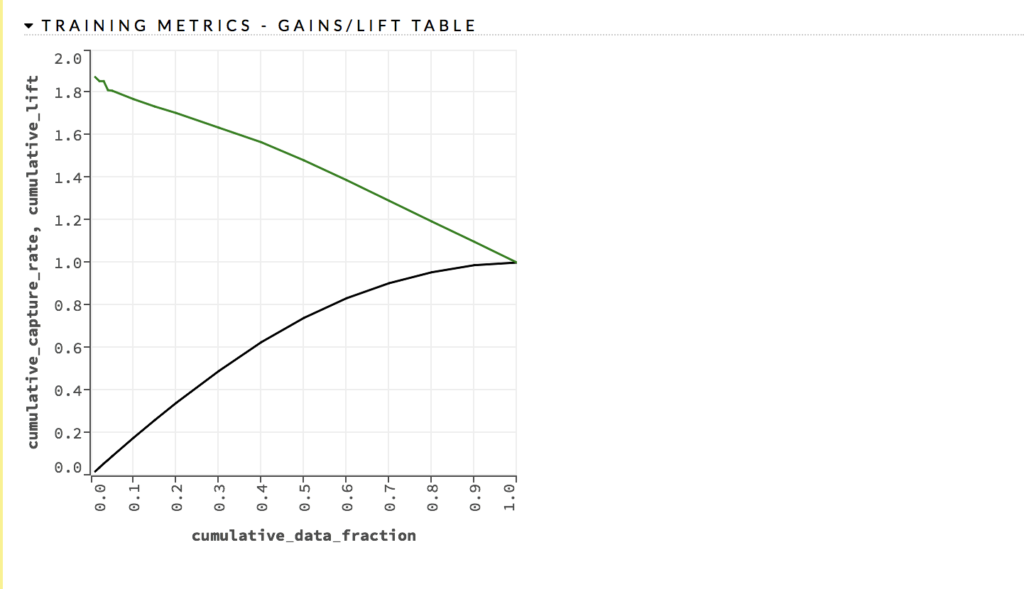

    scala> val higgs = response.zip(features).map { case (response, features) => LabeledPoint(response, features) }
    higgs: org.apache.spark.rdd.RDD[org.apache.spark.mllib.regression.LabeledPoint] = MapPartitionsRDD[23] at map at <console>:48
    scala> higgs.setName("higgs").cache()
    res30: higgs.type = higgs MapPartitionsRDD[23] at map at <console>:48
    scala> val trainTestSplits = higgs.randomSplit(Array(0.8, 0.2))
    trainTestSplits: Array[org.apache.spark.rdd.RDD[org.apache.spark.mllib.regression.LabeledPoint]] = Array(MapPartitionsRDD[24] at randomSplit at <console>:50, MapPartitionsRDD[25] at randomSplit at <console>:50)
    scala> val (trainingData, testData) = (trainTestSplits(0), trainTestSplits(1))
    trainingData: org.apache.spark.rdd.RDD[org.apache.spark.mllib.regression.LabeledPoint] = MapPartitionsRDD[24] at randomSplit at <console>:50
    testData: org.apache.spark.rdd.RDD[org.apache.spark.mllib.regression.LabeledPoint] = MapPartitionsRDD[25] at randomSplit at <console>:50
    scala> val trainingHF = h2oContext.asH2OFrame(trainingData.toDF, "trainingHF")
    trainingHF: org.apache.spark.h2o.H2OFrame = Frame key: trainingHF
       cols: 29
       rows: 8801686
     chunks: 60
       size: 1761563557
    scala> val testHF = h2oContext.asH2OFrame(testData.toDF, "testHF")
    testHF: org.apache.spark.h2o.H2OFrame = Frame key: testHF
       cols: 29
       rows: 2198314
     chunks: 60
       size: 440063739
    scala> 10-28 11:06:29.799 ***.***.***.***:54321 25930 #2701-210 INFO: GET /3/Frames, parms: {}
    10-28 11:06:51.462 ***.***.***.***:54321 25930 #2701-210 INFO: GET /3/Frames/trainingHF/summary, parms: {_exclude_fields=frames/columns/data,frames/columns/domain,frames/columns/histogram_bins,frames/columns/percentiles, column_offset=0, column_count=20}
    10-28 11:06:53.083 ***.***.***.***:54321 25930 #2701-210 INFO: GET /flow/fonts/SourceCodePro-It.ttf.woff2, parms: {}
    scala> trainingHF.replace(0, trainingHF.vecs()(0).toCategoricalVec).remove()
    scala> trainingHF.update()
    res32: water.fvec.Frame = Frame key: trainingHF
       cols: 29
       rows: 8801686
     chunks: 60
       size: 1761583393
    scala> testHF.replace(0, testHF.vecs()(0).toCategoricalVec).remove()
    scala> testHF.update()
    res34: water.fvec.Frame = Frame key: testHF
       cols: 29
       rows: 2198314
     chunks: 60
       size: 440083575
    scala> import _root_.hex.deeplearning._
    import _root_.hex.deeplearning._
    scala> import _root_.hex.deeplearning.DeepLearningModel.DeepLearningParameters
    import _root_.hex.deeplearning.DeepLearningModel.DeepLearningParameters
    scala> import _root_.hex.deeplearning.DeepLearningModel.DeepLearningParameters.Activation
    import _root_.hex.deeplearning.DeepLearningModel.DeepLearningParameters.Activation
    scala> val dlParams = new DeepLearningParameters()
    dlParams: hex.deeplearning.DeepLearningModel.DeepLearningParameters = hex.deeplearning.DeepLearningModel$DeepLearningParameters@34bb4e23
    scala> dlParams._train = trainingHF._key
    dlParams._train: water.Key[water.fvec.Frame] = trainingHF
    scala> dlParams._valid = testHF._key
    dlParams._valid: water.Key[water.fvec.Frame] = testHF
    scala> dlParams._response_column = "label"
    dlParams._response_column: String = label
    scala> dlParams._epochs = 1
    dlParams._epochs: Double = 1.0
    scala> dlParams._activation = Activation.RectifierWithDropout
    dlParams._activation: hex.deeplearning.DeepLearningModel.DeepLearningParameters.Activation = RectifierWithDropout
    scala> dlParams._hidden = Array[Int](500, 500, 500)
    dlParams._hidden: Array[Int] = [I@756ef1a5
    scala> val dlBuilder = new DeepLearning(dlParams)
    dlBuilder: hex.deeplearning.DeepLearning = hex.deeplearning.DeepLearning@2e1933b6
    scala> val dlModel = dlBuilder.trainModel.get
    10-28 11:10:44.844 ***.***.***.***:54321 25930 FJ-1-3 INFO: Building H2O DeepLearning model with these parameters:
    10-28 11:10:44.920 ***.***.***.***:54321 25930 FJ-1-3 INFO: {"_train":{"name":"trainingHF","type":"Key"},"_valid":{"name":"testHF","type":"Key"},"_nfolds":0,"_keep_cross_validation_predictions":false,"_keep_cross_validation_fold_assignment":false,"_parallelize_cross_validation":true,"_auto_rebalance":true,"_seed":-1,"_fold_assignment":"AUTO","_categorical_encoding":"AUTO","_max_categorical_levels":10,"_distribution":"AUTO","_tweedie_power":1.5,"_quantile_alpha":0.5,"_huber_alpha":0.9,"_ignored_columns":null,"_ignore_const_cols":true,"_weights_column":null,"_offset_column":null,"_fold_column":null,"_is_cv_model":false,"_score_each_iteration":false,"_max_runtime_secs":0.0,"_stopping_rounds":5,"_stopping_metric":"AUTO","_stopping_tolerance":0.0,"_response_column":"label","_balance_classes":false,"_max_after_balance_size":5.0,"_class_sampling_factors":null,"_max_confusion_matrix_size":20,"_checkpoint":null,"_pretrained_autoencoder":null,"_overwrite_with_best_model":true,"_autoencoder":false,"_use_all_factor_levels":true,"_standardize":true,"_activation":"RectifierWithDropout","_hidden":[500,500,500],"_epochs":1.0,"_train_samples_per_iteration":-2,"_target_ratio_comm_to_comp":0.05,"_adaptive_rate":true,"_rho":0.99,"_epsilon":1.0E-8,"_rate":0.005,"_rate_annealing":1.0E-6,"_rate_decay":1.0,"_momentum_start":0.0,"_momentum_ramp":1000000.0,"_momentum_stable":0.0,"_nesterov_accelerated_gradient":true,"_input_dropout_ratio":0.0,"_hidden_dropout_ratios":null,"_l1":0.0,"_l2":0.0,"_max_w2":3.4028235E38,"_initial_weight_distribution":"UniformAdaptive","_initial_weight_scale":1.0,"_initial_weights":null,"_initial_biases":null,"_loss":"Automatic","_score_interval":5.0,"_score_training_samples":10000,"_score_validation_samples":0,"_score_duty_cycle":0.1,"_classification_stop":0.0,"_regression_stop":1.0E-6,"_quiet_mode":false,"_score_validation_sampling":"Uniform","_diagnostics":true,"_variable_importances":true,"_fast_mode":true,"_force_load_balance":true,"_replicate_training_data":true,"_single_node_mode":false,"_shuffle_training_data":false,"_missing_values_handling":"MeanImputation","_sparse":false,"_col_major":false,"_average_activation":0.0,"_sparsity_beta":0.0,"_max_categorical_features":2147483647,"_reproducible":false,"_export_weights_and_biases":false,"_elastic_averaging":false,"_elastic_averaging_moving_rate":0.9,"_elastic_averaging_regularization":0.001,"_mini_batch_size":1}
    10-28 11:10:45.000 ***.***.***.***:54321 25930 FJ-1-3 INFO: Dataset already contains 60 chunks. No need to rebalance.
    10-28 11:10:45.000 ***.***.***.***:54321 25930 FJ-1-3 INFO: Dataset already contains 60 chunks. No need to rebalance.
    10-28 11:10:47.478 ***.***.***.***:54321 25930 FJ-1-3 INFO: Building the model on 28 numeric features and 0 (one-hot encoded) categorical features.
    10-28 11:10:47.481 ***.***.***.***:54321 25930 FJ-1-3 INFO: _hidden_dropout_ratios: Automatically setting all hidden dropout ratios to 0.5.
    10-28 11:10:47.481 ***.***.***.***:54321 25930 FJ-1-3 INFO: _replicate_training_data: Disabling replicate_training_data on 1 node.
    10-28 11:10:47.482 ***.***.***.***:54321 25930 FJ-1-3 INFO: _categorical_encoding: Automatically enabling OneHotInternal categorical encoding.
    10-28 11:10:47.482 ***.***.***.***:54321 25930 FJ-1-3 INFO: _adaptive_rate: Using automatic learning rate. Ignoring the following input parameters: rate, rate_decay, rate_annealing, momentum_start, momentum_ramp, momentum_stable.
    10-28 11:10:47.687 ***.***.***.***:54321 25930 FJ-1-3 INFO: Created random initial model state.
    10-28 11:10:47.687 ***.***.***.***:54321 25930 FJ-1-3 INFO: Model category: Classification
    10-28 11:10:47.689 ***.***.***.***:54321 25930 FJ-1-3 INFO: Number of model parameters (weights/biases): 516,502
    10-28 11:10:47.712 ***.***.***.***:54321 25930 FJ-1-3 INFO: One epoch corresponds to 8801686 training data rows.
    10-28 11:10:48.073 ***.***.***.***:54321 25930 FJ-1-3 INFO: Number of chunks of the training data: 60
    10-28 11:10:48.073 ***.***.***.***:54321 25930 FJ-1-3 INFO: Number of chunks of the validation data: 60
    10-28 11:10:48.128 ***.***.***.***:54321 25930 FJ-1-3 INFO: Auto-tuning parameter 'train_samples_per_iteration':
    10-28 11:10:48.128 ***.***.***.***:54321 25930 FJ-1-3 INFO: Estimated compute power : 6 GFlops
    10-28 11:10:48.128 ***.***.***.***:54321 25930 FJ-1-3 INFO: Estimated time for comm : 10.584 msec
    10-28 11:10:48.128 ***.***.***.***:54321 25930 FJ-1-3 INFO: Estimated time per row : 1.082 msec
    10-28 11:10:48.129 ***.***.***.***:54321 25930 FJ-1-3 INFO: Estimated training speed: 924 rows/sec
    10-28 11:10:48.129 ***.***.***.***:54321 25930 FJ-1-3 INFO: Setting train_samples_per_iteration (-2) to auto-tuned value: 9240
    10-28 11:10:48.139 ***.***.***.***:54321 25930 FJ-1-3 INFO: Total setup time: 3.302 sec
    10-28 11:10:48.139 ***.***.***.***:54321 25930 FJ-1-3 INFO: Starting to train the Deep Learning model.
    10-28 11:10:58.171 ***.***.***.***:54321 25930 FJ-1-3 INFO: Scoring the model.
    10-28 11:10:58.193 ***.***.***.***:54321 25930 FJ-1-3 INFO: Status of Neuron Layers (predicting label, 2-class classification, bernoulli distribution, CrossEntropy loss, 516,502 weights/biases, 5.9 MB, 9,297 training samples, mini-batch size 1):
    10-28 11:10:58.193 ***.***.***.***:54321 25930 FJ-1-3 INFO: Layer Units             Type Dropout       L1       L2 Mean Rate Rate RMS Momentum Mean Weight Weight RMS Mean Bias Bias RMS
    10-28 11:10:58.193 ***.***.***.***:54321 25930 FJ-1-3 INFO:     1    28            Input  0.00 %
    10-28 11:10:58.193 ***.***.***.***:54321 25930 FJ-1-3 INFO:     2   500 RectifierDropout 50.00 % 0.000000 0.000000  0.023864 0.005227 0.000000   -0.000594   0.064161  0.480197 0.024219
    10-28 11:10:58.193 ***.***.***.***:54321 25930 FJ-1-3 INFO:     3   500 RectifierDropout 50.00 % 0.000000 0.000000  0.018674 0.005700 0.000000   -0.003234   0.047685  0.982279 0.040789
    10-28 11:10:58.193 ***.***.***.***:54321 25930 FJ-1-3 INFO:     4   500 RectifierDropout 50.00 % 0.000000 0.000000  0.016836 0.033811 0.000000   -0.006638   0.046928  0.971871 0.010261
    10-28 11:10:58.193 ***.***.***.***:54321 25930 FJ-1-3 INFO:     5     2          Softmax         0.000000 0.000000  0.001384 0.000508 0.000000    0.006201   0.235411  0.000427 0.078857
    10-28 11:12:33.979 ***.***.***.***:54321 25930 #e Thread WARN: Swapping! GC CALLBACK, (K/V:2.07 GB + POJO:3.33 GB + FREE:1.71 GB == MEM_MAX:7.11 GB), desiredKV=910.3 MB OOM!
    ...............
    10-28 11:23:03.736 ***.***.***.***:54321 25930 #e Thread WARN: Swapping! GC CALLBACK, (K/V:2.10 GB + POJO:3.33 GB + FREE:1.69 GB == MEM_MAX:7.11 GB), desiredKV=910.3 MB OOM!
    10-28 11:23:10.893 ***.***.***.***:54321 25930 FJ-1-3 INFO: Computing variable importances.
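The figure of 516,502 weights and biases reported in the log can be checked by hand from the layer sizes in the status table: 28 inputs, three hidden layers of 500 units and 2 outputs. The sketch below assumes every layer is fully connected to the next with one bias per unit, which is consistent with the reported total. The object name `DlParamCount` and its fields are hypothetical names used only for this illustration, and running it prints `14500 + 250500 + 250500 + 1002 = 516502`.

```scala
object DlParamCount {
  // Layer widths taken from the log: input, three hidden layers, softmax output.
  val layerSizes: Seq[Int] = Seq(28, 500, 500, 500, 2)

  // Each pair of adjacent layers contributes in * out weights plus out biases.
  val perLayer: Seq[Int] =
    layerSizes.zip(layerSizes.tail).map { case (in, out) => in * out + out }

  // Total number of trainable parameters.
  val total: Int = perLayer.sum

  def main(args: Array[String]): Unit = {
    println(perLayer.mkString(" + ") + " = " + total)
  }
}
```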
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Model Metrics Type: Binomial
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Description: Metrics reported on temporary training frame with 10034 samples
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: model id: DeepLearning_model_1540691693623_1
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: frame id: trainingHF.temporary.sample.0.11%
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: MSE: 0.24041794
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: RMSE: 0.49032432
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: AUC: 0.6487153
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: logloss: 0.6734272
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: mean_per_class_error: 0.4451185
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: default threshold: 0.5109130144119263
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class):
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO:            0     1   Error             Rate
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO:      0   726  3999  0.8463    3,999 / 4,725
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO:      1   233  5076  0.0439      233 / 5,309
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Totals   959  9075  0.4218   4,232 / 10,034
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Gains/Lift Table (Avg response rate: 52.91 %):
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Group  Cumulative Data Fraction  Lower Threshold  Lift  Cumulative Lift  Response Rate  Cumulative Response Rate  Capture Rate  Cumulative Capture Rate  Gain  Cumulative Gain
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1  0.01006578  0.714229  1.665444  1.665444  0.881188  0.881188  0.016764  0.016764  66.544388  66.544388
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2  0.02003189  0.700567  1.549798  1.607909  0.820000  0.850746  0.015445  0.032209  54.979846  60.790885
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 3  0.02999801  0.693275  1.341899  1.519533  0.710000  0.803987  0.013374  0.045583  34.189866  51.953337
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 4  0.04006378  0.688087  1.459603  1.504476  0.772277  0.796020  0.014692  0.060275  45.960251  50.447611
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 5  0.05002990  0.683851  1.398599  1.483385  0.740000  0.784861  0.013939  0.074214  39.859861  48.338498
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 6  0.10005980  0.669399  1.291373  1.387379  0.683267  0.734064  0.064607  0.138821  29.137321  38.737910
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 7  0.14999003  0.659352  1.256226  1.343720  0.664671  0.710963  0.062724  0.201545  25.622629  34.371959
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 8  0.20001993  0.651498  1.208545  1.309909  0.639442  0.693074  0.060463  0.262008  20.854461  30.990901
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 9  0.29998007  0.637835  1.136260  1.252045  0.601196  0.662458  0.113581  0.375589  13.626008  25.204526
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 10  0.40003986  0.624735  1.144541  1.225156  0.605578  0.648231  0.114523  0.490111  14.454069  22.515573
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 11  0.50000000  0.611183  1.062771  1.192692  0.562313  0.631054  0.106235  0.596346  6.277063  19.269166
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 12  0.59996014  0.595890  1.000587  1.160685  0.529412  0.614120  0.100019  0.696365  0.058724  16.068489
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 13  0.70001993  0.577300  0.969471  1.133353  0.512948  0.599658  0.097005  0.793370  -3.052885  13.335308
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 14  0.79998007  0.552717  0.878105  1.101459  0.464606  0.582783  0.087775  0.881145  -12.189519  10.145897
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 15  0.89994020  0.513171  0.710398  1.058022  0.375872  0.559801  0.071011  0.952157  -28.960190  5.802220
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 16  1.00000000  0.157244  0.478147  1.000000  0.252988  0.529101  0.047843  1.000000  -52.185307  0.000000
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Model Metrics Type: Binomial
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Description: Metrics reported on full validation frame
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: model id: DeepLearning_model_1540691693623_1
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: frame id: testHF
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: MSE: 0.24023864
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: RMSE: 0.49014145
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: AUC: 0.6473551
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: logloss: 0.6730615
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: mean_per_class_error: 0.44070113
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: default threshold: 0.516148567199707
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class):
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO:              0        1   Error                   Rate
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO:      0  173883   858944  0.8316    858,944 / 1,032,827
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO:      1   57993  1107494  0.0498     57,993 / 1,165,487
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Totals  231876  1966438  0.4171    916,937 / 2,198,314
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Gains/Lift Table (Avg response rate: 53.02 %):
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Group  Cumulative Data Fraction  Lower Threshold  Lift  Cumulative Lift  Response Rate  Cumulative Response Rate  Capture Rate  Cumulative Capture Rate  Gain  Cumulative Gain
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1  0.01000039  0.711305  1.650919  1.650919  0.875273  0.875273  0.016510  0.016510  65.091908  65.091908
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2  0.02000033  0.699189  1.492261  1.571592  0.791157  0.833216  0.014923  0.031432  49.226124  57.159196
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 3  0.03000026  0.692037  1.424907  1.522698  0.755447  0.807293  0.014249  0.045681  42.490699  52.269772
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 4  0.04000020  0.686854  1.388956  1.489263  0.736387  0.789567  0.013889  0.059571  38.895613  48.926270
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 5  0.05000014  0.682738  1.362872  1.463985  0.722558  0.776165  0.013629  0.073199  36.287244  46.398488
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 6  0.10000027  0.668663  1.315601  1.389793  0.697496  0.736831  0.065780  0.138980  31.560096  38.979292
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 7  0.14999995  0.659091  1.248327  1.342638  0.661830  0.711831  0.062416  0.201396  24.832732  34.263801
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 8  0.20000009  0.651271  1.207904  1.308954  0.640398  0.693972  0.060395  0.261791  20.790378  30.895437
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 9  0.29999991  0.637659  1.160564  1.259491  0.615300  0.667748  0.116056  0.377847  16.056418  25.949105
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 10  0.40000018  0.624659  1.106908  1.221345  0.586853  0.647524  0.110691  0.488538  10.690765  22.134511
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 11  0.50000000  0.611020  1.061232  1.189323  0.562637  0.630547  0.106123  0.594661  6.123213  18.932258
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 12  0.59999982  0.595739  1.010223  1.159473  0.535593  0.614721  0.101022  0.695683  1.022332  15.947274
    10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 13  0.70000009  0.577638  0.951814  1.129807  0.504626
0.598993 0.095182 0.790865 -4.818589 12.980715 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 14 0.79999991 0.553441 0.876185 1.098104 0.464530 0.582185 0.087618 0.878483 -12.381532 9.810439 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 15 0.89999973 0.512547 0.753120 1.059773 0.399284 0.561863 0.075312 0.953795 -24.687998 5.977286 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 16 1.00000000 0.071608 0.462046 1.000000 0.244964 0.530173 0.046205 1.000000 -53.795407 0.000000 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Variable Importances: 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Variable Relative Importance Scaled Importance Percentage 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features25 1.000000 1.000000 0.042259 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features5 0.961014 0.961014 0.040612 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features27 0.950141 0.950141 0.040152 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features3 0.908379 0.908379 0.038387 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features12 0.874357 0.874357 0.036950 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features23 0.872185 0.872185 0.036858 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features2 0.863494 0.863494 0.036490 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features17 0.856389 0.856389 0.036190 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features13 0.854462 0.854462 0.036109 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features24 0.849487 0.849487 0.035899 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: --- 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features16 0.816568 0.816568 0.034507 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features15 0.810991 0.810991 0.034272 10-28 11:23:11.092 ***.***.***.***:54321 
25930 FJ-1-3 INFO: features14 0.808711 0.808711 0.034175 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features11 0.803530 0.803530 0.033956 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features6 0.801766 0.801766 0.033882 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features4 0.799988 0.799988 0.033807 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features22 0.798922 0.798922 0.033762 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features19 0.795052 0.795052 0.033598 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features21 0.786645 0.786645 0.033243 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: features18 0.777682 0.777682 0.032864 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Status of Neuron Layers (predicting label, 2-class classification, bernoulli distribution, CrossEntropy loss, 516,502 weights/biases, 5.9 MB, 9,297 training samples, mini-batch size 1): 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Layer Units Type Dropout L1 L2 Mean Rate Rate RMS Momentum Mean Weight Weight RMS Mean Bias Bias RMS 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1 28 Input 0.00 % 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2 500 RectifierDropout 50.00 % 0.000000 0.000000 0.023864 0.005227 0.000000 -0.000594 0.064161 0.480197 0.024219 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 3 500 RectifierDropout 50.00 % 0.000000 0.000000 0.018674 0.005700 0.000000 -0.003234 0.047685 0.982279 0.040789 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 4 500 RectifierDropout 50.00 % 0.000000 0.000000 0.016836 0.033811 0.000000 -0.006638 0.046928 0.971871 0.010261 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 5 2 Softmax 0.000000 0.000000 0.001384 0.000508 0.000000 0.006201 0.235411 0.000427 0.078857 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: Scoring History: 10-28 11:23:11.092 
***.***.***.***:54321 25930 FJ-1-3 INFO: Timestamp Duration Training Speed Epochs Iterations Samples Training RMSE Training LogLoss Training AUC Training Lift Training Classification Error Validation RMSE Validation LogLoss Validation AUC Validation Lift Validation Classification Error 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2018-10-28 11:10:47 0.000 sec 0.00000 0 0.000000 NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN 10-28 11:23:11.092 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2018-10-28 11:10:58 12 min 26.167 sec 931 obs/sec 0.00106 1 9297.000000 0.49032 0.67343 0.64872 1.66544 0.42177 0.49014 0.67306 0.64736 1.65092 0.41711 10-28 11:23:11.095 ***.***.***.***:54321 25930 FJ-1-3 INFO: Time taken for scoring and diagnostics: 12 min 12.887 sec 10-28 11:23:11.095 ***.***.***.***:54321 25930 FJ-1-3 INFO: Training time: 12 min 26.268 sec (scoring: 12 min 12.887 sec). Processed 9,297 samples (0.001 epochs). 10-28 11:23:11.095 ***.***.***.***:54321 25930 FJ-1-3 INFO: Iterations: 1. Epochs: 0.00105627. Speed: 922 samples/sec. 10-28 11:23:20.028 ***.***.***.***:54321 25930 #e Thread WARN: Swapping! GC CALLBACK, (K/V:2.08 GB + POJO:3.33 GB + FREE:1.71 GB == MEM_MAX:7.11 GB), desiredKV=910.3 MB OOM! 10-28 11:23:23.662 ***.***.***.***:54321 25930 FJ-1-3 INFO: Training time: 12 min 38.835 sec (scoring: 12 min 12.887 sec). Processed 18,551 samples (0.002 epochs). ...... 10-28 12:25:37.216 ***.***.***.***:54321 25930 FJ-1-3 INFO: Training time: 1:14:52.389 (scoring: 12 min 12.887 sec). Processed 8,798,219 samples (1.000 epochs). 10-28 12:25:37.216 ***.***.***.***:54321 25930 FJ-1-3 INFO: Iterations: 952. Epochs: 0.999606. Speed: 2,342 samples/sec. Estimated time left: 7.385 sec 10-28 12:25:39.941 ***.***.***.***:54321 25930 #e Thread WARN: Swapping! GC CALLBACK, (K/V:2.08 GB + POJO:3.33 GB + FREE:1.70 GB == MEM_MAX:7.11 GB), desiredKV=910.3 MB OOM! 10-28 12:25:42.540 ***.***.***.***:54321 25930 FJ-1-3 INFO: Scoring the model. 
10-28 12:25:42.552 ***.***.***.***:54321 25930 FJ-1-3 INFO: Status of Neuron Layers (predicting label, 2-class classification, bernoulli distribution, CrossEntropy loss, 516,502 weights/biases, 5.9 MB, 8,807,558 training samples, mini-batch size 1): 10-28 12:25:42.552 ***.***.***.***:54321 25930 FJ-1-3 INFO: Layer Units Type Dropout L1 L2 Mean Rate Rate RMS Momentum Mean Weight Weight RMS Mean Bias Bias RMS 10-28 12:25:42.552 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1 28 Input 0.00 % 10-28 12:25:42.552 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2 500 RectifierDropout 50.00 % 0.000000 0.000000 0.005289 0.002722 0.000000 -0.014167 0.325117 -0.823028 0.329013 10-28 12:25:42.552 ***.***.***.***:54321 25930 FJ-1-3 INFO: 3 500 RectifierDropout 50.00 % 0.000000 0.000000 0.014575 0.013544 0.000000 -0.057675 0.122358 0.040999 0.388743 10-28 12:25:42.552 ***.***.***.***:54321 25930 FJ-1-3 INFO: 4 500 RectifierDropout 50.00 % 0.000000 0.000000 0.043309 0.038748 0.000000 -0.022375 0.112984 -0.475442 0.620491 10-28 12:25:42.552 ***.***.***.***:54321 25930 FJ-1-3 INFO: 5 2 Softmax 0.000000 0.000000 0.002478 0.001711 0.000000 0.006162 0.193755 -0.019024 0.100142 10-28 12:39:31.472 ***.***.***.***:54321 25930 FJ-1-3 INFO: Computing variable importances. 10-28 12:39:31.553 ***.***.***.***:54321 25930 FJ-1-3 INFO: Time taken for scoring and diagnostics: 13 min 48.972 sec 10-28 12:39:31.553 ***.***.***.***:54321 25930 FJ-1-3 INFO: Training time: 1:28:46.726 (scoring: 26 min 1.859 sec). Processed 8,807,558 samples (1.001 epochs). 10-28 12:39:31.554 ***.***.***.***:54321 25930 FJ-1-3 INFO: Iterations: 953. Epochs: 1.00067. Speed: 2,341 samples/sec. 
Estimated time left: 3.152 sec 10-28 12:39:31.561 ***.***.***.***:54321 25930 FJ-1-3 INFO: ============================================================================================================================================================================== 10-28 12:39:31.561 ***.***.***.***:54321 25930 FJ-1-3 INFO: Finished training the Deep Learning model. 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Model Metrics Type: Binomial 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Description: Metrics reported on temporary training frame with 10034 samples 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: model id: DeepLearning_model_1540691693623_1 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: frame id: trainingHF.temporary.sample.0.11% 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: MSE: 0.17058964 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: RMSE: 0.413025 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: AUC: 0.8325065 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: logloss: 0.5137846 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: mean_per_class_error: 0.2561099 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: default threshold: 0.47307735681533813 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class): 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 0 1 Error Rate 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 0 3031 1694 0.3585 1,694 / 4,725 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1 816 4493 0.1537 816 / 5,309 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Totals 3847 6187 0.2501 2,510 / 10,034 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Gains/Lift Table (Avg response rate: 52.91 %): 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Group Cumulative Data Fraction 
Lower Threshold Lift Cumulative Lift Response Rate Cumulative Response Rate Capture Rate Cumulative Capture Rate Gain Cumulative Gain 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1 0.01006578 0.938660 1.871285 1.871285 0.990099 0.990099 0.018836 0.018836 87.128526 87.128526 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2 0.02003189 0.924062 1.833298 1.852386 0.970000 0.980100 0.018271 0.037107 83.329817 85.238621 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 3 0.02999801 0.911974 1.852198 1.852324 0.980000 0.980066 0.018459 0.055566 85.219815 85.232374 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 4 0.04006378 0.902853 1.684157 1.810073 0.891089 0.957711 0.016952 0.072518 68.415674 81.007282 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 5 0.05002990 0.894835 1.795498 1.807170 0.950000 0.956175 0.017894 0.090413 79.549821 80.716951 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 6 0.10005980 0.847356 1.728106 1.767638 0.914343 0.935259 0.086457 0.176869 72.810585 76.763768 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 7 0.14999003 0.801989 1.663651 1.733022 0.880240 0.916944 0.083066 0.259936 66.365104 73.302153 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 8 0.20001993 0.753095 1.611393 1.702599 0.852590 0.900847 0.080618 0.340554 61.139282 70.259920 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 9 0.29998007 0.665526 1.496170 1.633812 0.791625 0.864452 0.149557 0.490111 49.616999 63.381233 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 10 0.40003986 0.603491 1.359142 1.565111 0.719124 0.828102 0.135995 0.626107 35.914207 56.511055 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 11 0.50000000 0.547910 1.140029 1.480128 0.603190 0.783137 0.113957 0.740064 14.002877 48.012808 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 12 0.59996014 0.484354 0.923329 1.387359 0.488534 0.734053 0.092296 0.832360 -7.667091 
38.735908 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 13 0.70001993 0.406472 0.707808 1.290225 0.374502 0.682659 0.070823 0.903183 -29.219194 29.022508 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 14 0.79998007 0.312288 0.512542 1.193051 0.271186 0.631245 0.051234 0.954417 -48.745814 19.305101 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 15 0.89994020 0.181031 0.333529 1.097580 0.176471 0.580731 0.033340 0.987757 -66.647092 9.758030 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 16 1.00000000 0.000332 0.122360 1.000000 0.064741 0.529101 0.012243 1.000000 -87.763956 0.000000 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Model Metrics Type: Binomial 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Description: Metrics reported on full validation frame 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: model id: DeepLearning_model_1540691693623_1 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: frame id: testHF 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: MSE: 0.1734764 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: RMSE: 0.41650498 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: AUC: 0.82392573 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: logloss: 0.519945 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: mean_per_class_error: 0.27632737 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: default threshold: 0.4431946277618408 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class): 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 0 1 Error Rate 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 0 600334 432493 0.4187 432,493 / 1,032,827 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1 156068 1009419 0.1339 156,068 / 1,165,487 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 
INFO: Totals 756402 1441912 0.2677 588,561 / 2,198,314 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Gains/Lift Table (Avg response rate: 53.02 %): 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Group Cumulative Data Fraction Lower Threshold Lift Cumulative Lift Response Rate Cumulative Response Rate Capture Rate Cumulative Capture Rate Gain Cumulative Gain 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1 0.01000039 0.942709 1.850056 1.850056 0.980850 0.980850 0.018501 0.018501 85.005551 85.005551 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2 0.02000033 0.927157 1.829118 1.839587 0.969749 0.975300 0.018291 0.036792 82.911828 83.958713 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 3 0.03000026 0.915037 1.818994 1.832723 0.964382 0.971660 0.018190 0.054982 81.899369 83.272276 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 4 0.04000020 0.904276 1.795741 1.823478 0.952054 0.966759 0.017957 0.072939 79.574146 82.347754 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 5 0.05000014 0.894180 1.782700 1.815322 0.945139 0.962435 0.017827 0.090766 78.269962 81.532203 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 6 0.10000027 0.847596 1.738033 1.776677 0.921458 0.941947 0.086902 0.177668 73.803266 77.667734 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 7 0.14999995 0.802427 1.665031 1.739462 0.882755 0.922216 0.083251 0.260919 66.503132 73.946223 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 8 0.20000009 0.755289 1.580760 1.699787 0.838076 0.901181 0.079038 0.339957 58.075978 69.978653 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 9 0.29999991 0.665356 1.455411 1.618328 0.771620 0.857994 0.145541 0.485498 45.541142 61.832828 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 10 0.40000018 0.602567 1.309955 1.541235 0.694503 0.817121 0.130996 0.616494 30.995527 54.123485 10-28 12:39:31.566 ***.***.***.***:54321 25930 
FJ-1-3 INFO: 11 0.50000000 0.546179 1.127994 1.458587 0.598032 0.773304 0.112799 0.729293 12.799404 45.858684 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 12 0.59999982 0.483054 0.930368 1.370551 0.493256 0.726629 0.093037 0.822330 -6.963186 37.055052 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 13 0.70000009 0.407534 0.743078 1.280911 0.393960 0.679105 0.074308 0.896638 -25.692210 28.091134 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 14 0.79999991 0.311927 0.551745 1.189766 0.292520 0.630782 0.055174 0.951812 -44.825539 18.976566 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 15 0.89999973 0.182314 0.352351 1.096720 0.186807 0.581451 0.035235 0.987047 -64.764880 9.671975 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 16 1.00000000 0.000002 0.129525 1.000000 0.068671 0.530173 0.012953 1.000000 -87.047510 0.000000 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Variable Importances: 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Variable Relative Importance Scaled Importance Percentage 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features25 1.000000 1.000000 0.133907 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features26 0.863066 0.863066 0.115570 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features27 0.610918 0.610918 0.081806 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features22 0.566826 0.566826 0.075902 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features24 0.495027 0.495027 0.066288 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features21 0.464018 0.464018 0.062135 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features0 0.383988 0.383988 0.051419 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features5 0.365159 0.365159 0.048897 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features3 0.260043 0.260043 0.034822 10-28 12:39:31.566 
***.***.***.***:54321 25930 FJ-1-3 INFO: features8 0.222461 0.222461 0.029789 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: --- 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features17 0.128377 0.128377 0.017191 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features10 0.099615 0.099615 0.013339 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features14 0.082811 0.082811 0.011089 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features18 0.075230 0.075230 0.010074 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features2 0.063171 0.063171 0.008459 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features11 0.062316 0.062316 0.008345 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features4 0.059529 0.059529 0.007971 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features15 0.058508 0.058508 0.007835 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features19 0.057827 0.057827 0.007743 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: features7 0.053465 0.053465 0.007159 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Status of Neuron Layers (predicting label, 2-class classification, bernoulli distribution, CrossEntropy loss, 516,502 weights/biases, 5.9 MB, 8,807,558 training samples, mini-batch size 1): 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Layer Units Type Dropout L1 L2 Mean Rate Rate RMS Momentum Mean Weight Weight RMS Mean Bias Bias RMS 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 1 28 Input 0.00 % 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2 500 RectifierDropout 50.00 % 0.000000 0.000000 0.005289 0.002722 0.000000 -0.014167 0.325117 -0.823028 0.329013 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 3 500 RectifierDropout 50.00 % 0.000000 0.000000 0.014575 0.013544 0.000000 -0.057675 0.122358 0.040999 0.388743 10-28 12:39:31.566 ***.***.***.***:54321 
25930 FJ-1-3 INFO: 4 500 RectifierDropout 50.00 % 0.000000 0.000000 0.043309 0.038748 0.000000 -0.022375 0.112984 -0.475442 0.620491 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 5 2 Softmax 0.000000 0.000000 0.002478 0.001711 0.000000 0.006162 0.193755 -0.019024 0.100142 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Scoring History: 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: Timestamp Duration Training Speed Epochs Iterations Samples Training RMSE Training LogLoss Training AUC Training Lift Training Classification Error Validation RMSE Validation LogLoss Validation AUC Validation Lift Validation Classification Error 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2018-10-28 11:10:47 0.000 sec 0.00000 0 0.000000 NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2018-10-28 11:10:58 12 min 26.167 sec 931 obs/sec 0.00106 1 9297.000000 0.49032 0.67343 0.64872 1.66544 0.42177 0.49014 0.67306 0.64736 1.65092 0.41711 10-28 12:39:31.566 ***.***.***.***:54321 25930 FJ-1-3 INFO: 2018-10-28 12:25:42 1:28:46.680 2341 obs/sec 1.00067 953 8807558.000000 0.41302 0.51378 0.83251 1.87129 0.25015 0.41650 0.51995 0.82393 1.85006 0.26773 10-28 12:39:31.570 ***.***.***.***:54321 25930 FJ-1-3 INFO: ============================================================================================================================================================================== dlModel: hex.deeplearning.DeepLearningModel = Model Metrics Type: Binomial Description: Metrics reported on temporary training frame with 10034 samples model id: DeepLearning_model_1540691693623_1 frame id: trainingHF.temporary.sample.0.11% MSE: 0.17058964 RMSE: 0.413025 AUC: 0.8325065 logloss: 0.5137846 mean_per_class_error: 0.2561099 default threshold: 0.47307735681533813 CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class): 0 1 Error Rate 0 3031 1694 0.3585 1,694 / 4,725 1 816 4493 
0.1537 816 / 5,309 Totals 3847 6187 0.2501 2,510 / 10,034 Gains/Lift Table (Avg response rate: 52.91 %): Group Cumulative Data Fraction Lower Threshold Lift Cumulative Lift Response Rate Cumulative Respo... scala> println(s"DL Model: ${dlModel}") DL Model: Model Metrics Type: Binomial Description: Metrics reported on temporary training frame with 10034 samples model id: DeepLearning_model_1540691693623_1 frame id: trainingHF.temporary.sample.0.11% MSE: 0.17058964 RMSE: 0.413025 AUC: 0.8325065 logloss: 0.5137846 mean_per_class_error: 0.2561099 default threshold: 0.47307735681533813 CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class): 0 1 Error Rate 0 3031 1694 0.3585 1,694 / 4,725 1 816 4493 0.1537 816 / 5,309 Totals 3847 6187 0.2501 2,510 / 10,034 Gains/Lift Table (Avg response rate: 52.91 %): Group Cumulative Data Fraction Lower Threshold Lift Cumulative Lift Response Rate Cumulative Response Rate Capture Rate Cumulative Capture Rate Gain Cumulative Gain 1 0.01006578 0.938660 1.871285 1.871285 0.990099 0.990099 0.018836 0.018836 87.128526 87.128526 2 0.02003189 0.924062 1.833298 1.852386 0.970000 0.980100 0.018271 0.037107 83.329817 85.238621 3 0.02999801 0.911974 1.852198 1.852324 0.980000 0.980066 0.018459 0.055566 85.219815 85.232374 4 0.04006378 0.902853 1.684157 1.810073 0.891089 0.957711 0.016952 0.072518 68.415674 81.007282 5 0.05002990 0.894835 1.795498 1.807170 0.950000 0.956175 0.017894 0.090413 79.549821 80.716951 6 0.10005980 0.847356 1.728106 1.767638 0.914343 0.935259 0.086457 0.176869 72.810585 76.763768 7 0.14999003 0.801989 1.663651 1.733022 0.880240 0.916944 0.083066 0.259936 66.365104 73.302153 8 0.20001993 0.753095 1.611393 1.702599 0.852590 0.900847 0.080618 0.340554 61.139282 70.259920 9 0.29998007 0.665526 1.496170 1.633812 0.791625 0.864452 0.149557 0.490111 49.616999 63.381233 10 0.40003986 0.603491 1.359142 1.565111 0.719124 0.828102 0.135995 0.626107 35.914207 56.511055 11 0.50000000 0.547910 1.140029 1.480128 
0.603190 0.783137 0.113957 0.740064 14.002877 48.012808 12 0.59996014 0.484354 0.923329 1.387359 0.488534 0.734053 0.092296 0.832360 -7.667091 38.735908 13 0.70001993 0.406472 0.707808 1.290225 0.374502 0.682659 0.070823 0.903183 -29.219194 29.022508 14 0.79998007 0.312288 0.512542 1.193051 0.271186 0.631245 0.051234 0.954417 -48.745814 19.305101 15 0.89994020 0.181031 0.333529 1.097580 0.176471 0.580731 0.033340 0.987757 -66.647092 9.758030 16 1.00000000 0.000332 0.122360 1.000000 0.064741 0.529101 0.012243 1.000000 -87.763956 0.000000 Model Metrics Type: Binomial Description: Metrics reported on full validation frame model id: DeepLearning_model_1540691693623_1 frame id: testHF MSE: 0.1734764 RMSE: 0.41650498 AUC: 0.82392573 logloss: 0.519945 mean_per_class_error: 0.27632737 default threshold: 0.4431946277618408 CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class): 0 1 Error Rate 0 600334 432493 0.4187 432,493 / 1,032,827 1 156068 1009419 0.1339 156,068 / 1,165,487 Totals 756402 1441912 0.2677 588,561 / 2,198,314 Gains/Lift Table (Avg response rate: 53.02 %): Group Cumulative Data Fraction Lower Threshold Lift Cumulative Lift Response Rate Cumulative Response Rate Capture Rate Cumulative Capture Rate Gain Cumulative Gain 1 0.01000039 0.942709 1.850056 1.850056 0.980850 0.980850 0.018501 0.018501 85.005551 85.005551 2 0.02000033 0.927157 1.829118 1.839587 0.969749 0.975300 0.018291 0.036792 82.911828 83.958713 3 0.03000026 0.915037 1.818994 1.832723 0.964382 0.971660 0.018190 0.054982 81.899369 83.272276 4 0.04000020 0.904276 1.795741 1.823478 0.952054 0.966759 0.017957 0.072939 79.574146 82.347754 5 0.05000014 0.894180 1.782700 1.815322 0.945139 0.962435 0.017827 0.090766 78.269962 81.532203 6 0.10000027 0.847596 1.738033 1.776677 0.921458 0.941947 0.086902 0.177668 73.803266 77.667734 7 0.14999995 0.802427 1.665031 1.739462 0.882755 0.922216 0.083251 0.260919 66.503132 73.946223 8 0.20000009 0.755289 1.580760 1.699787 0.838076 0.901181 
0.079038 0.339957 58.075978 69.978653 9 0.29999991 0.665356 1.455411 1.618328 0.771620 0.857994 0.145541 0.485498 45.541142 61.832828 10 0.40000018 0.602567 1.309955 1.541235 0.694503 0.817121 0.130996 0.616494 30.995527 54.123485 11 0.50000000 0.546179 1.127994 1.458587 0.598032 0.773304 0.112799 0.729293 12.799404 45.858684 12 0.59999982 0.483054 0.930368 1.370551 0.493256 0.726629 0.093037 0.822330 -6.963186 37.055052 13 0.70000009 0.407534 0.743078 1.280911 0.393960 0.679105 0.074308 0.896638 -25.692210 28.091134 14 0.79999991 0.311927 0.551745 1.189766 0.292520 0.630782 0.055174 0.951812 -44.825539 18.976566 15 0.89999973 0.182314 0.352351 1.096720 0.186807 0.581451 0.035235 0.987047 -64.764880 9.671975 16 1.00000000 0.000002 0.129525 1.000000 0.068671 0.530173 0.012953 1.000000 -87.047510 0.000000 Variable Importances: Variable Relative Importance Scaled Importance Percentage features25 1.000000 1.000000 0.133907 features26 0.863066 0.863066 0.115570 features27 0.610918 0.610918 0.081806 features22 0.566826 0.566826 0.075902 features24 0.495027 0.495027 0.066288 features21 0.464018 0.464018 0.062135 features0 0.383988 0.383988 0.051419 features5 0.365159 0.365159 0.048897 features3 0.260043 0.260043 0.034822 features8 0.222461 0.222461 0.029789 --- features17 0.128377 0.128377 0.017191 features10 0.099615 0.099615 0.013339 features14 0.082811 0.082811 0.011089 features18 0.075230 0.075230 0.010074 features2 0.063171 0.063171 0.008459 features11 0.062316 0.062316 0.008345 features4 0.059529 0.059529 0.007971 features15 0.058508 0.058508 0.007835 features19 0.057827 0.057827 0.007743 features7 0.053465 0.053465 0.007159 Status of Neuron Layers (predicting label, 2-class classification, bernoulli distribution, CrossEntropy loss, 516,502 weights/biases, 5.9 MB, 8,807,558 training samples, mini-batch size 1): Layer Units Type Dropout L1 L2 Mean Rate Rate RMS Momentum Mean Weight Weight RMS Mean Bias Bias RMS 1 28 Input 0.00 % 2 500 RectifierDropout 50.00 % 
0.000000 0.000000 0.005289 0.002722 0.000000 -0.014167 0.325117 -0.823028 0.329013 3 500 RectifierDropout 50.00 % 0.000000 0.000000 0.014575 0.013544 0.000000 -0.057675 0.122358 0.040999 0.388743 4 500 RectifierDropout 50.00 % 0.000000 0.000000 0.043309 0.038748 0.000000 -0.022375 0.112984 -0.475442 0.620491 5 2 Softmax 0.000000 0.000000 0.002478 0.001711 0.000000 0.006162 0.193755 -0.019024 0.100142 Scoring History: Timestamp Duration Training Speed Epochs Iterations Samples Training RMSE Training LogLoss Training AUC Training Lift Training Classification Error Validation RMSE Validation LogLoss Validation AUC Validation Lift Validation Classification Error 2018-10-28 11:10:47 0.000 sec 0.00000 0 0.000000 NaN NaN NaN NaN NaN NaN NaN NaN NaN NaN 2018-10-28 11:10:58 12 min 26.167 sec 931 obs/sec 0.00106 1 9297.000000 0.49032 0.67343 0.64872 1.66544 0.42177 0.49014 0.67306 0.64736 1.65092 0.41711 2018-10-28 12:25:42 1:28:46.680 2341 obs/sec 1.00067 953 8807558.000000 0.41302 0.51378 0.83251 1.87129 0.25015 0.41650 0.51995 0.82393 1.85006 0.26773 |

|


scala> val testPredictions = dlModel.score(testHF)
testPredictions: water.fvec.Frame =
Frame key: _b2172fcfe3fcb11d5cc7099ba9a44c9d
   cols: 3
   rows: 2198314
 chunks: 60
   size: 35480039

scala> import water.support.ModelMetricsSupport._
import water.support.ModelMetricsSupport._

scala> import _root_.hex.ModelMetricsBinomial
import _root_.hex.ModelMetricsBinomial

scala> val dlMetrics = modelMetrics[ModelMetricsBinomial](dlModel, testHF)
dlMetrics: hex.ModelMetricsBinomial =
Model Metrics Type: Binomial
 Description: N/A
 model id: DeepLearning_model_1540691693623_1
 frame id: testHF
 MSE: 0.1734764
 RMSE: 0.41650498
 AUC: 0.82392573
 logloss: 0.519945
 mean_per_class_error: 0.27632737
 default threshold: 0.4431946277618408
 CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class):
              0        1   Error                   Rate
      0  600334   432493  0.4187    432,493 / 1,032,827
      1  156068  1009419  0.1339    156,068 / 1,165,487
 Totals  756402  1441912  0.2677    588,561 / 2,198,314
Gains/Lift Table (Avg response rate: 53.02 %):
  Group  Cumulative Data Fraction  Lower Threshold  Lift  Cumulative Lift  Response Rate  Cumulative Response Rate  Capture Rate  Cumulative Capture Rate ...
scala> println(dlMetrics)
Model Metrics Type: Binomial
 Description: N/A
 model id: DeepLearning_model_1540691693623_1
 frame id: testHF
 MSE: 0.1734764
 RMSE: 0.41650498
 AUC: 0.82392573
 logloss: 0.519945
 mean_per_class_error: 0.27632737
 default threshold: 0.4431946277618408
 CM: Confusion Matrix (Row labels: Actual class; Column labels: Predicted class):
              0        1   Error                   Rate
      0  600334   432493  0.4187    432,493 / 1,032,827
      1  156068  1009419  0.1339    156,068 / 1,165,487
 Totals  756402  1441912  0.2677    588,561 / 2,198,314
Gains/Lift Table (Avg response rate: 53.02 %):
  Group  Cumulative Data Fraction  Lower Threshold  Lift  Cumulative Lift  Response Rate  Cumulative Response Rate  Capture Rate  Cumulative Capture Rate  Gain  Cumulative Gain
  1 0.01000039 0.942709 1.850056 1.850056 0.980850 0.980850 0.018501 0.018501 85.005551 85.005551
  2 0.02000033 0.927157 1.829118 1.839587 0.969749 0.975300 0.018291 0.036792 82.911828 83.958713
  3 0.03000026 0.915037 1.818994 1.832723 0.964382 0.971660 0.018190 0.054982 81.899369 83.272276
  4 0.04000020 0.904276 1.795741 1.823478 0.952054 0.966759 0.017957 0.072939 79.574146 82.347754
  5 0.05000014 0.894180 1.782700 1.815322 0.945139 0.962435 0.017827 0.090766 78.269962 81.532203
  6 0.10000027 0.847596 1.738033 1.776677 0.921458 0.941947 0.086902 0.177668 73.803266 77.667734
  7 0.14999995 0.802427 1.665031 1.739462 0.882755 0.922216 0.083251 0.260919 66.503132 73.946223
  8 0.20000009 0.755289 1.580760 1.699787 0.838076 0.901181 0.079038 0.339957 58.075978 69.978653
  9 0.29999991 0.665356 1.455411 1.618328 0.771620 0.857994 0.145541 0.485498 45.541142 61.832828
  10 0.40000018 0.602567 1.309955 1.541235 0.694503 0.817121 0.130996 0.616494 30.995527 54.123485
  11 0.50000000 0.546179 1.127994 1.458587 0.598032 0.773304 0.112799 0.729293 12.799404 45.858684
  12 0.59999982 0.483054 0.930368 1.370551 0.493256 0.726629 0.093037 0.822330 -6.963186 37.055052
  13 0.70000009 0.407534 0.743078 1.280911 0.393960 0.679105 0.074308 0.896638 -25.692210 28.091134
  14 0.79999991 0.311927 0.551745 1.189766 0.292520 0.630782 0.055174 0.951812 -44.825539 18.976566
  15 0.89999973 0.182314 0.352351 1.096720 0.186807 0.581451 0.035235 0.987047 -64.764880 9.671975
  16 1.00000000 0.000002 0.129525 1.000000 0.068671 0.530173 0.012953 1.000000 -87.047510 0.000000
|
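
As a sanity check on the testHF metrics printed above, the error rates can be recomputed by hand from the four confusion-matrix counts. The sketch below is plain Scala and does not call the H2O API; the names `ConfusionMatrix` and `ConfusionMatrixCheck` are hypothetical helpers introduced only for this illustration, and the counts are copied from the log.

```scala
// Hypothetical helper (not part of H2O): holds the four cells of a binary
// confusion matrix, with rows = actual class and columns = predicted class.
final case class ConfusionMatrix(tn: Long, fp: Long, fn: Long, tp: Long) {
  def total: Long = tn + fp + fn + tp
  // Share of actual class-0 rows that were predicted as class 1.
  def errorClass0: Double = fp.toDouble / (tn + fp)
  // Share of actual class-1 rows that were predicted as class 0.
  def errorClass1: Double = fn.toDouble / (fn + tp)
  // Share of all rows that were misclassified.
  def overallError: Double = (fp + fn).toDouble / total
  // Unweighted average of the two per-class error rates.
  def meanPerClassError: Double = (errorClass0 + errorClass1) / 2.0
}

object ConfusionMatrixCheck {
  // Counts copied from the "full validation frame" (testHF) matrix in the log.
  val testCM: ConfusionMatrix =
    ConfusionMatrix(tn = 600334L, fp = 432493L, fn = 156068L, tp = 1009419L)

  def main(args: Array[String]): Unit = {
    println(f"class 0 error:        ${testCM.errorClass0}%.4f")
    println(f"class 1 error:        ${testCM.errorClass1}%.4f")
    println(f"overall error:        ${testCM.overallError}%.4f")
    println(f"mean per-class error: ${testCM.meanPerClassError}%.6f")
  }
}
```

The values should agree, up to rounding, with the `Error` column and the `mean_per_class_error` line reported by H2O. Note that class 0 is misclassified far more often than class 1, which the overall error rate alone does not show.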